Additional protocol containers

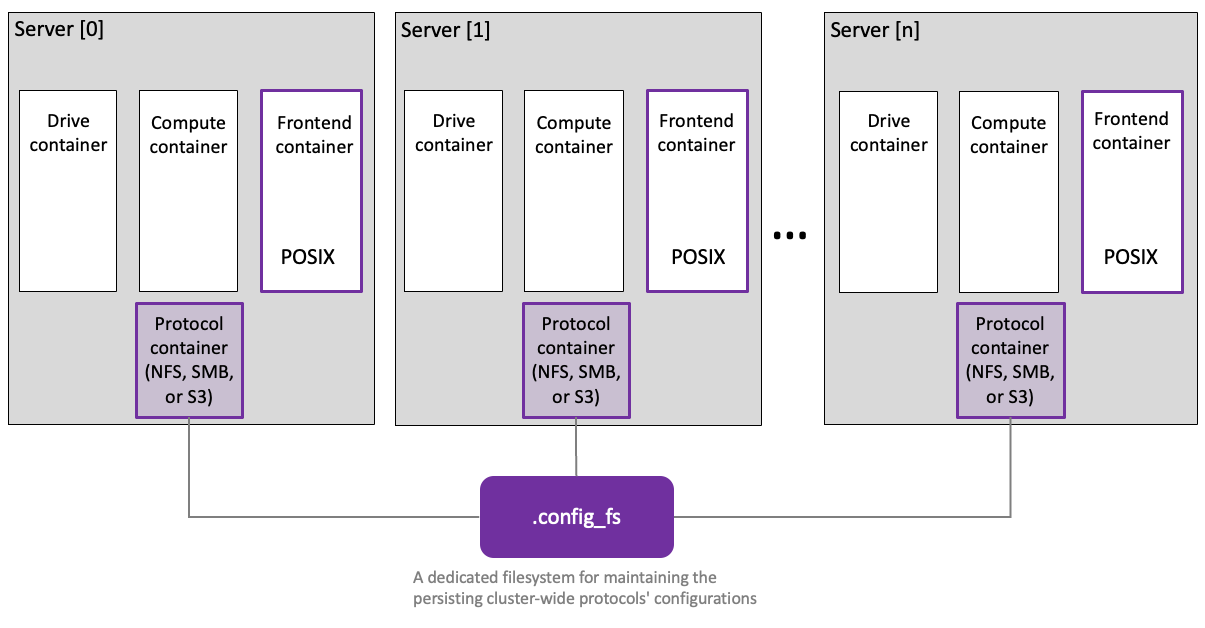

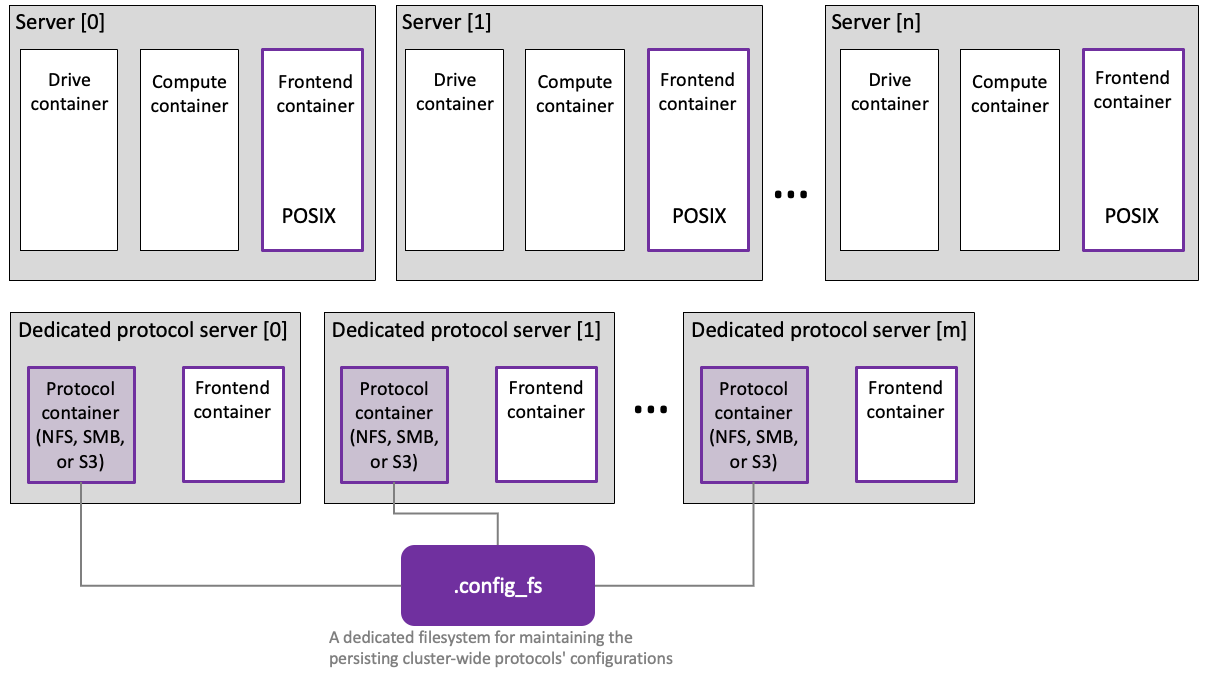

In a WEKA cluster, the frontend container provides the default POSIX protocol, serving as the primary access point for the distributed filesystem. You can also define protocol containers for NFS, SMB, and S3 clients.

To configure protocol containers, you have two options for creating a cluster for the specified protocol:

Set up protocol services on existing backend servers.

Prepare additional dedicated servers for the protocol containers.

In cloud environments, setting up protocol services on existing backend servers (option 1) is not supported. Instead, use option 2 and prepare additional dedicated servers (protocol gateways) when creating the main.tf file.

For more details, refer to the relevant deployment section:

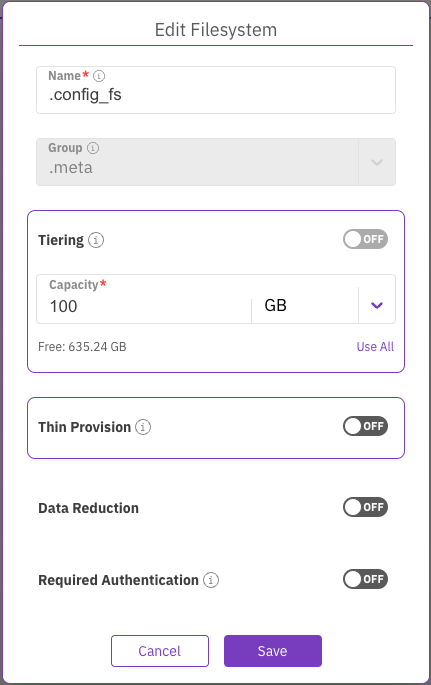

Dedicated filesystem requirement for cluster-wide persistent protocol configurations

A dedicated filesystem is required to maintain persistent protocol configurations across a cluster. This filesystem coordinates coherent, synchronized access to files from multiple servers. Give this configuration filesystem a meaningful name, for example, .config_fs. Set its total capacity to 100 GB, and do not enable additional features such as tiering or thin provisioning.

When establishing a Data Services container for background tasks, it is recommended to increase the .config_fs size to 122 GB (an additional 22 GB on top of the initial 100 GB). For further details, see Set up a Data Services container for background tasks.
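The sizing guidance above can be expressed as a small pre-creation check. The following Python sketch is illustrative only: the spec dictionary, its keys (total_capacity_gb, tiering, thin_provisioning), and both helper functions are hypothetical and not part of any WEKA API. The sketch also treats the stated sizes (100 GB, or 122 GB with a Data Services container) as minimum capacities, which is an assumption.

```python
# Hypothetical helpers: names and spec keys are illustrative, not a WEKA API.
BASE_CONFIG_FS_GB = 100       # required .config_fs capacity
DATA_SERVICES_EXTRA_GB = 22   # recommended addition with a Data Services container


def required_config_fs_gb(with_data_services: bool) -> int:
    """Return the recommended .config_fs capacity in GB."""
    return BASE_CONFIG_FS_GB + (DATA_SERVICES_EXTRA_GB if with_data_services else 0)


def validate_config_fs(spec: dict, with_data_services: bool = False) -> list:
    """Check a hypothetical filesystem spec against the .config_fs guidance.

    Returns a list of problems; an empty list means the spec follows the guidance.
    Capacity is treated as a minimum (an assumption of this sketch).
    """
    problems = []
    needed = required_config_fs_gb(with_data_services)
    if spec.get("total_capacity_gb", 0) < needed:
        problems.append(f"capacity must be at least {needed} GB")
    if spec.get("tiering", False):
        problems.append("tiering must be disabled")
    if spec.get("thin_provisioning", False):
        problems.append("thin provisioning must be disabled")
    return problems


# Usage: a 100 GB spec passes on its own but is too small once Data Services is added.
spec = {"name": ".config_fs", "total_capacity_gb": 100, "tiering": False, "thin_provisioning": False}
print(validate_config_fs(spec))                           # []
print(validate_config_fs(spec, with_data_services=True))  # ['capacity must be at least 122 GB']
```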

.config_fs setting example

Related topics

Add a filesystem group (a prerequisite for creating a filesystem using the GUI)

Create a filesystem (using the GUI)

Create a filesystem (using the CLI)

Set up protocol containers on existing backend servers

With this option, you configure the existing cluster to provide the required protocol containers. The following topics guide you through the configuration for each protocol:

Prepare dedicated protocol servers

Dedicated protocol servers enhance the cluster's capabilities and address diverse use cases. Each dedicated protocol server in the cluster can host one of these additional protocol containers alongside the existing frontend container.

These dedicated protocol servers are full, permanent members of the WEKA cluster. They run the processes required to access WEKA filesystems and include the switches that support the protocols.

Dedicated protocol servers offer the following advantages:

Optimized performance: Dedicated CPU resources provide efficient, predictable protocol performance and improve overall resource utilization.

Independent protocol scaling: Scale specific protocols independently, mitigating resource contention and ensuring consistent performance across the cluster.
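The placement rule above (one additional protocol container per dedicated server, alongside the frontend container) and the idea of scaling each protocol independently can be modeled in a few lines. This Python sketch is a hypothetical illustration, not WEKA code: the ProtocolServer class, the servers_per_protocol helper, and the container labels are assumptions made for the example.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List, Optional

# Protocols that a protocol container can serve, per the overview above.
SUPPORTED_PROTOCOLS = {"NFS", "SMB", "S3"}


@dataclass
class ProtocolServer:
    """Hypothetical model of a dedicated protocol server."""
    hostname: str
    protocol: Optional[str] = None  # None until a protocol container is assigned

    def assign_protocol(self, protocol: str) -> None:
        """Add the server's single protocol container; reject a second one."""
        if protocol not in SUPPORTED_PROTOCOLS:
            raise ValueError(f"unsupported protocol: {protocol}")
        if self.protocol is not None:
            raise ValueError(f"{self.hostname} already hosts a {self.protocol} container")
        self.protocol = protocol

    @property
    def containers(self) -> List[str]:
        # The frontend container is always present; the protocol container is optional.
        return ["frontend"] + ([self.protocol] if self.protocol else [])


def servers_per_protocol(servers: Iterable[ProtocolServer]) -> Counter:
    """Count servers per protocol; each count can grow independently of the others."""
    return Counter(s.protocol for s in servers if s.protocol)


# Usage: two servers dedicated to NFS, one to SMB.
servers = [ProtocolServer(f"proto{i}") for i in range(3)]
servers[0].assign_protocol("NFS")
servers[1].assign_protocol("NFS")
servers[2].assign_protocol("SMB")
print(servers[0].containers)          # ['frontend', 'NFS']
print(servers_per_protocol(servers))  # Counter({'NFS': 2, 'SMB': 1})
```

In this model, scaling NFS means adding servers assigned to NFS without touching the SMB or S3 servers.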

Procedure

Install the WEKA software on the dedicated protocol servers: Do one of the following:

Follow the default method as specified in Manually install OS and WEKA on servers.

Use the WEKA agent to install from a working backend. The following commands demonstrate this method:

Create the WEKA container for running protocols: The dedicated protocol servers must be flagged as permanent members of the WEKA cluster that can run protocols. Although backends typically fulfill this role, you can create containers with the required options on the protocol servers, as in the following command example:

Configure dedicated protocol servers for optimal performance

Running the setup command creates a local container named frontend0 that provides access to the WEKA filesystems. As when setting up a backend container, you must specify parameters such as the cores and net options.

The example above uses in-kernel UDP networking for simplicity; however, dedicated networking (DPDK) is strongly recommended for better performance.

Specify DPDK networking with a flag similar to --net=eth1/192.168.114.XXX/24. As with other DPDK interfaces in WEKA, the interface specified here is claimed by WEKA's DPDK implementation and becomes unavailable to the Linux kernel for communication.

Ensure that your dedicated protocol servers have enough network interfaces, particularly if you intend to dedicate NICs to WEKA, so that interfaces remain available to the Linux kernel after DPDK claims the dedicated ones. This keeps the configuration aligned with WEKA's performance recommendations.

Check the dedicated protocol servers: Verify that the servers have joined the cluster by using the following command:

With dedicated protocol servers in place, the next step is to manage individual protocols.
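The --net value format and the DPDK interface-claiming behavior described in the optimization notes above can be sanity-checked before running the setup command. The following Python sketch is illustrative only: parse_net_flag and kernel_visible_nics are hypothetical helpers, not part of the WEKA CLI. It assumes an IPv4 address in the interface/IP/prefix form of the example flag, and the address 192.168.114.10 is an example value standing in for the XXX placeholder.

```python
import ipaddress
from typing import Iterable, List, NamedTuple


class NetSpec(NamedTuple):
    interface: str                    # NIC that DPDK will claim, e.g. eth1
    address: ipaddress.IPv4Interface  # IP address plus prefix length


def parse_net_flag(value: str) -> NetSpec:
    """Parse a --net value shaped like eth1/192.168.114.10/24 (interface/IP/prefix)."""
    if value.startswith("--net="):
        value = value[len("--net="):]
    parts = value.split("/")
    if len(parts) != 3:
        raise ValueError(f"expected <interface>/<ip>/<prefix>, got {value!r}")
    name, ip, prefix = parts
    # IPv4Interface raises a ValueError subclass on a malformed address or prefix.
    return NetSpec(name, ipaddress.IPv4Interface(f"{ip}/{prefix}"))


def kernel_visible_nics(all_nics: Iterable[str], net_flags: Iterable[str]) -> List[str]:
    """Return the NICs that stay available to the Linux kernel after DPDK claims
    every interface named in the --net values."""
    claimed = {parse_net_flag(flag).interface for flag in net_flags}
    return [nic for nic in all_nics if nic not in claimed]


# Usage: a server with two NICs, dedicating eth1 to WEKA (example address only).
spec = parse_net_flag("--net=eth1/192.168.114.10/24")
print(spec.interface, spec.address.ip, spec.address.network)
# eth1 192.168.114.10 192.168.114.0/24
remaining = kernel_visible_nics(["eth0", "eth1"], ["--net=eth1/192.168.114.10/24"])
print(remaining)  # ['eth0']
```

An empty result from kernel_visible_nics signals that every NIC would be claimed by DPDK, leaving none for kernel communication.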

Related topics
