WEKA Operator day-2 operations

Manage hardware, scale clusters, and optimize resources to ensure system stability and performance.

WEKA Operator day-2 operations maintain and optimize WEKA environments in Kubernetes clusters by focusing on these core areas:

Observability and monitoring

Scraping and visualizing metrics with standard Kubernetes monitoring tools such as Prometheus and Grafana.

Hardware maintenance

Component replacement and node management

Hardware failure remediation

Cluster scaling

Resource allocation optimization

Controlled cluster expansion

Cluster maintenance

Configuration updates

Pod rotation

Token secret management

WekaContainer lifecycle management

Administrators execute both planned maintenance and emergency responses while following standardized procedures to ensure high availability and minimize service disruption.

Observability and monitoring

Starting with version v1.7.0, the WEKA Operator exposes health and performance metrics for WEKA clusters, including throughput, CPU utilization, IOPS, and API requests. These metrics are available by default and can be collected and visualized using standard Kubernetes monitoring tools such as Prometheus and Grafana. No additional installation flags or custom Prometheus configurations are required.

Related topic

Monitor WEKA clusters in Kubernetes with Prometheus and Grafana

Hardware maintenance

Hardware maintenance operations ensure cluster reliability and performance through systematic component management and failure response procedures. These operations span from routine preventive maintenance to critical component replacements.

Key operations:

Node management

Graceful and forced node reboots.

Node replacement and removal.

Complete rack decommissioning procedures.

Container operations

Container migration from failed nodes.

Container replacement on active nodes.

Container management on denylisted nodes.

Storage management

Drive replacement in converged setups.

Storage integrity verification.

Component failure recovery.

Each procedure follows established protocols to maintain system stability and minimize service disruption during maintenance activities. The documented procedures enable administrators to execute both planned maintenance and emergency responses while preserving data integrity and system performance.

Before you begin

Before performing any hardware maintenance or replacement tasks, ensure you have:

Administrative access to your Kubernetes cluster.

SSH access to the cluster nodes.

kubectl command-line tool installed and configured.

Proper backup of any critical data on the affected components.

Required replacement hardware (if applicable).

Maintenance window scheduled (if required).

Perform standard verification steps

This procedure describes the standard verification steps for checking WEKA cluster health. Multiple procedures in this documentation refer to these verification steps to confirm successful completion of their respective tasks.

Procedure

Log in to a WekaContainer pod:

Check the WEKA cluster status:

Check cluster containers.

Check the WEKA filesystem status.

Verify that the status of the WEKA cluster processes is UP.

Check that all pods are up and running.
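The checks above can be run from a workstation. The following Python sketch assumes that kubectl is configured for the cluster, that the WEKA pods run in the weka-operator-system namespace, and that the pod you pass (a placeholder here) belongs to a WekaContainer whose image provides the weka CLI with the status, cluster container, fs, and cluster process commands. It prints each command and executes it only when dry_run is False.

```python
import shlex
import subprocess

NAMESPACE = "weka-operator-system"

# WEKA CLI checks that correspond to the verification steps above.
WEKA_CHECKS = [
    ["weka", "status"],                # overall cluster status
    ["weka", "cluster", "container"],  # cluster containers
    ["weka", "fs"],                    # filesystem status
    ["weka", "cluster", "process"],    # process status (expect UP)
]


def verification_commands(pod: str, namespace: str = NAMESPACE) -> list[list[str]]:
    """Build one `kubectl exec` command per WEKA check, plus a pod listing."""
    cmds = [["kubectl", "exec", "-n", namespace, pod, "--", *check] for check in WEKA_CHECKS]
    # Last step: confirm that all pods in all namespaces are running.
    cmds.append(["kubectl", "get", "pods", "--all-namespaces", "-o", "wide"])
    return cmds


def run_verification(pod: str, dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in verification_commands(pod):
        print("$", shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

For example, run_verification("<wekacontainer-pod>", dry_run=False) runs all five checks and stops at the first command that fails.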

Force reboot a machine

A force reboot may be necessary when a machine becomes unresponsive or encounters a critical error that cannot be resolved through standard troubleshooting. This task ensures the machine restarts and resumes normal operation.

Procedure

Phase 1: Perform standard verification steps.

Phase 2: Cordon and evict backend k8s nodes.

To cordon and evict a node, run the following commands. Replace <k8s_node_IP> with the target k8s node's IP address.

Cordon the backend k8s node:

Example:

Evict the running pods, ensuring that pod-local data is removed. For example, drain the backend k8s node:

Validate node status:

Verify pod statuses across namespaces:
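The Phase 2 commands can be sketched in Python as follows. This assumes kubectl is configured for the cluster and that the node name is the node's IP address, as in the examples of this procedure. The drain flags are standard kubectl flags; --delete-emptydir-data is the one that removes pod-local (emptyDir) data. Whether your environment also needs --force, for pods not managed by a controller, is not covered by this guide.

```python
import shlex
import subprocess


def cordon_and_drain_commands(node: str) -> list[list[str]]:
    """Commands for Phase 2: cordon, drain, then check node and pod state."""
    return [
        # Mark the node unschedulable so no new pods land on it.
        ["kubectl", "cordon", node],
        # Evict pods; DaemonSet pods are skipped and emptyDir data is deleted.
        ["kubectl", "drain", node, "--ignore-daemonsets", "--delete-emptydir-data"],
        # The node should now report Ready,SchedulingDisabled.
        ["kubectl", "get", "nodes"],
        # Review pod status and placement across all namespaces.
        ["kubectl", "get", "pods", "--all-namespaces", "-o", "wide"],
    ]


def run(cmds: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in cmds:
        print("$", shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

For example, run(cordon_and_drain_commands("<k8s_node_IP>"), dry_run=False) processes one backend node; repeat it per node.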

Phase 3: Ensure the WEKA containers are marked as drained.

List the cluster backend containers. Run the following command to display the current status of all WEKA containers on the k8s nodes:

Check the status of the WEKA containers. In the command output, locate the STATUS column for the relevant containers. Verify that it displays DRAINED for the host and backend containers.

Phase 4: Force a reboot on all backend k8s nodes.

Use the reboot -f command to force a reboot on each backend k8s node.

Example:

After running this command, the node restarts immediately. Repeat for each backend k8s node in your environment, one at a time.

Phase 5: Uncordon the backend k8s node and verify WEKA cluster status.

Uncordon the backend k8s node:

Example:

Access the WEKA Operator in the backend k8s node:

Verify the WEKA drive status:

Ensure that all pods, WEKA containers, and the cluster are in a healthy state (Fully Protected) and that I/O operations are running (STARTED). Monitor the data redistribution progress and alerts.

Phase 6: Cordon and drain all client k8s nodes.

To cordon and drain a node, run the following commands. Replace <k8s_node_IP> with the target k8s node's IP address.

Cordon the client k8s node to mark it as unschedulable:

Example:

Evict the workload. For example, drain the client k8s node to evict running pods, ensuring data is removed:

Force reboot all client nodes. Example for one client k8s node:

After the client k8s nodes are back up, uncordon them. Example for one client k8s node:

After uncordoning all client Kubernetes nodes, verify that:

All regular pods remain scheduled and running on those nodes.

All client containers within the cluster are joined and operational.

Only pods designated for data I/O operations are evicted.

Phase 7: Force a reboot on all client k8s nodes.

Use the reboot -f command to force a reboot on each client k8s node.

Example for one client k8s node:

After running this command, the client node restarts immediately. Repeat for all client nodes in your environment.

Phase 8: Uncordon all client k8s nodes.

Once the client k8s nodes are back online, uncordon them to restore their availability for scheduling workloads. Example command for uncordoning a single client k8s node:

Verify pod status across all k8s nodes to confirm that all pods are running as expected:

Validate WEKA cluster status to ensure all containers are operational:

See examples in Perform standard verification steps.

Remove a rack or Kubernetes node

Removing a rack or Kubernetes (k8s) node is necessary when you need to decommission hardware, replace failed components, or reconfigure your cluster. This procedure guides you through safely removing nodes without disrupting your system operations.

Procedure

Create failure domain labels for your nodes:

Label nodes with two machines per failure domain:

Label nodes with one machine per failure domain:

Apply the NoSchedule taint to nodes in failure domains:

Remove WEKA labels from the untainted node:

Configure the WekaCluster:

Create a configuration file named cluster.yaml with the following content:

Apply the configuration:

Verify failure domain configuration:

Check container distribution across failure domains using the weka cluster container command.

Test failure domain behavior by draining the nodes that share the same failure domain (FD):

Reboot the drained nodes:

Monitor workload redistribution: check that workloads are redistributed to other failure domains while the nodes in one FD are down.
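One way to keep failure domain labels consistent is to generate them from a node list. The following Python sketch groups nodes in order, per_domain nodes per failure domain (2 for the first layout above, 1 for the second), emits kubectl label commands, and lists the nodes that share a domain for the drain test. The label key example.com/failure-domain and the fd1, fd2, ... values are hypothetical placeholders; use the key that your WekaCluster configuration expects.

```python
# Hypothetical label key: replace it with the key that your WekaCluster
# failure-domain configuration actually references.
FD_LABEL_KEY = "example.com/failure-domain"


def failure_domain_plan(nodes: list[str], per_domain: int, prefix: str = "fd") -> dict[str, str]:
    """Assign consecutive nodes to failure domains, `per_domain` nodes each."""
    if per_domain < 1:
        raise ValueError("per_domain must be at least 1")
    return {node: f"{prefix}{i // per_domain + 1}" for i, node in enumerate(nodes)}


def label_commands(plan: dict[str, str], key: str = FD_LABEL_KEY) -> list[list[str]]:
    """kubectl commands that apply the plan; --overwrite makes re-runs safe."""
    return [
        ["kubectl", "label", "node", node, f"{key}={domain}", "--overwrite"]
        for node, domain in plan.items()
    ]


def nodes_in_domain(plan: dict[str, str], domain: str) -> list[str]:
    """Nodes that share one failure domain, for example to drain them together."""
    return [node for node, d in plan.items() if d == domain]
```

With four nodes and per_domain=2, nodes_in_domain(plan, "fd2") returns the two nodes to drain and reboot when testing failure domain behavior.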

Expected results

After completing this procedure:

Your nodes are properly configured with failure domains.

Workloads are distributed according to the failure domain configuration.

The system is ready for node removal with minimal disruption.

Troubleshooting

If workloads do not redistribute as expected after node drain:

Check node labels and taints.

Verify the WekaCluster configuration.

Review the Kubernetes scheduler logs for any errors.

Perform a graceful node reboot on client nodes

A graceful node reboot ensures minimal service disruption when you need to restart a node for maintenance, updates, or configuration changes. The procedure involves cordoning the node, draining workloads, performing the reboot, and then returning the node to service.

Procedure

Cordon the Kubernetes node to prevent new workloads from being scheduled:

Drain the node to safely evict all pods:

The system displays warnings about DaemonSet-managed pods being ignored. This is expected behavior.

Verify the node status shows as SchedulingDisabled:

Reboot the target node:

Wait for the node to complete its reboot cycle and return to a Ready state:

Uncordon the node to allow new workloads to be scheduled:

Verify that pods are running correctly on the node:

See examples in Perform standard verification steps.
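The Kubernetes side of this procedure can be expressed as two command lists, one for before and one for after the reboot. This Python sketch leaves the reboot itself out, because how you reach the host (SSH, BMC, or console) depends on your environment. It uses the standard kubectl wait command to block until the node reports Ready; the 900-second timeout is an arbitrary example value.

```python
def before_reboot(node: str) -> list[list[str]]:
    """Cordon and drain the node, then confirm it shows SchedulingDisabled."""
    return [
        ["kubectl", "cordon", node],
        ["kubectl", "drain", node, "--ignore-daemonsets", "--delete-emptydir-data"],
        ["kubectl", "get", "node", node],
    ]


def after_reboot(node: str, timeout: str = "900s") -> list[list[str]]:
    """Wait for Ready, uncordon, and list the pods scheduled on the node."""
    return [
        # Run this only after the node has actually gone down: a node that
        # still reports Ready satisfies the wait immediately.
        ["kubectl", "wait", "--for=condition=Ready", f"node/{node}", f"--timeout={timeout}"],
        ["kubectl", "uncordon", node],
        ["kubectl", "get", "pods", "--all-namespaces", "-o", "wide",
         "--field-selector", f"spec.nodeName={node}"],
    ]
```

Keeping the two lists separate makes the manual reboot step explicit and avoids a script that races the node going down.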

Expected results

After completing this procedure:

The node has completed a clean reboot cycle.

All pods are rescheduled and running.

The node is available for new workload scheduling.

Troubleshooting

If pods fail to start after the reboot:

Check pod status and events using kubectl describe pod <pod-name>.

Review node conditions using kubectl describe node <node-ip>.

Examine system logs for any errors or warnings.

Replace a drive in a converged setup

Drive replacement is necessary when hardware failures occur or system upgrades are required. Following this procedure ensures minimal system disruption while maintaining data integrity.

Before you begin

Ensure that a replacement drive is ready for installation.

Identify the node and drive that need replacement.

Ensure you have the necessary permissions to execute Kubernetes commands.

Back up any critical data if necessary.

Procedure

List and record drive information:

List the available drives on the target node:

Identify the serial ID of the drives:

Record the current drive configuration:

Save the serial ID of the drive being replaced for later use.

Remove node label: Remove the WEKA backend support label from the target node:

Delete drive container: Delete the WEKA container object associated with the drive. Then, verify that the container pod enters a pending state and the drive is removed from the cluster.

Sign the new drive:

Create a YAML configuration file for drive signing:

Apply the configuration:

Block the old drive:

Create a YAML configuration file for blocking the old drive:

Apply the configuration:

Restore node label: Re-add the WEKA backend support label to the node:

Verify the replacement:

Check the cluster drive status:

Verify that:

The new drive appears in the cluster.

The drive status is ACTIVE.

The serial ID matches the replacement drive.
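The signing step applies a WekaManualOperation object. The following Python sketch builds such an object as JSON, which kubectl apply -f accepts in place of YAML, and targets a single node through the standard kubernetes.io/hostname label. The apiVersion, the action value sign-drives, and the signDrivesPayload field names are assumptions that this guide does not confirm; check them against the CRD installed in your cluster (for example, with kubectl explain wekamanualoperation.spec) before use. The device path is a placeholder.

```python
import json
from typing import Optional


def sign_drives_manifest(
    name: str,
    node_selector: dict[str, str],
    device_paths: list[str],
    image: Optional[str] = None,
    namespace: str = "weka-operator-system",
) -> dict:
    """Build a WekaManualOperation that signs the given device paths.

    The apiVersion, action value, and payload layout are assumptions.
    """
    spec = {
        "action": "sign-drives",  # assumed action name
        "payload": {
            "signDrivesPayload": {  # assumed payload layout
                "type": "device-paths",
                "nodeSelector": dict(node_selector),
                "devicePaths": list(device_paths),
            }
        },
    }
    if image:
        spec["image"] = image  # WEKA container image, if your CRD requires one
    return {
        "apiVersion": "weka.weka.io/v1alpha1",  # assumed API group and version
        "kind": "WekaManualOperation",
        "metadata": {"name": name, "namespace": namespace},
        "spec": spec,
    }


def write_manifest(manifest: dict, path: str) -> None:
    """Write the manifest as JSON; kubectl apply -f accepts JSON and YAML."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(manifest, f, indent=2)
```

For example, write_manifest(sign_drives_manifest("sign-new-drive", {"kubernetes.io/hostname": "<node-name>"}, ["<device-path>"]), "sign-drive.json") followed by kubectl apply -f sign-drive.json.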

Troubleshooting

If the container pod remains in a pending state, check the pod events and logs.

If drive signing fails, verify the device path and node selector.

If the old drive remains visible, ensure the block operation completed successfully.

Maintain system stability by replacing one drive at a time.

Keep track of all serial IDs involved in the replacement process.

Monitor system health throughout the procedure.

Replace a Kubernetes node

Use this procedure to replace a node that has performance issues or hardware failures, or as part of routine maintenance, while maintaining cluster functionality and minimizing service interruption.

Prerequisites

Identification of the node to be replaced.

A new node prepared for integration into the cluster.

Procedure

Remove node deployment label: Remove the existing label used to deploy the cluster from the node:

List existing WEKA containers to identify containers on the node:

Delete the compute and drive containers specific to the node:

Verify container deletion:

Verify containers are in PodNotRunning status.

Confirm no containers are running on the old node.

Look for:

STATUS column showing PodNotRunning.

No containers associated with the old node.

Add backend label to new node: Label the new node to support backends:

Sign drives on new node:

Create a WekaManualOperation configuration to sign drives:

Apply the configuration:

Verification steps:

Verify WEKA containers are rescheduled.

Check that new containers are running on the new node's IP.

Validate cluster status using WEKA CLI.

For details, see Perform standard verification steps.
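The label and container steps of this procedure map to the following kubectl commands, sketched in Python. The label key weka.io/supports-backends appears elsewhere in this guide; the label value "true" and the wekacontainer resource name are assumptions. The container names are the compute and drive WekaContainers you identified on the old node. Sign the drives on the new node afterwards, as described above.

```python
BACKEND_LABEL = "weka.io/supports-backends"
NAMESPACE = "weka-operator-system"


def replace_node_commands(old_node: str, new_node: str, containers: list[str],
                          value: str = "true") -> list[list[str]]:
    """Commands that move WEKA backends from old_node to new_node, in order."""
    cmds = [
        # A trailing '-' tells kubectl to remove the label.
        ["kubectl", "label", "node", old_node, f"{BACKEND_LABEL}-"],
        # List WekaContainers (resource name assumed) to find those on old_node.
        ["kubectl", "get", "wekacontainers", "-n", NAMESPACE, "-o", "wide"],
    ]
    # Delete the compute and drive containers that ran on the old node.
    cmds += [["kubectl", "delete", "wekacontainer", c, "-n", NAMESPACE] for c in containers]
    # Allow WEKA backends on the replacement node (label value assumed).
    cmds.append(["kubectl", "label", "node", new_node, f"{BACKEND_LABEL}={value}", "--overwrite"])
    return cmds
```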

Non-functional node replacement:

When a node becomes unresponsive or faulty, delete the non-functional node:

kubectl delete node <node-name>

Kubernetes automatically handles the following:

Detects node failure.

Removes affected containers.

Reschedules containers to available nodes.

Troubleshooting

If containers fail to reschedule, check:

Node labels

Drive signing process

Cluster resource availability

Network connectivity

Remove WEKA container from a failed node

Removing a WEKA container from a failed node is necessary to maintain cluster health and prevent any negative impact on system performance. This procedure ensures that the container is removed safely and the cluster remains operational.

Procedure: Remove WEKA container from an active node

To remove a WEKA container when the node is responsive, run the following:

Procedure: Remove WEKA container from a failed node (unresponsive)

Apply the configuration:

If the resign drives operation fails with the error "container node is not ready, cannot perform resign drives operation", set the skip flag:

Wait for the pod to enter the Terminating state.

If the failed node is removed from the Kubernetes cluster, the WEKA container and corresponding stuck pod are automatically removed.

Resign drives manually

If you need to manually resign specific drives, create and apply the following YAML configuration:

Verification

You can verify the removal process by checking the WEKA container conditions. A successful removal shows the following conditions in order:

ContainerDrivesDeactivated

ContainerDeactivated

ContainerDrivesRemoved

ContainerRemoved

ContainerDrivesResigned
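To check progress programmatically, you can compare the reached conditions against the order listed above. This Python sketch assumes that the conditions appear under .status.conditions with the standard Kubernetes fields type, status, and lastTransitionTime, as returned by kubectl get wekacontainer <name> -n weka-operator-system -o json. Those field locations are an assumption; the condition names come from the list above.

```python
EXPECTED_ORDER = [
    "ContainerDrivesDeactivated",
    "ContainerDeactivated",
    "ContainerDrivesRemoved",
    "ContainerRemoved",
    "ContainerDrivesResigned",
]


def ordered_true_conditions(conditions: list[dict]) -> list[str]:
    """Condition types with status "True", oldest transition first.

    RFC 3339 timestamps in UTC ("...Z") sort correctly as strings.
    """
    reached = [c for c in conditions if c.get("status") == "True"]
    reached.sort(key=lambda c: c.get("lastTransitionTime", ""))
    return [c["type"] for c in reached]


def removal_progress(observed: list[str]) -> tuple[int, bool]:
    """Return (steps reached, whether they follow the documented order)."""
    seen = [t for t in observed if t in EXPECTED_ORDER]
    return len(seen), seen == EXPECTED_ORDER[: len(seen)]
```

A result of (5, True) corresponds to a complete, successful removal.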

Replace a container on an active node

Replacing a container on an active node allows for system upgrades or failure recovery without shutting down services. This procedure ensures that the replacement is performed smoothly, keeping the cluster operational while the container is swapped out.

Procedure

Phase 1: Delete the existing container

Identify the container to be replaced:

Delete the selected container:

Phase 2: Monitor deactivation process

Verify that the container and its drives are being deactivated:

Expected status: The container shows DRAINED (DOWN) under the STATUS column.

Check the process status:

Expected status: The processes associated with the container show DOWN status.

For drive containers, verify drive status:

Look for:

Drive status changes from ACTIVE to FAILED for the affected container.

All other drives remain ACTIVE.

Phase 3: Monitor container recreation

Watch for the new container creation:

Verify the new container's integration with the cluster:

Expected result: A new container appears with UP status.

Verify the new container's running status:

Expected status: Running.

Confirm the container's integration with the WEKA cluster:

Expected status: UP.

For drive containers, verify drive activity:

Expected status: All drives display ACTIVE status.

See examples in Perform standard verification steps.

Troubleshooting

If the container remains in Terminating state:

Check the container events:

Review the operator logs for error messages.

Verify resource availability for the new container.

For failed container starts, check:

Node resource availability

Network connectivity

Service status

Replace a container on a denylisted node

Replacing a container on a denylisted node is necessary when the node is flagged as problematic and impacts cluster performance. This procedure ensures safe container replacement, restoring system stability.

Procedure

Remove the backend label (for example, weka.io/supports-backends) from the node that hosts the WEKA container, to prevent that node from being chosen for the new container.

Delete the pod containing the WEKA container. This action prompts the WEKA cluster to recreate the container, ensuring that it is not placed on the denylisted node.

Monitor the container recreation and pod scheduling status. The container remains in a pending state because the label was removed.

Expected results

The container pod enters Pending state.

Pod scheduling fails with message: "nodes are available: x node(s) didn't match Pod's node affinity/selector".

The container is prevented from running on the denied node.

Troubleshooting

If the pod schedules successfully on the denied node:

Verify the backend support label was removed successfully.

Check node taints and tolerations.

Review pod scheduling policies and constraints.

Cluster scaling

Adjusting the size of a WEKA cluster ensures optimal performance and cost efficiency. Expand to meet growing workloads or shrink to reduce resources as demand decreases.

Expand a cluster

Cluster expansion enhances system resources and storage capacity while maintaining cluster stability. This procedure describes how to expand a WEKA cluster by increasing the number of compute and drive containers.

This example expands a cluster from 6 compute and 6 drive containers to 7 compute and 7 drive containers. Each drive container has one drive core.

Before you begin

Verify the following:

Ensure sufficient resources are available.

Ensure valid Quay.io credentials for WEKA container images.

Ensure access to the WEKA operator namespace.

Check the number of available Kubernetes nodes using kubectl get nodes.

Ensure all existing WEKA containers are in Running state.

Confirm your cluster is healthy with weka status.

Procedure

Update the cluster configuration in your YAML file by increasing the number of compute and drive containers:

Apply the updated configuration:
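If you prefer to change the counts without opening the YAML file, a JSON merge patch can express the same change. The following Python sketch builds the patch for this example (7 compute and 7 drive containers) and the matching kubectl patch command. The spec.dynamicTemplate field names and the WekaCluster namespace are assumptions; align them with your cluster.yaml. If you manage the cluster from cluster.yaml, make the same change there as well, so a later kubectl apply does not revert it.

```python
import json

NAMESPACE = "weka-operator-system"


def container_count_patch(compute: int, drive: int) -> dict:
    """Merge patch that sets the compute and drive container counts.

    The dynamicTemplate field names are assumptions; match them to cluster.yaml.
    """
    if compute < 1 or drive < 1:
        raise ValueError("container counts must be positive")
    return {"spec": {"dynamicTemplate": {"computeContainers": compute, "driveContainers": drive}}}


def patch_command(cluster: str, patch: dict, namespace: str = NAMESPACE) -> list[str]:
    """kubectl command that applies the patch as a JSON merge patch."""
    return ["kubectl", "patch", "wekacluster", cluster, "-n", namespace,
            "--type", "merge", "-p", json.dumps(patch)]


def expected_backend_containers(patch: dict) -> int:
    """Total backend containers the patch asks for (compute + drive)."""
    template = patch["spec"]["dynamicTemplate"]
    return template["computeContainers"] + template["driveContainers"]
```

For this example, expected_backend_containers(container_count_patch(7, 7)) gives the 14 backend containers listed in the expected results.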

Expected results

Total of 14 backend containers (7 compute + 7 drive).

All new containers show status as UP.

The weka status output shows increased storage capacity.

Protection status remains Fully protected.

Troubleshooting

If containers remain in Pending state, verify available node capacity.

Check for sufficient resources across Kubernetes nodes.

Review WEKA operator logs for expansion-related issues.

Considerations

The number of containers cannot exceed available Kubernetes nodes.

Pending containers indicate resource constraints or node availability issues.

Each expansion requires sufficient system resources across the cluster.

If your cluster has resource constraints or insufficient nodes, container creation may remain in a pending state until additional nodes become available.

Expand an S3 cluster

Expanding an S3 cluster is necessary when additional storage or improved performance is required. Follow the steps below to expand the cluster while maintaining data availability and integrity.

Procedure

Update cluster YAML: Increase the number of S3 containers in the cluster YAML file and re-deploy the configuration. Example YAML update:

Apply the changes:

Verify new pods: Confirm that additional S3 and Envoy pods are created and running. Use the following command to list all pods:

Ensure two new S3 and Envoy pods appear in the output and are in the Running state.

Validate expansion: Verify the S3 cluster has expanded to include the updated number of containers. Check the cluster status and ensure no errors are present. Use these commands for validation:

Confirm the updated configuration reflects four S3 containers and all components are operational.

Shrink a cluster

A WEKA cluster shrink operation reduces the number of compute and drive containers to optimize resource usage and system footprint. Shrinking can free resources, lower costs, align capacity with demand, or support infrastructure decommissioning. Perform this operation carefully to ensure data integrity and service availability.

Before you begin

Verify the following:

Cluster is in a healthy state before beginning.

The WEKA cluster is operational and with sufficient redundancy.

At least one hot spare configured for safe container removal.

Procedure

Modify the cluster configuration:

Apply the updated configuration:

Verify the desired state change:

Replace <cluster-name> with your specific value.

Remove specific containers:

Identify the containers to remove:

Delete the compute container:

Delete the drive container:

Verify cluster stability:

Check container status.

Monitor cluster health.

Verify data protection status.

Expected results

Reduced number of active containers and related pods.

Cluster status shows Running.

All remaining containers running properly.

Data protection maintained.

No service disruption.

Troubleshooting

If cluster shows degraded status, verify hot spare availability.

Check operator logs for potential issues.

Ensure proper container termination.

Verify resource redistribution.

Limitations

Manual container removal required.

Must maintain minimum required containers for protection level.

Hot spare needed for safe removal.

Cannot remove containers below protection requirement.

Related topics

Expand and shrink cluster resources

Increase client cores

When system demands increase, you may need to add more processing power by increasing the number of client cores. This procedure shows how to increase client cores from 1 to 2 cores to improve system performance while maintaining stability.

Prerequisites

Sufficient hugepage memory (1500MiB per core).
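A quick way to check this prerequisite on a node is to compare 1500 MiB per core with the free hugepage memory reported in /proc/meminfo. This Python sketch assumes 2 MiB hugepages by default. Because a running client already holds the hugepages for its current core, the free value understates what becomes available after the pods restart, so treat the comparison as conservative.

```python
HUGEPAGE_MIB_PER_CORE = 1500  # prerequisite stated above


def required_hugepages_mib(cores: int) -> int:
    """Hugepage memory, in MiB, needed for the given number of client cores."""
    return cores * HUGEPAGE_MIB_PER_CORE


def required_pages(cores: int, page_size_mib: int = 2) -> int:
    """Number of hugepages of page_size_mib needed, rounded up."""
    return -(-required_hugepages_mib(cores) // page_size_mib)


def free_hugepages_mib(meminfo: str) -> int:
    """Free hugepage memory in MiB, parsed from the text of /proc/meminfo."""
    fields = {}
    for line in meminfo.splitlines():
        key, _, rest = line.partition(":")
        fields[key.strip()] = rest.split()
    free_pages = int(fields["HugePages_Free"][0])
    page_size_kib = int(fields["Hugepagesize"][0])  # for example "2048 kB"
    return free_pages * page_size_kib // 1024
```

On the node, free_hugepages_mib(open("/proc/meminfo").read()) >= required_hugepages_mib(2) answers whether two client cores fit. Two cores need 3000 MiB, which is 1500 pages of 2 MiB.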

Procedure

Update the WekaClient object configuration in your client YAML file:

AWS DPDK on EKS is not supported for this configuration.

Apply the updated client configuration:

Verify the new client core is added:

Replace <cluster-name> with your specific value.

Delete all client container pods to trigger the reconfiguration:

Replace <client-name> and <ip-address> with your specific values.

Verify the client containers have restarted and rejoined the cluster:

Look for pods with your client name prefix to confirm they are in Running state.

Confirm the core increase in the WEKA cluster using the following commands:

Verification

After completing these steps, verify that:

All client pods are in Running state.

The CORES value shows 2 for client containers.

The clients have successfully rejoined the cluster.

The system status shows no errors using weka status.

Troubleshooting

If clients fail to restart:

Ensure sufficient hugepage memory is available.

Check pod events for specific error messages.

Verify the client configuration in the YAML file is correct.

Increase backend cores

Increase the number of cores allocated to compute and drive containers to improve processing capacity for intensive workloads.

The following procedure increases computeCores and driveCores from 1 to 2.

Procedure

Modify the cluster YAML configuration to update core allocation:

Apply the updated configuration:

Verify the changes are applied to the cluster configuration:
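To confirm the change programmatically, you can compare the object the API server returns with the values you intended to set. This Python sketch builds an equivalent merge patch (usable with kubectl patch wekacluster <cluster-name> --type merge -p ...) and checks a WekaCluster object retrieved with kubectl get wekacluster <cluster-name> -o json. The spec.dynamicTemplate.computeCores and driveCores paths are assumptions; use the paths from your cluster YAML. A True result only shows that the specification was accepted, not that the running containers already use the new core count.

```python
import json


def core_patch(compute_cores: int, drive_cores: int) -> dict:
    """Merge patch for the per-container core counts (field paths assumed)."""
    return {"spec": {"dynamicTemplate": {"computeCores": compute_cores, "driveCores": drive_cores}}}


def cores_applied(cluster: dict, compute_cores: int, drive_cores: int) -> bool:
    """True if a WekaCluster object (parsed from `-o json`) has the new values."""
    template = cluster.get("spec", {}).get("dynamicTemplate", {})
    return (template.get("computeCores") == compute_cores
            and template.get("driveCores") == drive_cores)
```

For example, cores_applied(json.loads(output), 2, 2), where output is the text returned by kubectl get wekacluster <cluster-name> -o json.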

Troubleshooting

If core values are not updated after applying changes:

Verify the YAML syntax is correct.

Ensure the cluster configuration was successfully applied.

Check for any error messages in the cluster events:

Core allocation changes may require additional steps for full implementation.

Monitor cluster performance after making changes.

Consider testing in a non-production environment first.

Contact support if core values persist at previous settings after applying changes.

Cluster maintenance

Cluster maintenance ensures optimal performance, security, and reliability through regular updates. Key tasks include updating WekaCluster and WekaClient configurations, rotating pods to apply changes, and creating a token secret for WekaClient.

Update WekaCluster configuration

This topic explains how to update WekaCluster configuration parameters to enhance cluster performance or resolve issues.

You can update the following WekaCluster parameters:

AdditionalMemory (spec.AdditionalMemory)

Tolerations (spec.Tolerations)

RawTolerations (spec.RawTolerations)

DriversDistService (spec.DriversDistService)

ImagePullSecret (spec.ImagePullSecret)

Upgrade WEKA cluster version (spec.image)

After completing each of the following procedures, all pods restart within a few minutes to apply the new configuration.

Procedure: Update AdditionalMemory

Open your cluster.yaml file and update the additional memory values:

Apply the updated configuration:

Delete the WekaContainer pods:

Verify that the memory values have been updated to the new settings.

Procedure: Update Tolerations

Open your cluster.yaml file and update the toleration values:

Apply the updated configuration:

Delete all WekaContainer pods:

Procedure: Update DriversDistService

Open your cluster.yaml file and update the DriversDistService value:

Apply the updated configuration:

Delete the WEKA driver distribution pods:

Procedure: Update ImagePullSecret

Open your cluster.yaml file and update the ImagePullSecret value:

Apply the updated configuration:

Delete all WekaContainer pods:

Procedure: Upgrade WEKA cluster version

Open your cluster.yaml file and update the WEKA cluster image value:

Apply the updated configuration:

Troubleshooting

If pods do not restart automatically or the new configuration is not applied, verify:

The syntax in your cluster.yaml file is correct.

You have the necessary permissions to modify the cluster configuration.

The cluster is in a healthy state.

Update WekaClient configuration

This topic explains how to update WekaClient configuration parameters to ensure optimal client interactions with the cluster.

You can update the following WekaClient parameters:

DriversDistService (spec.DriversDistService)

ImagePullSecret (spec.ImagePullSecret)

WekaSecretRef (spec.WekaSecretRef)

AdditionalMemory (spec.AdditionalMemory)

UpgradePolicy (spec.UpgradePolicy)

DriversLoaderImage (spec.DriversLoaderImage)

Port (spec.Port)

AgentPort (spec.AgentPort)

PortRange (spec.PortRange)

CoresNumber (spec.CoresNumber)

Tolerations (spec.Tolerations)

RawTolerations (spec.RawTolerations)

Upgrade WEKA client version (spec.image)

After completing each of the following procedures, all pods restart within a few minutes to apply the new configuration.

Before you begin

Before updating any WekaClient configuration:

Ensure you have access to the client.yaml configuration file or client CRD.

Verify you have the necessary permissions to modify client configurations.

Back up your current configuration.

Ensure the cluster is in a healthy state and accessible to clients.

Procedure: Update DriversDistService

Open your client.yaml file and update the DriversDistService value:

Apply the updated configuration:

Delete the client pods:

Procedure: Update ImagePullSecret

Open your client.yaml file and update the ImagePullSecret value:

Apply the updated configuration:

Delete the client pods:

Procedure: Update Additional Memory

Open your client.yaml file and update the additional memory values:

Apply the updated configuration:

Delete the client pods:

Procedure: Update Tolerations

Open your client.yaml file and update the toleration values:

Apply the updated configuration:

Delete the client pods:

Procedure: Update WekaSecretRef

Open your client.yaml file and update the WekaSecretRef value:

Apply the updated configuration:

Delete the client pods:

Procedure: Update Port Configuration

This procedure demonstrates how to migrate from specific port and agentPort configurations to a portRange configuration for WEKA clients.

Deploy the initial client configuration with specific ports:

Apply the initial configuration:

Verify the clients are running with the initial port configuration:

Update the client YAML by removing the port and agentPort specifications and adding portRange:

Apply the updated configuration:

Delete the existing client container pods to trigger reconfiguration:

Replace <client-name> and <ip-address> with your specific values.

Verify that the pods have restarted and rejoined the cluster:

Procedure: Update CoresNumber

Open your client.yaml file and update the CoresNumber value:

Apply the updated configuration:

Delete the client pods:

Procedure: Upgrade WEKA client version

Open your client.yaml file and update the WEKA client image value:

Apply the updated configuration:

Troubleshooting

If pods do not restart automatically or the new configuration is not applied, verify:

The syntax in your client.yaml file is correct.

You have the necessary permissions to modify the client configuration.

The cluster is in a healthy state and accessible to clients.

The specified ports are available and not blocked by network policies.

Rotate all pods when applying changes

Rotating pods after updating cluster configuration ensures changes are properly applied across all containers.

Procedure

Apply the updated cluster configuration:

Delete all container pods and verify that all pods restart and reach the Running state within a few minutes. In the following commands, replace * with the actual container names.

Delete the drive pods:

Delete the S3 pods:

Delete the envoy pods:

Verification

Monitor pod status until all pods return to Running state:

Verify the configuration changes are applied by checking pod resources:

Expected results

All pods return to Running state within a few minutes.

Resource configurations match the updated values in the cluster configuration.

No service disruption during the rotation process.

Pods automatically restart after deletion.

The system maintains availability during pod rotation.

Wait for each set of pods to begin restarting before proceeding to the next set.
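The rotation can be scripted so that each group is deleted in a separate step, letting you confirm that one group is restarting before you delete the next. In this Python sketch, the name fragments drive, s3, and envoy used to select each group are assumptions about your pod naming, standing in for the * placeholders above. The --wait=false flag makes kubectl return without waiting for the deletion to finish, so check pod status between steps.

```python
NAMESPACE = "weka-operator-system"
# Substrings assumed to identify each pod group; adjust to your pod names.
DEFAULT_GROUPS = ("drive", "s3", "envoy")


def parse_pod_names(output: str) -> list[str]:
    """Parse `kubectl get pods -n <ns> -o name` output into bare pod names."""
    return [line.strip().removeprefix("pod/") for line in output.splitlines() if line.strip()]


def rotation_commands(pods: list[str], groups: tuple[str, ...] = DEFAULT_GROUPS,
                      namespace: str = NAMESPACE) -> list[list[str]]:
    """One `kubectl delete` per group, in order; each pod is deleted at most once."""
    cmds, taken = [], set()
    for group in groups:
        batch = [p for p in pods if group in p and p not in taken]
        if batch:
            taken.update(batch)
            cmds.append(["kubectl", "delete", "pod", "-n", namespace, "--wait=false", *batch])
    return cmds
```

Run the returned commands one at a time, and watch kubectl get pods -n weka-operator-system until the previous group is restarting before running the next one.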

Create token secret for WekaClient

WekaClient tokens used for cluster authentication have a limited lifespan and will eventually expire. This guide walks you through the process of generating a new token, encoding it properly, and creating the necessary Kubernetes secret to maintain WekaClient connectivity.

Prerequisites

Access to a running WEKA cluster with backend servers

Kubernetes cluster with WEKA Operator deployed

kubectl access with appropriate permissions

Access to the weka-operator-system namespace

Step 1: Generate a new join token and encode it

The join token must be generated from within one of the WEKA backend containers. Follow these steps to create a long-lived token:

List the available pods in the weka-operator-system namespace:

Connect to a backend pod and generate the token:

This command creates a token that remains valid for 52 weeks (about one year). The command outputs a JWT similar to the following:

Encode the token: The generated token must be base64-encoded before use in the Kubernetes secret:

Save the base64-encoded output for use in the secret configuration.
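When encoding in a shell, two details are easy to miss: echo appends a newline that becomes part of the encoded value, and GNU base64 wraps long output at 76 characters, which can split a long JWT across lines. echo -n and base64 -w 0 avoid both. A Python equivalent that strips surrounding whitespace and produces a single line is:

```python
import base64


def encode_token(token: str) -> str:
    """Base64-encode a join token for the join-secret field of a Secret.

    Surrounding whitespace (such as the newline at the end of CLI output)
    is stripped first, and the result is a single unwrapped line.
    """
    return base64.b64encode(token.strip().encode("utf-8")).decode("ascii")
```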

Step 2: Create the Kubernetes secret

Option A: Using YAML template

Create a YAML file with the following template, replacing the placeholder values:

Configuration notes:

join-secret: Use the base64-encoded token from Step 1

org, username, password: Copy these values from the existing secret or create new base64-encoded values

namespace: Use default or specify your target namespace
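As an alternative to editing the template by hand, the following Python sketch generates the same Secret as a manifest that you can write as JSON (for example, with json.dump) and apply with kubectl apply -f. It takes plaintext values and encodes each one, so do not pass values that are already base64-encoded. The key names come from the notes above; Opaque is the default Kubernetes type for generic secrets. Keep the generated file out of version control, since base64 is an encoding, not encryption.

```python
import base64


def _b64(value: str) -> str:
    """Base64-encode a plaintext string for the data section of a Secret."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def client_secret_manifest(name: str, token: str, org: str, username: str,
                           password: str, namespace: str = "default") -> dict:
    """Secret with the four keys described above; plaintext inputs are encoded here."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {
            "join-secret": _b64(token.strip()),
            "org": _b64(org),
            "username": _b64(username),
            "password": _b64(password),
        },
    }
```

Kubernetes also accepts a stringData section with plaintext values and encodes them itself; the explicit data form above matches the template this guide describes.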

Option B: Copy from existing secret

To preserve existing credentials, export the current secret and modify only the token:

Edit the file to update the join-secret field with your new base64-encoded token.

Step 3: Apply the secret

Deploy the new secret to your Kubernetes cluster:

Verify the secret creation:

Example

In the following example, the secret is created in the default namespace with the name new-weka-client-secret-cluster1.

The following YAML file creates a new client named new-cluster1-clients in the default namespace using the new-weka-client-secret-cluster1 secret.

Step 4: Update WekaClient configuration

Remove any existing client instances and ensure no pods are actively using WEKA storage on the target node.

Remove active workloads

Identify pods using WEKA storage on the target node:

Stop workloads that use WEKA storage:
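The following sketch identifies and stops those workloads. A pod uses WEKA storage if it mounts a persistent volume claim (PVC) backed by WEKA, so the sketch also lists every PVC with its storage class. The WEKA storage class names are specific to your CSI configuration. Scaling a controller to zero replicas is one way to stop a workload. The helper names are hypothetical.

```shell
# List pods in all namespaces scheduled on node $1.
pods_on_node() {
  "${KUBECTL:-kubectl}" get pods -A --field-selector "spec.nodeName=$1" -o wide
}

# List every PVC with its storage class, to spot WEKA-backed claims.
pvc_storage_classes() {
  "${KUBECTL:-kubectl}" get pvc -A \
    -o custom-columns=NAMESPACE:.metadata.namespace,NAME:.metadata.name,CLASS:.spec.storageClassName
}

# Stop a workload by scaling its controller to zero.
#   $1 = kind/name (for example deployment/my-app), $2 = namespace
scale_down() {
  "${KUBECTL:-kubectl}" scale "$1" --replicas=0 -n "$2"
}
```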

Remove existing WekaClient

List current WekaClient instances:

Delete the existing client:
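The following sketch lists and deletes the existing client. It assumes the CRD can be addressed as wekaclient, because kubectl accepts the singular resource name of a custom resource. The helper names are hypothetical.

```shell
# List WekaClient resources in all namespaces.
list_wekaclients() {
  "${KUBECTL:-kubectl}" get wekaclient -A
}

# Delete a WekaClient ($1 = name, $2 = namespace).
delete_wekaclient() {
  "${KUBECTL:-kubectl}" delete wekaclient "$1" -n "$2"
}
```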

Deploy new WekaClient

Create a new WekaClient configuration that references your updated secret:

Apply the configuration:
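Applying the new configuration takes a single kubectl apply. The file name new-cluster1-clients.yaml in the usage line is a placeholder, and the helper name is hypothetical.

```shell
# Apply a WekaClient manifest ($1 = file path).
apply_wekaclient() {
  "${KUBECTL:-kubectl}" apply -f "$1"
}

# Usage:
#   apply_wekaclient new-cluster1-clients.yaml
```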

Step 5: Verify client status

Monitor the new WekaClient deployment:

The new client should show a Running status. CSI pods may temporarily enter CrashLoopBackOff state while the client initializes, but will recover automatically once the client is ready.
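To monitor Step 5, the following sketch prints the client's status and filters the pod listing for CSI pods. Matching on the substring csi is an assumption about how your CSI pods are named. The helper names are hypothetical.

```shell
# Show the WekaClient's status columns ($1 = name, $2 = namespace).
client_status() {
  "${KUBECTL:-kubectl}" get wekaclient "$1" -n "$2" -o wide
}

# Show pods whose listing line mentions "csi" (case-insensitive).
csi_pods() {
  "${KUBECTL:-kubectl}" get pods -A -o wide | grep -i csi
}
```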

Troubleshooting

CSI pods in CrashLoopBackOff

If CSI pods remain in a failed state after the WekaClient is running, manually restart them:
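Deleting the CSI pods restarts them, because their controller (typically a DaemonSet or Deployment) recreates them. The namespace and label selector are deployment-specific placeholders that you can find with kubectl get pods -A --show-labels. The helper name is hypothetical.

```shell
# Delete CSI pods so their controller recreates them.
#   $1 = CSI plugin namespace, $2 = label selector for its pods
restart_csi_pods() {
  "${KUBECTL:-kubectl}" delete pod -n "$1" -l "$2"
}
```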

Token validation

To verify that your token is working correctly, check the logs of the WekaClient pods:
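The following sketch tails a client pod's logs and shows the WekaClient's events. You look up the client pod name yourself, and the sketch does not assume any specific log message. The helper names are hypothetical.

```shell
# Tail the last 200 log lines of a client pod ($1 = pod, $2 = namespace).
client_logs() {
  "${KUBECTL:-kubectl}" logs "$1" -n "$2" --tail=200
}

# Show the WekaClient's details and recent events ($1 = name, $2 = namespace).
client_events() {
  "${KUBECTL:-kubectl}" describe wekaclient "$1" -n "$2"
}
```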

Secret verification

Confirm your secret contains the correct base64-encoded values:
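The following sketch decodes one key of the secret so that you can compare it with the value you intended to store. The kubectl jsonpath output is the still-encoded value, which is piped through base64 -d. The helper name is hypothetical.

```shell
# Print the decoded value of one data key.
#   $1 = secret name, $2 = namespace, $3 = key (for example join-secret)
decode_secret_field() {
  "${KUBECTL:-kubectl}" get secret "$1" -n "$2" -o "jsonpath={.data.$3}" | base64 -d
}

# Usage:
#   decode_secret_field new-weka-client-secret-cluster1 default join-secret
```

For join-secret, the decoded value should be the JWT from Step 1, which typically begins with eyJ. If it decodes to another base64 string instead, the token was encoded twice.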

Best practices

Token lifetime: Generate tokens with appropriate expiration times based on your maintenance schedule

Secret management: Store secrets in appropriate namespaces with proper RBAC controls

Documentation: Maintain records of token generation dates and expiration times

Monitoring: Implement alerts for token expiration to prevent service disruptions

Testing: Validate new tokens in non-production environments before deploying to production
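To support expiration alerts, the following sketch reads the exp claim from a JWT payload and reports the days remaining. It assumes the WEKA join token carries the standard JWT exp claim, expressed in seconds since the Unix epoch. The payload is base64url-encoded without padding, so the sketch translates the alphabet and restores the padding before decoding. It inspects the token only and does not verify its signature. The helper names are hypothetical.

```shell
# Print the exp claim (Unix seconds) of the JWT given as $1.
# Assumption: a standard JWT whose payload contains "exp".
# The signature is NOT verified; this only inspects the payload.
jwt_exp() {
  local payload
  # Take the second dot-separated segment and map base64url to base64.
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops "=" padding; restore it so base64 -d accepts the input.
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 -d |
    sed -n 's/.*"exp":[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# Print whole days until the token expires, truncated toward zero.
jwt_days_left() {
  local exp
  exp=$(jwt_exp "$1")
  if [ -z "$exp" ]; then
    echo "no exp claim found" >&2
    return 1
  fi
  echo $(( (exp - $(date +%s)) / 86400 ))
}

# Usage, for example from a scheduled job:
#   TOKEN=$(kubectl get secret <secret> -n <ns> -o 'jsonpath={.data.join-secret}' | base64 -d)
#   [ "$(jwt_days_left "$TOKEN")" -lt 30 ] && echo "join token expires within 30 days"
```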

Security considerations

Limit access to token generation commands to authorized personnel only

Use namespaces to isolate secrets from different environments

Regularly rotate tokens as part of your security policy

Monitor and audit secret access and modifications

WekaContainer lifecycle management

The WekaContainer serves as a critical persistence layer within a Kubernetes environment. Understanding its lifecycle is crucial because deleting a WekaContainer, whether gracefully or forcefully, results in the permanent loss of all associated data.

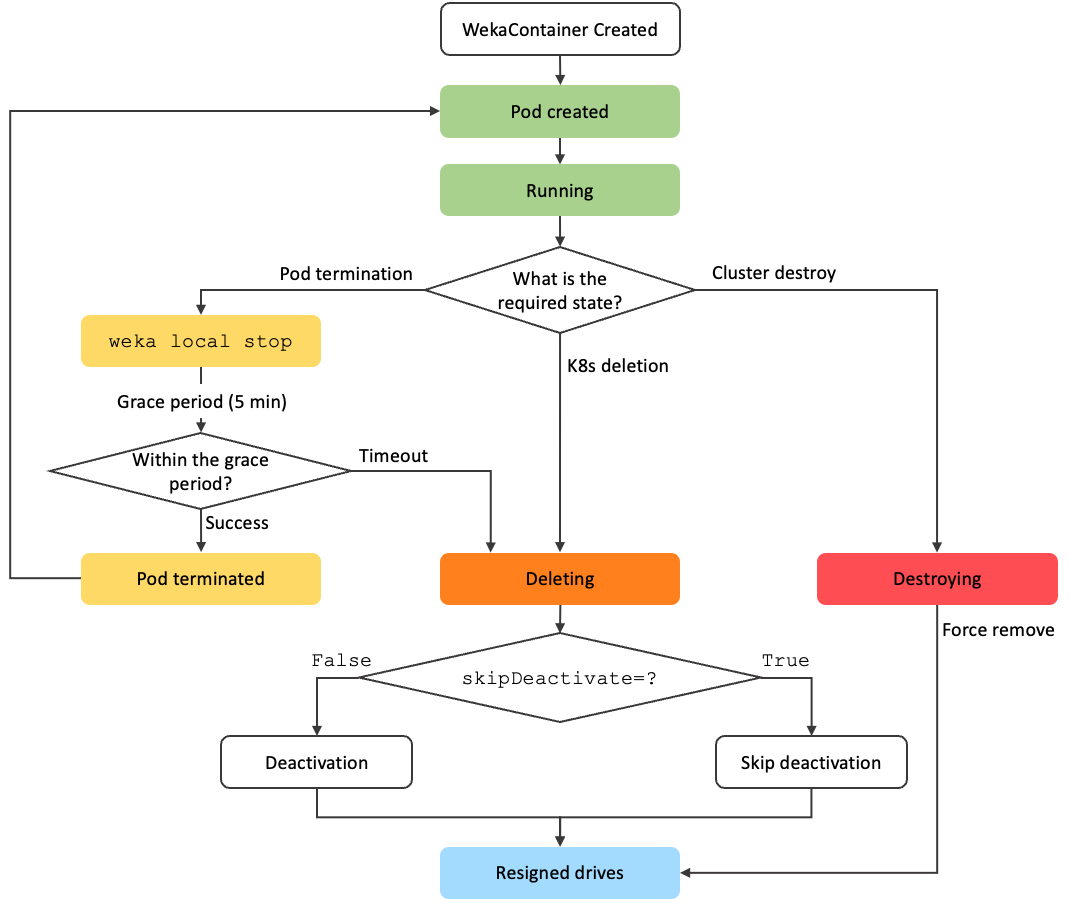

The diagram above provides a visual overview of the WekaContainer's lifecycle in Kubernetes, illustrating the flow from creation through running states and the various paths taken during deletion. The subsequent sections elaborate on the specific states, processes, and decision points shown.

Key deletion states

The deletion process involves two primary states the container can enter:

Deleting: This state signifies a graceful shutdown process triggered by standard Kubernetes deletion or pod deletion timeouts. It involves the controlled deactivation sequence shown in the diagram before the container is removed.

Destroying: This state represents a forced, immediate removal that bypasses the deactivation steps. As the diagram shows, it is typically triggered by a cluster destroy event.

Deletion triggers and paths

The specific path taken upon deletion depends on the trigger:

Kubernetes resource deletion: When a user deletes the WekaContainer custom resource directly (for example, kubectl delete wekacontainer ...), Kubernetes initiates the process leading to the Deleting state, starting the graceful deactivation cycle.

Pod termination (user-initiated or node drain): As shown in the Pod termination path, the Pod hosting the WekaContainer can be terminated while the WekaContainer custom resource (CR) still exists (for example, due to node failure, eviction, or a direct kubectl delete pod). In that case:

Kubernetes first attempts to gracefully stop the WEKA process within that pod using weka local stop, allowing a 5-minute grace period.

If the stop succeeds, the process stops cleanly. If weka local stop times out or fails, the WEKA container instance tied to the terminating pod may transition to the Deleting state (as per the diagram) to ensure proper deactivation and removal from the WEKA cluster's perspective, which leads to data loss for that instance.

Important: Because the WekaContainer CR itself has not been deleted and still defines the desired state, the WEKA Operator detects that the required pod is missing. Consequently, the Operator automatically attempts to create a new pod to replace the terminated one, aiming to bring the system back to the Running state defined by the CR. This new pod starts fresh.

Cluster destruction: A cluster destroy operation does not immediately transition containers to the Destroying state. WekaCluster uses a graceful termination period set by spec.gracefulDestroyDuration, which defaults to 24 hours. When the WekaCluster custom resource is deleted, WekaContainers first enter a Paused state (their pods are terminated), but the containers and their data remain intact. After the graceful period ends, containers transition to the Destroying state for forced removal, bypassing any graceful shutdown attempts.
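The following read-only sketch checks the graceful destroy window and lists containers so that you can watch for the Paused state. The field path spec.gracefulDestroyDuration comes from the text above. Addressing the resources as wekacluster and wekacontainer is an assumption, and the helper names are hypothetical. An empty result from the first function suggests that the field is not set on the object.

```shell
# Print spec.gracefulDestroyDuration of a WekaCluster ($1 = name, $2 = namespace).
graceful_destroy_duration() {
  "${KUBECTL:-kubectl}" get wekacluster "$1" -n "$2" \
    -o 'jsonpath={.spec.gracefulDestroyDuration}'
}

# List WekaContainers in a namespace ($1) to observe their current state.
list_wekacontainers() {
  "${KUBECTL:-kubectl}" get wekacontainer -n "$1"
}
```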

The deactivation process (graceful deletion)

When a WekaContainer enters the Deleting state, it undergoes the multi-step deactivation process shown in the diagram before its drives are resigned. This sequence ensures safe removal from the WEKA cluster and includes:

Cluster deactivation.

Removal from the S3 cluster (if applicable).

Removal from the main WEKA cluster.

Skipping deactivation: By setting overrides.skipDeactivate=true, you can bypass the deactivation steps and route the flow directly to Resigned drives. However, this is considered unsafe.

Drive management

Regardless of whether the path taken was Deleting (with or without deactivation) or Destroying, the process ends with the storage drives being resigned. This makes them available for reuse.
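The deletion paths described in this section can be summarized as a small lookup. This is a toy model of the documented behavior, intended for reasoning about outcomes. It is not the operator's implementation, and the trigger names cr-delete, pod-terminate, and cluster-destroy are made up for this sketch.

```shell
# Toy model of the documented deletion paths; not the operator's code.
# Path taken once a container is in the Deleting state.
#   $1 = "true" if overrides.skipDeactivate=true is set
deleting_path() {
  if [ "${1:-false}" = true ]; then
    echo "Deleting -> Resigned drives"
  else
    echo "Deleting -> Deactivation -> Resigned drives"
  fi
}

#   $1 = trigger: cr-delete | pod-terminate | cluster-destroy (made-up names)
#   $2 = "true" if overrides.skipDeactivate=true is set (default: false)
deletion_path() {
  local skip=${2:-false}
  case $1 in
    cr-delete)
      deleting_path "$skip" ;;
    pod-terminate)
      # The CR still exists, so the operator then recreates the pod.
      echo "weka local stop (5 min grace) -> clean stop, or on timeout/failure: $(deleting_path "$skip")" ;;
    cluster-destroy)
      # Destroying bypasses deactivation regardless of skipDeactivate.
      echo "Paused -> (after gracefulDestroyDuration) Destroying -> Resigned drives" ;;
    *)
      echo "unknown trigger: $1" >&2
      return 2 ;;
  esac
}
```

Every path in the model ends at Resigned drives, which matches the drive management behavior above.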

Health state and replacement

In this flow, WekaContainers in the Deleting or Destroying states are deemed unhealthy. This informs Kubernetes and the WEKA Operator that the container is non-functional, which typically prompts replacement attempts based on the deployment configuration. However, the data from the deleted container is permanently lost.
