WEKA and Slurm integration

Explore the architecture and configuration of an HPC cluster using the Slurm workload manager for job scheduling and WEKA as the high-performance data platform.

Overview

Traditional high-performance computing (HPC) clusters consist of login nodes, controllers, compute nodes, and file servers.

The login nodes are the primary point through which users access the cluster.

Controllers host the job scheduler or workload manager for the cluster.

Compute nodes are used for the primary execution of user jobs.

File servers typically host home, group, and scratch directories to ensure user files are accessible across the cluster’s login and compute nodes.

For customers using the WEKA Data Platform, a high-performance HPC solution that optimizes IO regardless of the data profile, managing network-attached storage with WEKA becomes practical. This simplifies filesystem management and ensures consistent performance regardless of the location where HPC applications run.

In this integration guide, explore the architecture and configuration of an HPC cluster using the Slurm workload manager for job scheduling and WEKA as the high-performance data platform. WEKA supports multi-protocol IO, enabling simultaneous data access through POSIX, NFS, SMB, S3, GPUDirect Storage, and Kubernetes CSI.

The guide begins by exploring two architecture designs for deploying WEKA with Slurm and introduces two mount-type options adaptable to either architecture. Subsequently, it delves into the resource requirements for WEKA, guiding the configuration of Slurm to isolate specialized cores and memory from user applications and reserve them for WEKA usage.

This integration guide is intended for system administrators and engineers familiar with setting up, configuring, and managing an HPC cluster equipped with the Slurm workload manager and job scheduler on bare-metal or cloud-native systems.

Architecture

WEKA

The servers in a WEKA system are members of a cluster. A server includes multiple containers running software instances called processes that communicate with each other to provide storage services in the cluster.

The processes are dedicated to managing different functions as follows:

Drive processes for SSD drives and IO to drives.

Compute processes for filesystems, cluster-level functions, and IO from clients.

Frontend processes for POSIX client access and sending IO to the compute and drive processes.

A management process for managing the overall cluster.

For more details, see Cluster architecture overview.

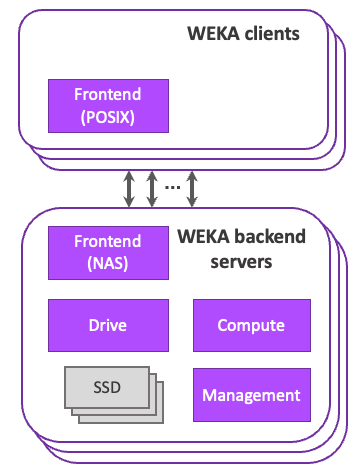

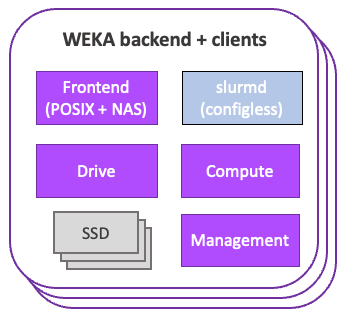

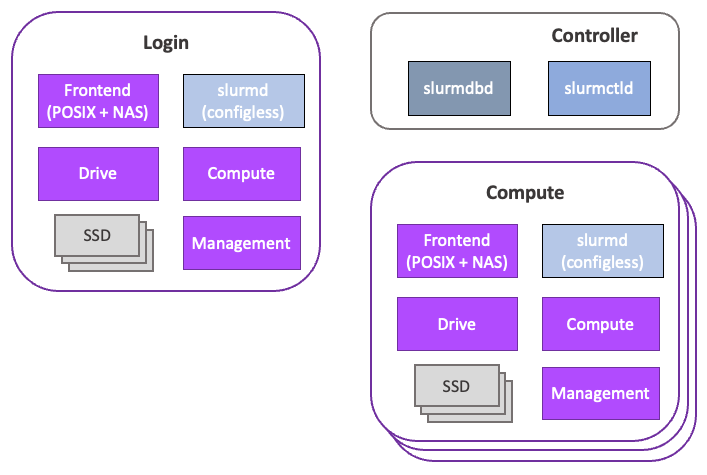

WEKA can be configured to run with dedicated backend servers and independent clients (Figure 1) or in a converged cluster, where each participating server acts as both client and backend server (Figure 2).

Dedicated backend configuration

In a dedicated backend configuration, user applications run on the WEKA clients, which interact with the operating system’s Virtual File System (VFS) layer.

The VFS uses the WEKA POSIX driver to issue requests to the WEKA client on the host. The client communicates with the WEKA backend servers. The WEKA server Frontend, Compute, and Drive processes move data between the servers and clients in parallel.

Converged configuration

In a converged configuration, each server participating in the cluster hosts user applications alongside the frontend (POSIX + NAS; client and server), drive, compute, and management processes.

WEKA processes are allocated to designated cores on each server in the WEKA cluster through control groups. This demands careful consideration to guarantee sufficient CPUs and memory for both WEKA and user applications. For an in-depth understanding of WEKA architecture, see the WEKA Architecture Technical Brief.

WEKA client mount modes

WEKA clients can be configured to mount in DPDK or UDP mode.

DPDK mode is optimized for single-process performance and should be used whenever possible. In DPDK mode, the client host system must meet specific requirements.

The Frontend process on clients uses CPU cores and memory while the mount is active. This implies that sufficient compute cores and memory resources must be available to run the WEKA Frontend process and other user applications. Additionally, NIC hardware must have a Poll Mode Driver (PMD) and be supported by WEKA. See Prerequisites and compatibility for more information on supported NIC hardware for bare-metal and cloud-native systems.

UDP mode is an option for limited-throughput WekaFS filesystem access when DPDK mode is not feasible due to network, hardware, or operating system limitations. It can serve as an alternative when necessary.

In UDP mode, the Frontend process on clients uses CPUs and memory only during IO activity. This proves advantageous for compute or memory-bound applications with sporadic file read or write operations, as seen in computational fluid dynamics.

For IO-bound applications like those in bioinformatics and AI/ML, WEKA recommends employing the DPDK mount mode.

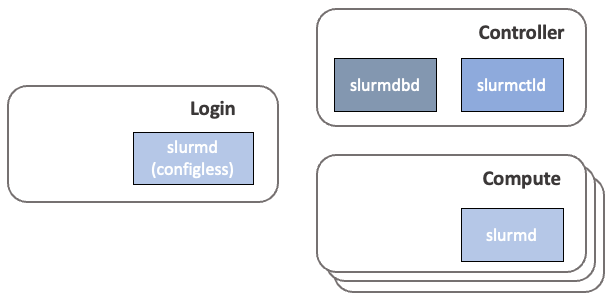

Slurm

Slurm is a robust open-source cluster management and job scheduling system tailored for Linux clusters of all sizes. Slurm delegates access to resources, provides a framework for executing and monitoring computational workloads, and manages a queue of pending work submitted by system users. Slurm manages these responsibilities through three daemons:

Slurm controller daemon (slurmctld)

Slurm database daemon (slurmdbd)

Slurm daemon (slurmd)

Typically, a Slurm cluster consists of one or more controller hosts that run the Slurm controller and Slurm database daemons, a set of compute nodes where users run their workloads, and one or more login nodes used to access the cluster.

Slurm cluster operation

The Slurm controller daemon (slurmctld) is a centralized workload manager that monitors available resources and active workloads. Based on resource availability and user requests, slurmctld determines where to run user workloads.

The Slurm database daemon (slurmdbd) is an optional but recommended service to deploy in Slurm clusters. The Slurm database stores job history, which provides visibility into cluster usage and can help in debugging issues with user workloads or cluster resources. The slurmctld and slurmdbd services are often deployed on the same controller host.

The slurmd service runs on compute nodes, where user workloads are executed. It functions similarly to a remote shell, receiving work requests from the slurmctld, performing the tasks, and reporting back the task status.

Users typically access HPC clusters through one or more login nodes. The login nodes allow users to access shared files across the compute nodes and schedule workloads using Slurm command-line tools such as sbatch, salloc, and srun. Login nodes are also often sufficient for lightweight text editing and code compilation and are sometimes used to transfer data between local workstations and the HPC cluster.

In some systems, like large academic research-oriented HPC data centers, dedicated “data mover” nodes are often available specifically for file transfers.

Slurm leverages control groups

Integral to Slurm's resource management and job handling is its ability to leverage control groups (cgroups) through the proctrack/cgroup plugin. Provided by the Linux kernel, cgroups allow processes to be organized hierarchically and enable the distribution of system resources in a controlled fashion along this hierarchy.

Slurm leverages cgroups to manage and constrain resources for jobs, job steps, and tasks. For instance, Slurm uses the cpuset and memory controllers to ensure that a job uses only the CPU and memory resources allocated to it.

The proctrack/cgroup process tracker uses the freezer controller to keep track of all the process IDs associated with a job in a specific hierarchy in the cgroup tree, which can then be used to signal these processes when instructed (for example, when a user cancels a job).

WEKA and Slurm integration

Having covered the fundamentals of WEKA and Slurm architectures, we can now explore integrating these two systems to establish an HPC cluster. This cluster uses the Slurm workload manager for job scheduling and leverages WEKA as the high-performance data platform. Both the dedicated backend and converged configurations are considered.

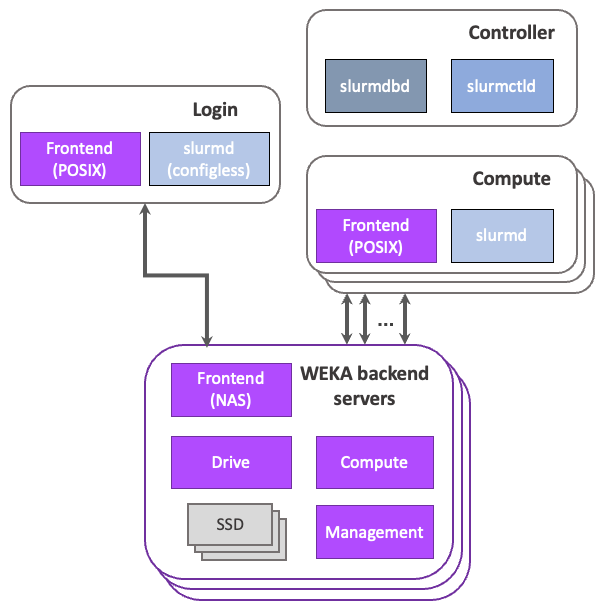

In either scenario, the Slurm login and compute nodes function as WEKA clients. The controller does not participate in the WEKA filesystem, serving neither as a backend server nor a client.

WEKA and Slurm integration in dedicated backend architecture

In the dedicated backend architecture, the WEKA filesystem is mounted on the login and compute nodes, which requires a WEKA frontend process on each login and compute node for file access (Figure 4).

Dedicated backend servers are provisioned with the compute, network, and storage resources needed to run the WEKA data platform.

The login and compute nodes from the Slurm cluster mount WEKA filesystems and participate in the WEKA cluster as clients. When mounting in UDP or DPDK mode, some memory must be reserved for WEKA. To determine the amount of memory appropriate for your setup, see the Plan the WEKA system hardware requirements topic.

In UDP mount mode, the WEKA Frontend processes can run on any available core on the login and compute nodes (WEKA clients).

In DPDK mode, at least one physical CPU core must be reserved for the WEKA Frontend process. Use one of the following options:

Specify a dedicated core in the mount options:

mount -o core=X …

Where X is the specific core ID to reserve.

Use non-dedicated cores:

mount -o num_cores=1 …

Mounting with a dedicated core is recommended because it provides better file IO performance than non-dedicated cores.

WEKA and Slurm integration in converged architecture

In the converged architecture, the WEKA filesystem is mounted on login and compute nodes. The login and compute nodes also run the drive and compute processes to participate in hosting the WEKA backend (Figure 5).

The controller hosts the Slurm job scheduler, while the login and compute nodes all host data as part of the WEKA data platform.

Relative to the dedicated backend architecture, the converged architecture requires additional compute and memory resources on the login and compute nodes to support the drive, compute, and management processes. In converged deployments, the WEKA processes are typically allocated to specific cores using cgroups.

What's next?

Now that the possible architectures for an integrated Slurm and WEKA deployment are clear, the next step is configuring Slurm and WEKA. Allocating sufficient compute and memory resources specifically for WEKA is crucial.

WEKA processes are allocated to specific cores when using the DPDK mount mode or when deploying converged clusters (with UDP or DPDK mount modes). Slurm must be configured to account for this. Designating specialized cores in Slurm prevents user workloads and Slurm services from competing with WEKA processes for the same resources.

Moreover, because Slurm is typically configured to use control groups to confine user workloads to specific cores, WEKA must retain the existing cpusets when the WEKA agent process starts.

The following sections detail the required Slurm configurations for dedicated and converged backend setups, considering UDP and DPDK mount modes.

Implementation

When using a job scheduler such as Slurm with WEKA, it is essential to ensure WEKA is allocated (bound/pinned) to specific cores and ensure the job scheduler does not allocate work to the cores used by WEKA on the WEKA clients.

To prevent user jobs from running on the same cores as the WEKA agent, Slurm must be configured so that the user jobs, Slurm daemon (slurmd), and Slurm step daemon (slurmstepd) only run on specific cores.

Additionally, the available memory for jobs on each compute node must be reduced from the total available to provide sufficient memory for the server operating system and WEKA client processes.

The following sections describe the installation and relevant configurations for Slurm. The focus then shifts to the configurations needed to pin WEKA processes to specific cores. Finally, examples of WEKA and Slurm configurations cover both dedicated backend and converged cluster architectures.

Prerequisite: Install and configure Slurm

While presuming your familiarity with Slurm installation and configuration, this section provides an overview of Slurm’s installation. It also highlights critical elements pertinent to the discussion of integrating Slurm with WEKA.

The guidance on installing and configuring Slurm, a prerequisite for integrating it with WEKA, is provided for convenience. For the most up-to-date instructions, it is recommended that you refer to the Slurm documentation.

1. Install the munge package on all instances participating in the Slurm cluster

Slurm depends on munge for authentication between Slurm services. When installing munge from a package manager, the necessary systemd service files are installed, and the munge service is enabled.

By default, this service looks for a munge key under /etc/munge/munge.key.

This key must be consistent between the controller, login, and compute nodes. You can create a munge key using the following command:
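One way to generate such a key, shown here as a minimal sketch rather than the only supported method, is to fill the key file with random bytes using dd. The KEY_FILE variable is a name chosen for this example; it defaults to a scratch file so the snippet can be tried safely. On a real controller it would point at /etc/munge/munge.key, and the file would need to be owned by the munge user.

```shell
# Sketch: create a munge key from random bytes.
# KEY_FILE is a hypothetical variable for this example. It defaults to a
# scratch file; on a real controller use /etc/munge/munge.key (as root).
KEY_FILE="${KEY_FILE:-$(mktemp)}"

# Write 1024 random bytes to the key file.
dd if=/dev/urandom of="$KEY_FILE" bs=1 count=1024 2>/dev/null

# The key should be readable only by its owner.
chmod 400 "$KEY_FILE"
```

Some distributions also ship a helper for this step with the munge package; check your package documentation before choosing an approach.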

Typically, you create this key on one of the hosts (for example, the controller) and copy it to all the other instances in the cluster. Alternatively, you can mount (using NFS) /etc/munge from the controller to the login and compute nodes.

Note: To function correctly, the munge authentication requires consistency between the clocks on all instances in the Slurm cluster.

2. Install Slurm

You can install Slurm using your package manager on RHEL and Debian-based Linux operating systems.

Ensuring that the necessary packages are installed on both the controller and login instances is essential. The following are specific package requirements:

The controller instance requires packages that provide slurmctld and slurmdbd. (On Debian-based operating systems, these packages are slurmctld and slurmdbd.)

The login instance requires the Slurm client commands, such as sinfo and squeue. (On Debian-based operating systems, these are provided by the slurm-client package.)

Consider the following guidelines:

If you plan to use a configless deployment, ensure the slurmd package is installed so that the slurmd service runs on the login node. Installing Slurm packages from a package manager creates the Slurm user and installs the necessary systemd service files.

If you plan to use slurmdbd (recommended), a SQL database hosting the Slurm database is required. Typically, MariaDB is used. Slurmdbd is generally deployed on the Slurm controller host. You can host the MariaDB server on the Slurm controller host or a separate host accessible to the slurmdbd service.

If you require a specific version of Slurm or integrations with particular flavors of the Message Passing Interface (MPI), it is best to build Slurm from the source. In this approach, install munge and a service like MariaDB. Additionally, create the Slurm user on each instance in your cluster and set up systemd service files.

The uid for the Slurm user must be consistent across all instances in your Slurm cluster.

When deploying Slurm clusters, a standard set of configuration files is required to determine the scheduler behavior, accounting behavior, and available resources. There are four commonly used configuration files in Slurm clusters:

slurm.conf describes general Slurm configuration information, the nodes to be managed, information about how those nodes are grouped into partitions, and various scheduling parameters associated with those partitions. This file needs to be consistent across all hosts in the Slurm cluster.

slurmdbd.conf describes the configuration for the Slurm database daemon, including the database host address, username, and password. This file should be protected as it contains sensitive information (database password) and must only exist on the host running the slurmdbd service.

cgroup.conf describes the configurations for Slurm’s Linux cgroup plugin. This file needs to be consistent across all hosts in the Slurm cluster.

gres.conf describes the Generic RESource(s) (GRES) configuration on each compute node, such as GPUs. This file needs to be consistent across all hosts in the Slurm cluster.

Most configuration files must be consistent across all hosts (Controller, Login, and Compute) participating in the Slurm cluster. The following three commonly employed strategies ensure consistency of the Slurm configuration across all hosts:

Synchronize the Slurm configuration files across your cluster using tools such as parallel-scp.

Host your Slurm configuration files on the Controller and NFS mount the directory containing these files on the Login and Compute nodes.

Deploy a "Configless" Slurm where the slurmd service obtains the Slurm configuration from the slurmctld server on startup. The configless option is only available for Slurm 20.02 and later.

The first strategy above requires copying the Slurm configuration files across all cluster nodes when configuration changes are needed.

The second and third strategies above require maintaining the configuration files only on the Slurm controller.

The main difference between the configless deployment and the NFS mount configuration is that the login node in the configless setup also needs to run the slurmd service.

We recommend using a configless Slurm deployment, when possible, for ease of use. This setup installs a single set of Slurm configuration files on the Slurm controller instance.

The compute and login nodes obtain their configuration through communications between the slurmd service, hosted on the compute and login nodes, and the slurmctld service, hosted on the Slurm controller.

To enable a configless Slurm deployment, set SlurmctldParameters=enable_configless in the slurm.conf file.

Additionally, start the slurmd services with the --conf-server host[:port] flag to obtain Slurm configurations from slurmctld at host[:port].
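The two configless pieces described above can be sketched as follows. The host name controller-0 and the variable names are assumptions made for this example; 6817 is the default slurmctld port. The slurm.conf line is written to a scratch file for review, and the slurmd command line is only printed, not run.

```shell
# Sketch of the two pieces of a configless setup.
# "controller-0" is a hypothetical controller host name.
CONTROLLER="${CONTROLLER:-controller-0}"
PORT="${PORT:-6817}"

# 1. Line to add to slurm.conf on the controller. It is written to a
#    scratch file here instead of editing the real file.
FRAGMENT="$(mktemp)"
cat > "$FRAGMENT" <<'EOF'
SlurmctldParameters=enable_configless
EOF
cat "$FRAGMENT"

# 2. Command line for slurmd on login and compute nodes. It is only
#    printed here; use it when starting slurmd on each node.
SLURMD_CMD="slurmd --conf-server ${CONTROLLER}:${PORT}"
echo "$SLURMD_CMD"
```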

In all strategies, the configuration files are stored in the same directory. A common choice is to use /usr/local/etc/slurm (this location can vary between systems).

For non-standard locations, the slurmctld and slurmd services can be launched with the -f flag to indicate the path to the slurm.conf file. Alternatively, if you build Slurm from the source, you can use the --sysconfdir=DIR option during the configuration stage of the build to set the default directory for the Slurm configuration files.

Configure Slurm and WEKA

The configuration of Slurm and WEKA includes settings for isolating CPU and memory resources dedicated to WEKA processes. This involves preventing conflicts between Slurm services (primarily slurmd) and user workloads attempting to use the same cores as the WEKA processes.

Additionally, it includes allocating exclusive cores and memory to avoid oversubscription on Slurm compute nodes.

1. Set the task/affinity and task/cgroup plugins to prevent user jobs from using the same cores as WEKA processes

Slurm offers task/affinity and task/cgroup plugins, which control compute resource exposure for user workloads. The task/affinity plugin binds processes to designated resources, while the task/cgroup plugin confines processes to specified resources using the cgroups cpuset interface.

To ensure that Slurm daemons (slurmd and slurmstepd) do not run on cores designated for the WEKA agent, it is advisable to set the TaskPluginParam to SlurmdOffSpec.

Set the following in the slurm.conf file:

Set the SelectType option to select/cons_tres to indicate that cores, memory, and GPUs are consumable by user jobs.

Set the SelectTypeParameters option to CR_Core_Memory to indicate that cores and memory are used explicitly for scheduling workloads.

Set the PrologFlags option to Contain to use cgroups to contain all user processes within their allocated resources.

The following code snippet summarizes the required settings for a slurm.conf file.
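As a minimal sketch, the settings named in this step could be collected as shown here. The ProctrackType line reflects the proctrack/cgroup plugin discussed earlier in this guide; the FRAGMENT variable and the scratch file are assumptions of this example, used so that nothing is written to a live slurm.conf.

```shell
# Sketch: slurm.conf settings named in this step, written to a scratch
# file for review. Merge them into the real slurm.conf by hand.
FRAGMENT="$(mktemp)"
cat > "$FRAGMENT" <<'EOF'
# Track job processes with cgroups
ProctrackType=proctrack/cgroup
# Bind and confine tasks; keep slurmd and slurmstepd off specialized cores
TaskPlugin=task/affinity,task/cgroup
TaskPluginParam=SlurmdOffSpec
# Treat cores and memory as consumable resources when scheduling
SelectType=select/cons_tres
SelectTypeParameters=CR_Core_Memory
# Contain user processes within their allocated resources
PrologFlags=Contain
EOF
cat "$FRAGMENT"
```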

2. Allocate cores and memory for the WEKA agent

Set each compute node definition in the slurm.conf file to allocate exclusive cores and memory for the WEKA agent. The cores and memory designated for WEKA (as well as other operating system processes) are termed Specialized Resources.

Set the following parameters in the slurm.conf file:

RealMemory: Specify the available memory on each compute node.

CPUSpecList: Define a list of virtual CPU IDs reserved for system use, including WEKA processes.

MemSpecLimit: When using SelectTypeParameters=CR_Core_Memory, specify the amount of memory (in MB) reserved for system use.

To use the CPUSpecList and MemSpecLimit parameters, ensure the following are set in the cgroup.conf file:
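A minimal sketch of the relevant cgroup.conf settings, assuming the standard Slurm options that enable core and memory confinement, might look like this. The FRAGMENT variable and scratch file are part of this example only.

```shell
# Sketch: cgroup.conf options that let Slurm enforce core and memory
# limits, written to a scratch file for review.
FRAGMENT="$(mktemp)"
cat > "$FRAGMENT" <<'EOF'
# Confine jobs to their allocated cores (needed for the CPU spec list)
ConstrainCores=yes
# Confine jobs to their allocated memory (needed for MemSpecLimit)
ConstrainRAMSpace=yes
EOF
cat "$FRAGMENT"
```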

Example: Slurm and WEKA dedicated backend architecture with DPDK mount mode

This example uses the a2-ultragpu-8g instances on Google Cloud Platform, which have 1360 GB (1360000 MB) of available memory, 48 physical cores on two sockets with two hyperthreads per core, and 8 A100 GPUs.

Following the Plan the WEKA system hardware requirements topic, suppose we want to set aside 5 GB of memory and the last core (Core ID 47) for the WEKA Frontend process in a dedicated backend architecture using a DPDK mount mode.

In this example, we set the RealMemory to the total memory available and then set the MemSpecLimit to 5000 (MB) to set aside that amount of memory for the WEKA agent and the operating system.

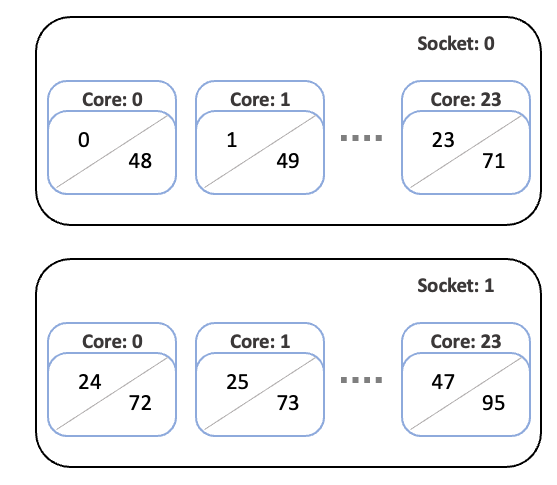

When using the select/cons_tres with CR_Core_Memory parameters in Slurm on systems with hyperthreading, the CPU IDs refer to the “processor ID” for the hyperthread, and not the physical core ID.

To determine the relationship between the processor ID and the core ID, we can use the /proc/cpuinfo file on Linux systems. This file lists properties for each processor, including its processor ID, associated core ID, and physical ID. The physical ID refers to the physical multi-core CPU chip that is plugged into a socket on the motherboard.

For example, one entry might look like the code snippet below (output is intentionally truncated). On this system, we see that core id 0 hosts processor 0, which is hosted on socket 0.
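To illustrate, the sketch below uses a hand-written, truncated entry in the style of /proc/cpuinfo (sample data for this example, not output captured from a real host) and a small helper, cpu_topology, that prints the processor, core, and socket for each entry. The helper name and the SAMPLE variable are assumptions of this example.

```shell
# Illustrative, truncated /proc/cpuinfo-style entry (sample data only).
SAMPLE="$(mktemp)"
cat > "$SAMPLE" <<'EOF'
processor   : 0
physical id : 0
core id     : 0
EOF

# Print one "processor=.. core=.. socket=.." line per logical CPU.
# Reads the file given as the first argument, or /proc/cpuinfo by default.
cpu_topology() {
    awk -F'[ \t]*:[ \t]*' '
        $1 == "processor"   { proc = $2 }
        $1 == "physical id" { sock = $2 }
        $1 == "core id"     { print "processor=" proc, "core=" $2, "socket=" sock }
    ' "${1:-/proc/cpuinfo}"
}

cpu_topology "$SAMPLE"   # prints: processor=0 core=0 socket=0
```

Running cpu_topology with no argument on a compute node lists the mapping for every processor, which is the information needed to build the specialized CPU list.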

Continuing through the /proc/cpuinfo file we find a relationship between the sockets, cores, and processors that is summarized in Figure 6. Namely, the processor IDs are ordered from 0-23 on Socket 0, then 24-47 on Socket 1. Then, the second hyperthreads are numbered from 48-71 on Socket 0 and 72-95 on Socket 1.

In this example, to reserve core 47, we see that this corresponds to vCPUs 47 and 95. In the slurm.conf file, we would then set CpuSpecList=47,95.
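The arithmetic behind this value can be sketched as follows, assuming the numbering layout described above, where the second hyperthread of each core is offset by the total number of physical cores. The variable names are chosen for this example.

```shell
# Sketch: derive the two processor IDs that share one physical core,
# assuming first hyperthreads are numbered 0..N-1 and second hyperthreads
# N..2N-1, where N is the number of physical cores on the node.
TOTAL_CORES=48      # physical cores on the node in this example
RESERVED_CORE=47    # core set aside for the WEKA Frontend process

SIBLING=$((RESERVED_CORE + TOTAL_CORES))
CPU_SPEC_LIST="${RESERVED_CORE},${SIBLING}"

echo "CpuSpecList=${CPU_SPEC_LIST}"   # prints: CpuSpecList=47,95
```

On hardware with a different processor numbering, verify the sibling IDs against /proc/cpuinfo instead of relying on this offset.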

An example of the node configuration in slurm.conf is shown below. This code snippet shows a compute node named “compute-node-0” with 96 CPUs (processors) with two sockets per board, 24 cores per socket, two threads per core, and 8 A100 GPUs.
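A node definition matching this description might look like the sketch below. The values come from the example in this section; the GRES type name a100 is an assumption and must match whatever is declared in your gres.conf. The line is written to a scratch file rather than to a live slurm.conf.

```shell
# Sketch: compute node definition with memory and CPUs set aside for WEKA.
# The Gres type name "a100" is hypothetical; match it to your gres.conf.
FRAGMENT="$(mktemp)"
cat > "$FRAGMENT" <<'EOF'
NodeName=compute-node-0 CPUs=96 Boards=1 SocketsPerBoard=2 CoresPerSocket=24 ThreadsPerCore=2 RealMemory=1360000 MemSpecLimit=5000 CpuSpecList=47,95 Gres=gpu:a100:8
EOF
cat "$FRAGMENT"
```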

If you are always reserving the last cores for WEKA, an alternative approach is to use the CoreSpecCount parameter in the compute node configuration in slurm.conf to specify the number of physical cores for resource specialization.

When using the CoreSpecCount parameter, the first core selected is the highest numbered core on the highest numbered socket by default (see core selection in Slurm documentation). Subsequent cores selected are the highest numbered core on lower numbered sockets. In this case, use the snippet below to reserve core 47 (processors 47 and 95) for WEKA.
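As a sketch of this alternative, the node definition would carry CoreSpecCount=1 in place of an explicit CPU list. In Slurm, CoreSpecCount and CpuSpecList are mutually exclusive, so only one of them should appear. The GRES type name a100 is an assumption of this example.

```shell
# Sketch: reserve one physical core by count instead of by CPU ID list.
# The Gres type name "a100" is hypothetical; match it to your gres.conf.
FRAGMENT="$(mktemp)"
cat > "$FRAGMENT" <<'EOF'
NodeName=compute-node-0 CPUs=96 Boards=1 SocketsPerBoard=2 CoresPerSocket=24 ThreadsPerCore=2 RealMemory=1360000 MemSpecLimit=5000 CoreSpecCount=1 Gres=gpu:a100:8
EOF
cat "$FRAGMENT"
```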

To apply these changes on an existing Slurm cluster, restart the Slurm controller daemon after updating your slurm.conf and cgroup.conf files. The Slurm services are managed by systemd, allowing you to restart them with systemctl.

Additionally, restart the Slurm daemon on every compute node by running the command shown below on each one. As a user with administrative privileges, you can do this with pdsh, xargs, or a Slurm job. The latter option may be necessary if your cluster is configured using the pam_slurm_adopt plugin, where ssh access to compute nodes is limited to users with a job allocation.
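The restart steps can be sketched as follows, assuming the systemd unit names slurmctld and slurmd installed by typical Slurm packages. The run helper and the node range compute-node-[0-3] are hypothetical and exist only in this example; run prints each command unless DRY_RUN=0 is set, so the snippet is safe to try anywhere.

```shell
# Dry-run helper for this sketch: prints the command unless DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# On the controller host: restart the Slurm controller daemon.
run sudo systemctl restart slurmctld

# On each compute node: restart the Slurm daemon.
run sudo systemctl restart slurmd

# From an admin host with pdsh: restart slurmd on several nodes at once.
# The node range is a hypothetical example.
run pdsh -w 'compute-node-[0-3]' sudo systemctl restart slurmd
```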

Continuing with this example of Slurm and WEKA in a dedicated backend architecture with DPDK mount mode, we turn to the necessary WEKA configurations. To reserve core 47 for the WEKA Frontend process on a compute node using DPDK mount mode, you can use the core mount option as follows:
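A sketch of such a mount command, assembled from the values used in this example, is shown here. The variable names are chosen for illustration, and the command is only printed so it can be reviewed before being run as root on a compute node.

```shell
# Sketch: build the WEKA mount command for DPDK mode with a dedicated core.
NET_DEVICE="ib0"            # NIC used for DPDK
BACKEND="backend-host-0"    # WEKA backend host
FILESYSTEM="fs1"            # WEKA filesystem name
MOUNT_POINT="/mnt/weka"     # mount location on the compute node
CORE_ID=47                  # core reserved for the WEKA Frontend process

MOUNT_CMD="mount -t wekafs -o net=${NET_DEVICE} -o core=${CORE_ID} ${BACKEND}/${FILESYSTEM} ${MOUNT_POINT}"
echo "$MOUNT_CMD"
```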

In this example, the NIC used for DPDK is ib0, the WEKA backend host can be accessed at backend-host-0, the filesystem name is fs1, and the mount location on the compute node is /mnt/weka.

By default, WEKA resets cpusets, which can interfere with configurations enforced by Slurm. To prevent this, set the isolate_cpusets=false option in /etc/wekaio/service.conf and restart the weka-agent service as follows:
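One way to make this change is sketched below. It assumes the option already appears in the file as a line of the form isolate_cpusets=value; the helper name set_isolate_cpusets_false and the scratch file are part of this example only. The helper is demonstrated on sample content rather than on a real service.conf.

```shell
# Set isolate_cpusets=false in a WEKA agent configuration file.
# Usage: set_isolate_cpusets_false /path/to/service.conf
# If the file has no isolate_cpusets line, it is left unchanged.
set_isolate_cpusets_false() {
    conf="$1"
    tmp="$(mktemp)"
    sed 's/^\([[:space:]]*isolate_cpusets[[:space:]]*=[[:space:]]*\).*/\1false/' "$conf" > "$tmp" &&
        cat "$tmp" > "$conf"    # overwrite in place, keeping owner and mode
    rm -f "$tmp"
}

# Try it on a scratch file with illustrative content.
SAMPLE_CONF="$(mktemp)"
printf 'isolate_cpusets=true\n' > "$SAMPLE_CONF"
set_isolate_cpusets_false "$SAMPLE_CONF"
cat "$SAMPLE_CONF"

# On a real client (as root), apply it to /etc/wekaio/service.conf, then:
#   systemctl restart weka-agent
```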

For more information, see Add clients to a bare-metal cluster.
