Detailed deployment tutorial: WEKA on AWS using Terraform

Introduction

Deploying WEKA in AWS requires knowledge of several technologies, including AWS, Terraform, basic Linux operations, and the WEKA software. Because not everyone responsible for this deployment is an expert in each of these areas, this document provides comprehensive, end-to-end instructions so that readers with minimal prior knowledge can deploy a functional WEKA cluster on AWS.

Document scope

This document provides a guide for deploying WEKA in an AWS environment using Terraform. It is applicable for both POC and production setups. While no pre-existing AWS elements are required beyond an appropriate user account, the guide includes examples with some pre-created resources.

This document guides you through:

General AWS requirements.

Networking requirements to support WEKA.

Deployment of WEKA using Terraform.

Verification of a successful WEKA deployment.


Terraform preparation and installation

HashiCorp Terraform is a tool that enables you to define, provision, and manage infrastructure as code. It simplifies infrastructure setup by using a configuration file instead of manual processes.

You describe your desired infrastructure in a configuration file using HashiCorp Configuration Language (HCL) or optionally JSON. Terraform then automates the creation, modification, or deletion of resources.

This automation ensures that your infrastructure is consistently and predictably deployed, aligning with the specifications in your configuration file. Terraform helps maintain a reliable and repeatable infrastructure environment.

Organizations worldwide use Terraform to deploy stateful infrastructure both on-premises and across public clouds like AWS, Azure, and Google Cloud Platform.

You can also deploy WEKA in AWS using AWS CloudFormation, so you can choose your preferred deployment method.

To install Terraform, we recommend following the official installation guides provided by HashiCorp.
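
After installation, a quick check along the following lines can confirm that the terraform binary is available on the PATH. This is a minimal sketch: the function name check_terraform is hypothetical and used only for illustration.

```shell
# Hypothetical helper: report whether the terraform CLI is installed.
check_terraform() {
  if command -v terraform >/dev/null 2>&1; then
    # Prints the installed Terraform version.
    terraform version
  else
    echo "terraform not found on PATH; see the HashiCorp installation guides" >&2
    return 1
  fi
}
```

Call check_terraform from the shell you plan to use for the deployment, so the same PATH applies.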

Locate the AWS Account

Access the AWS Management Console.

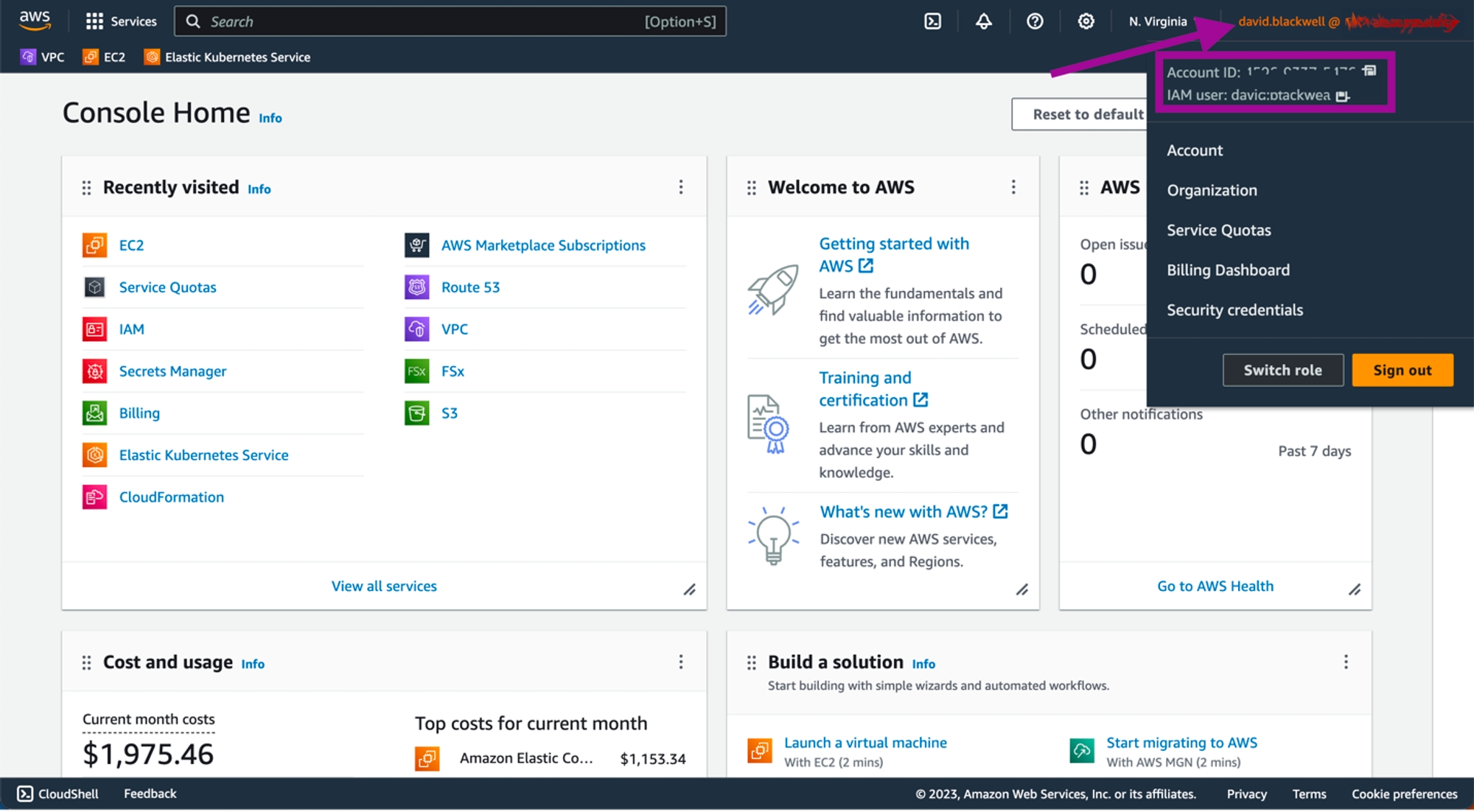

In the top-right corner, search for Account ID.

If deploying into a WEKA customer environment, ensure the customer understands their subscription structure.

If deploying internally at WEKA and you don't see the Account ID or haven't been added to the correct account, contact the appropriate cloud team for assistance.
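
If the AWS CLI is already installed and authenticated (an assumption at this point in the guide), the Account ID can also be read from the command line. The function name show_account_id below is hypothetical; the underlying aws sts get-caller-identity call is a standard AWS CLI command.

```shell
# Hypothetical helper: print the account ID of the identity that the
# AWS CLI is currently authenticated as.
show_account_id() {
  aws sts get-caller-identity --query Account --output text
}
```

Comparing this value with the Account ID shown in the console is a simple way to confirm that the CLI targets the intended account.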

Confirm user account permissions

To ensure a successful WEKA deployment in AWS using Terraform, verify that the AWS IAM user has the required permissions listed in Appendix B: Terraform’s required permissions examples. The user must have permissions to create, modify, and delete AWS resources as specified by the Terraform configuration files.

If the IAM user lacks these permissions, update their permissions or create a new IAM user with the necessary permissions.

Procedure

Access the AWS Management Console: Log in using the account intended for the WEKA deployment.

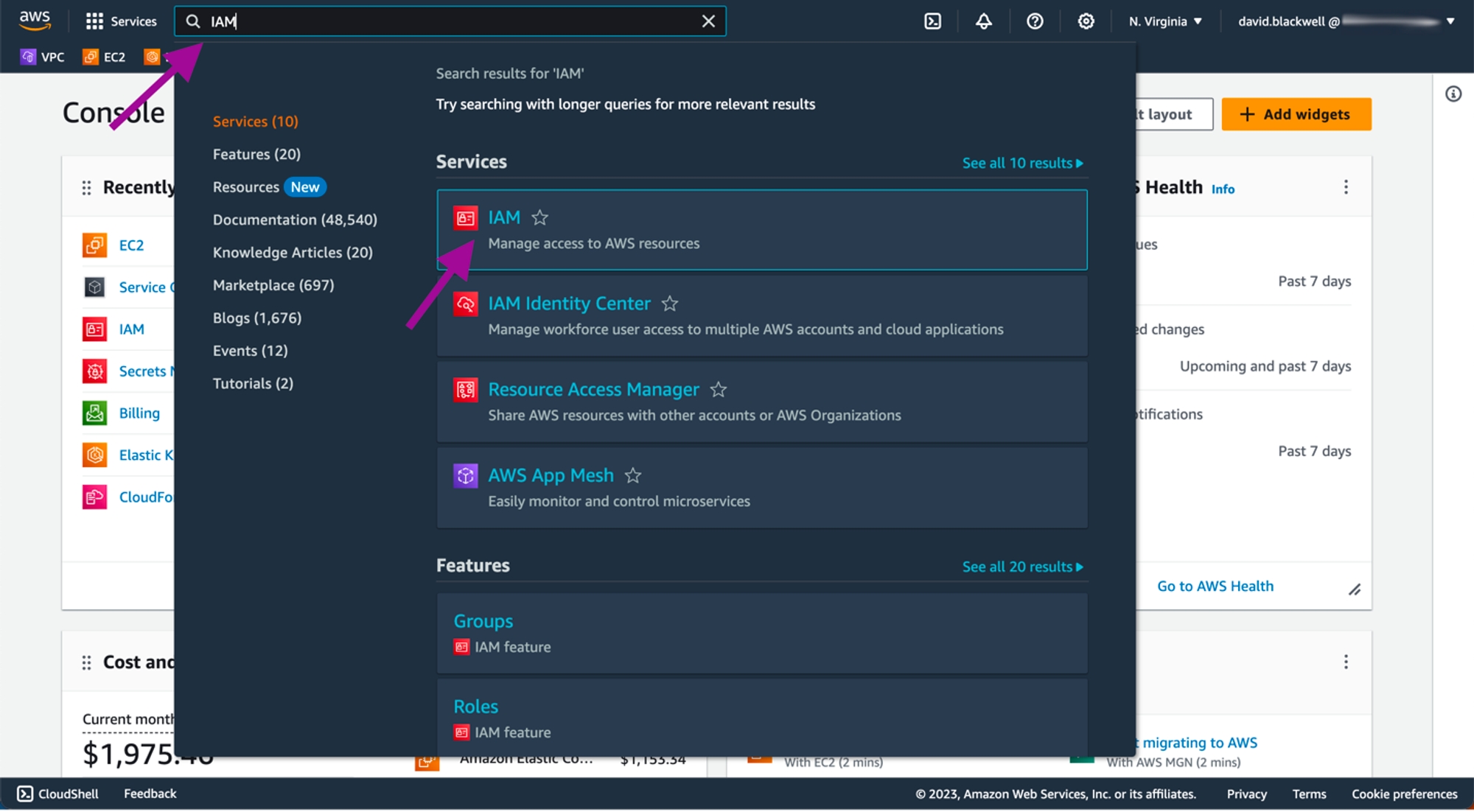

Navigate to the IAM dashboard: From the Services menu, select IAM to open the Identity and Access Management dashboard.

Locate the IAM user: Search for the IAM user or go to the Users section.

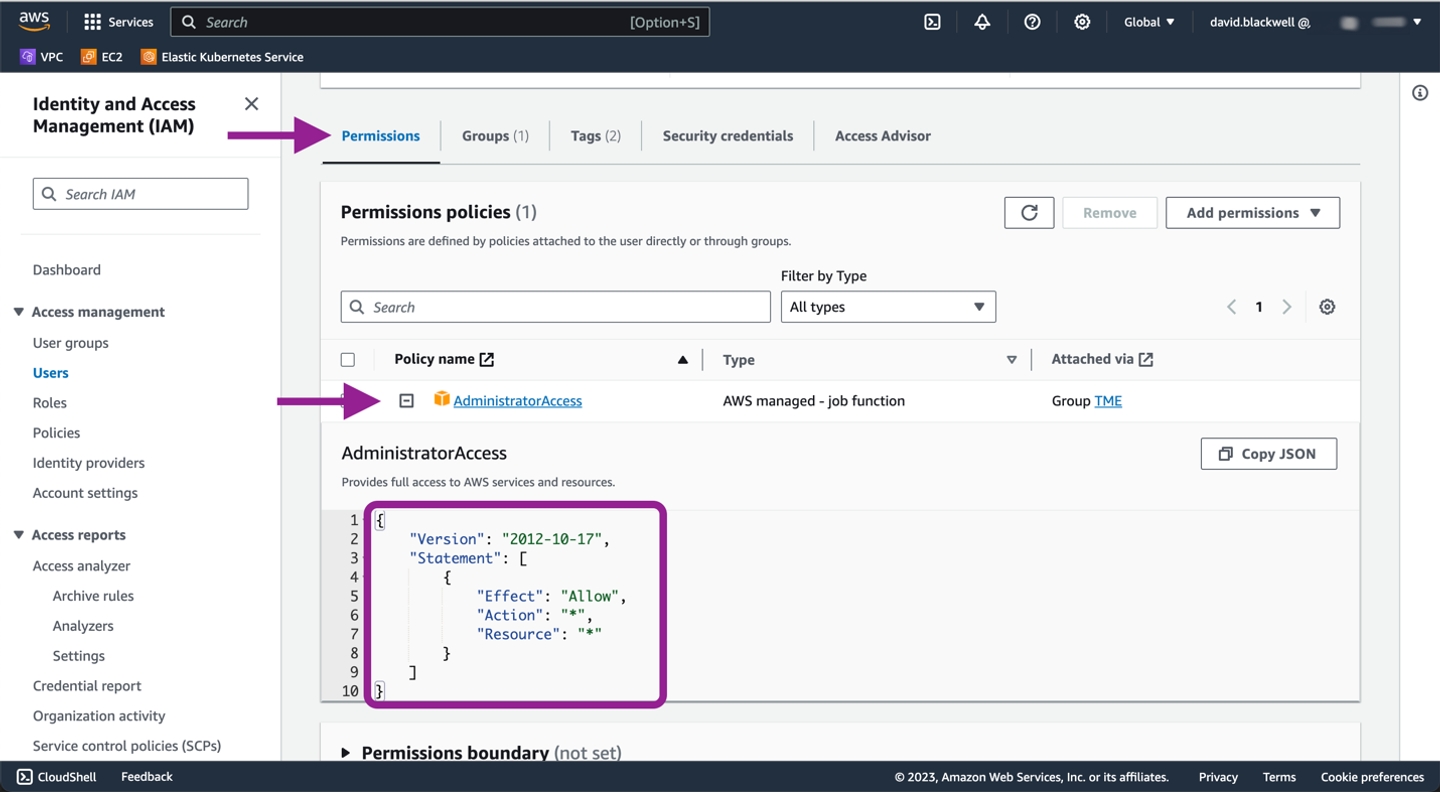

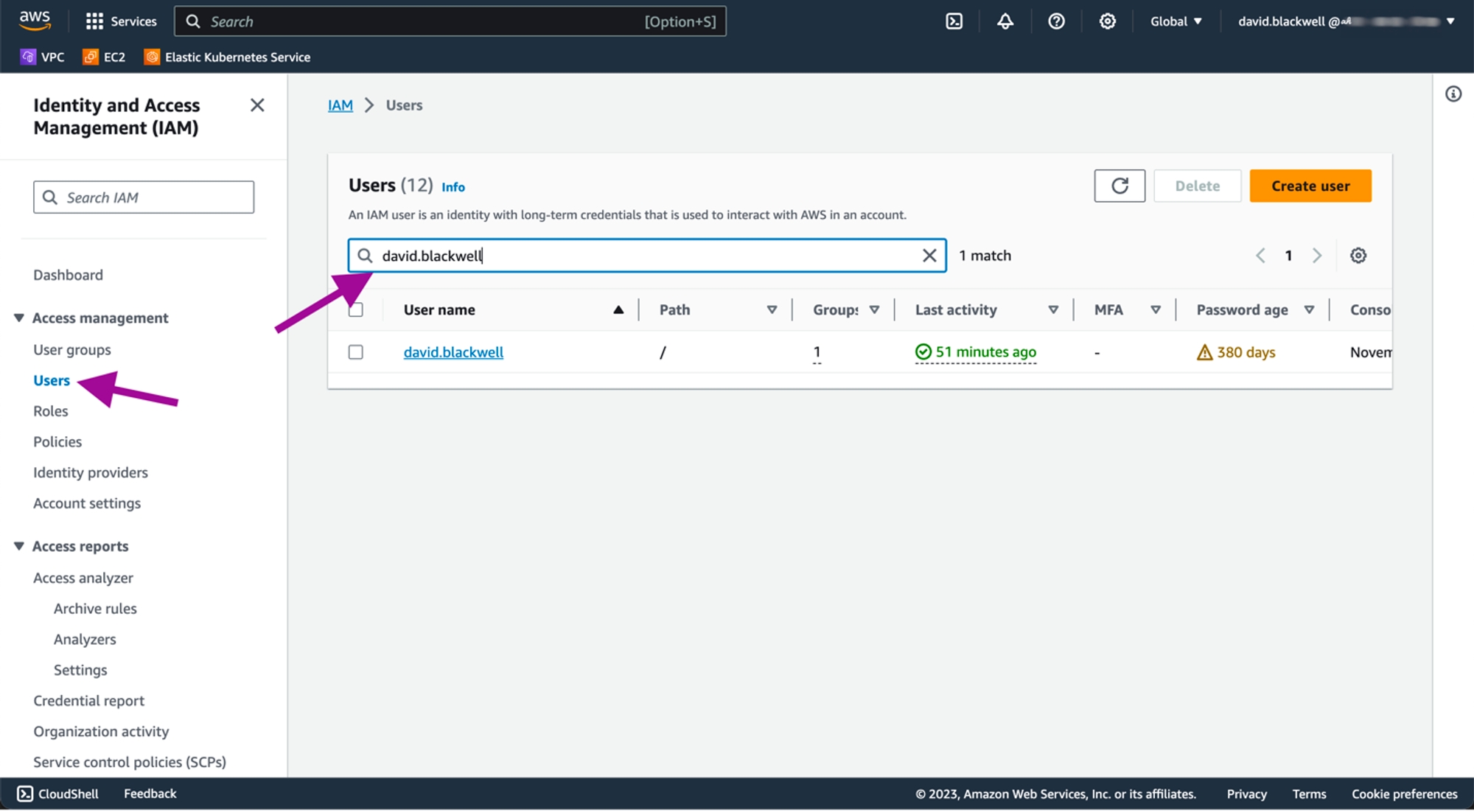

Verify permissions: Click the user’s name to review their permissions. Ensure they have policies that grant the necessary permissions for managing AWS resources through Terraform.

The user shown in the screenshot above has full administrative access, which is sufficient for Terraform to deploy WEKA. However, it is recommended to follow the principle of least privilege by granting only the necessary permissions listed in Appendix B: Terraform’s required permissions examples.

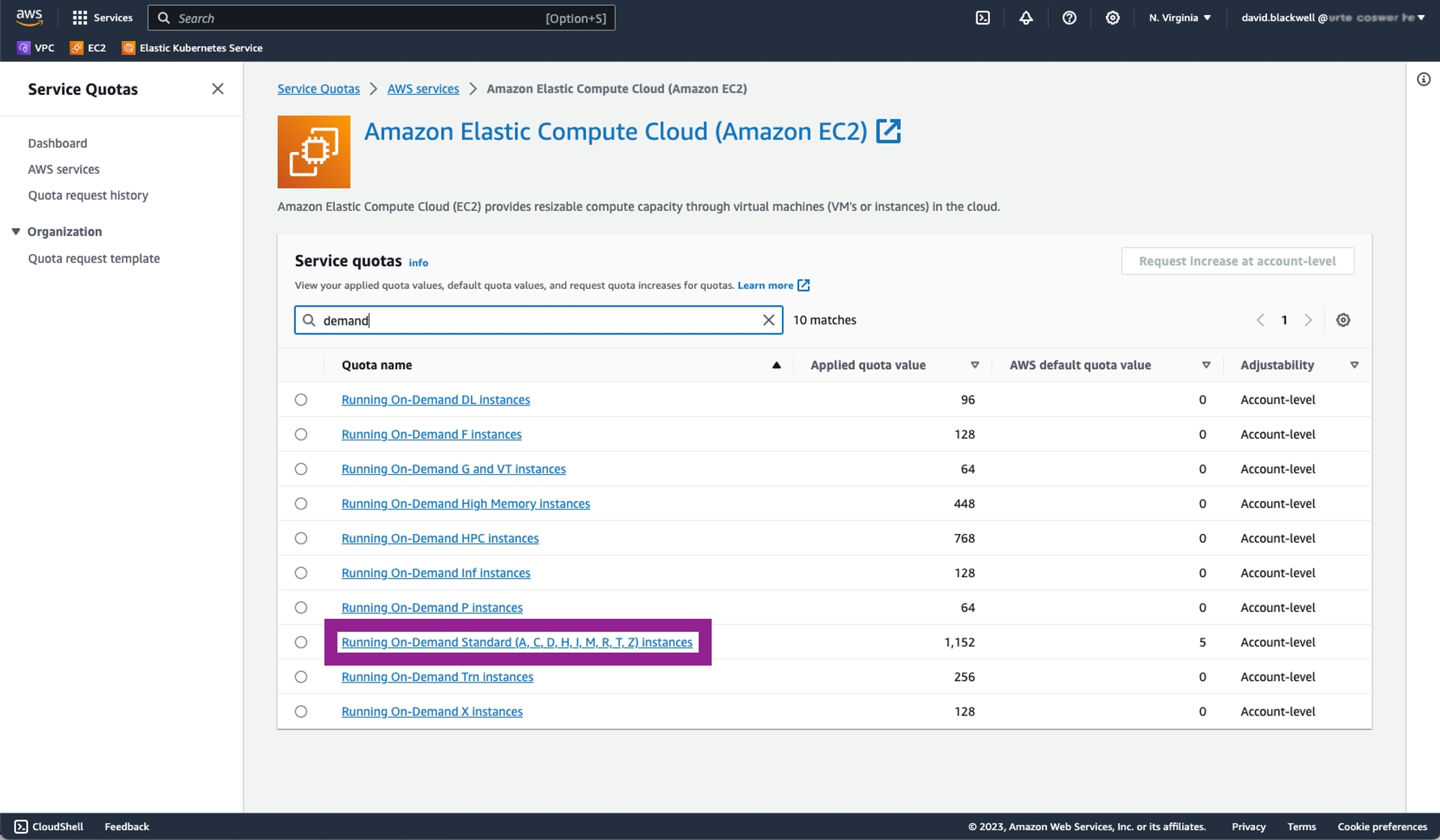

Set AWS Service Quotas

Before deploying WEKA on AWS using Terraform, ensure your AWS account has sufficient quotas for the necessary resources. Specifically, when deploying EC2 instances like the i3en for the WEKA backend cluster, manage quotas based on the vCPU count for each instance type or family.

Requirements:

EC2 Instance vCPU quotas: Verify that your vCPU requirements are within your current quota limits. If not, adjust the quotas before running the Terraform commands (details are provided later in this document).

Cumulative vCPU count: Ensure your quotas cover the total vCPU count needed for all instances. For example, deploying 10 i3en.6xlarge instances (each with 24 vCPUs) requires 240 vCPUs in total. Meeting these quotas is essential to avoid execution failures during the Terraform process, as detailed in the following sections.
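
The cumulative vCPU calculation above can be sketched as simple shell arithmetic. The function name required_vcpus is hypothetical; the instance count and per-instance vCPU figure are the ones from the example in the text.

```shell
# Hypothetical helper: total vCPUs = instance count x vCPUs per instance.
# $1 = number of instances, $2 = vCPUs per instance.
required_vcpus() {
  echo $(( $1 * $2 ))
}

# 10 i3en.6xlarge instances with 24 vCPUs each.
required_vcpus 10 24   # prints 240
```

If the deployment also includes clients or protocol gateways, repeat the calculation for each instance type and compare each total against the matching quota.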

Procedure

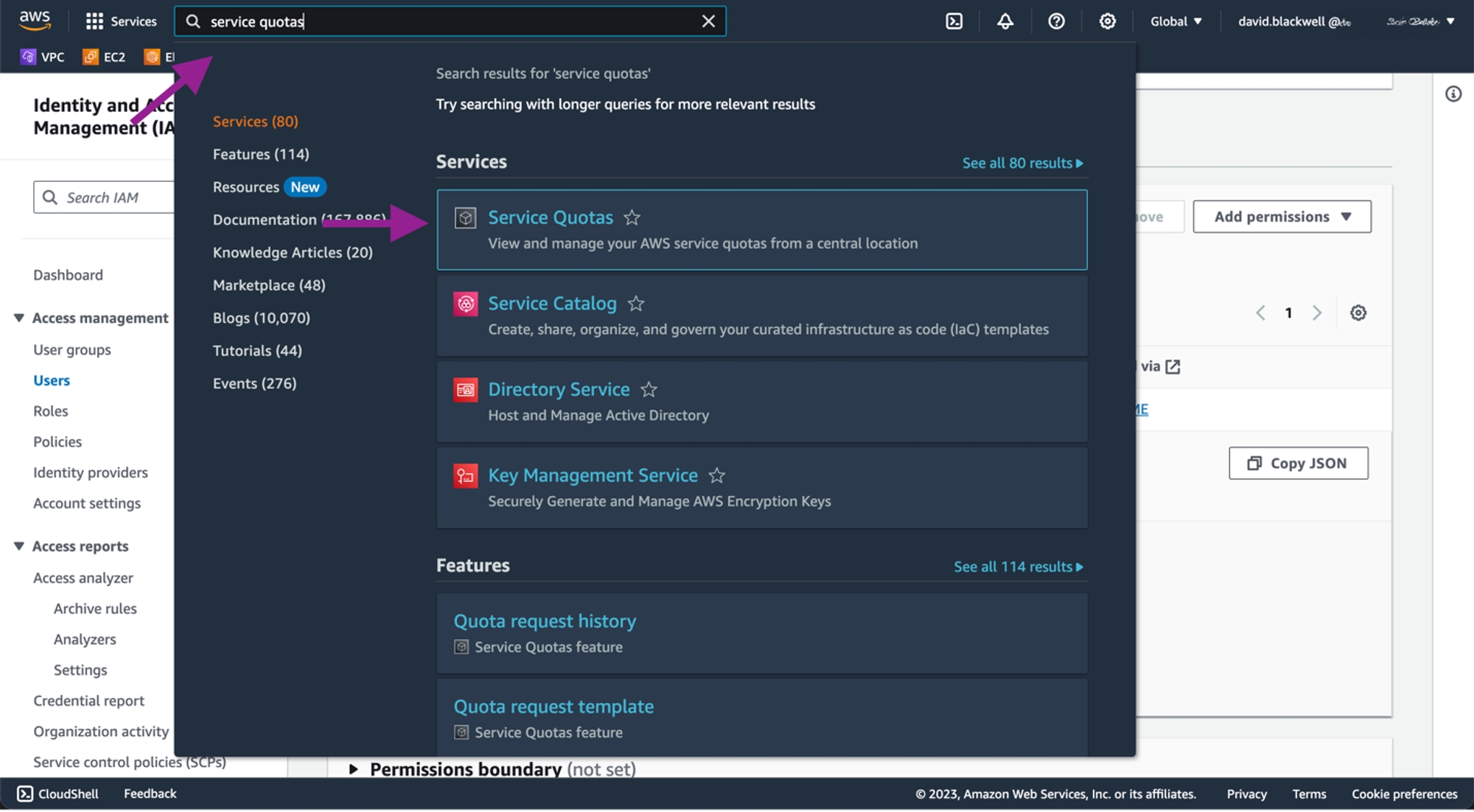

Access Service Quotas: Open the AWS Management Console at AWS Service Quotas. Use the search bar to locate the Service Quotas service.

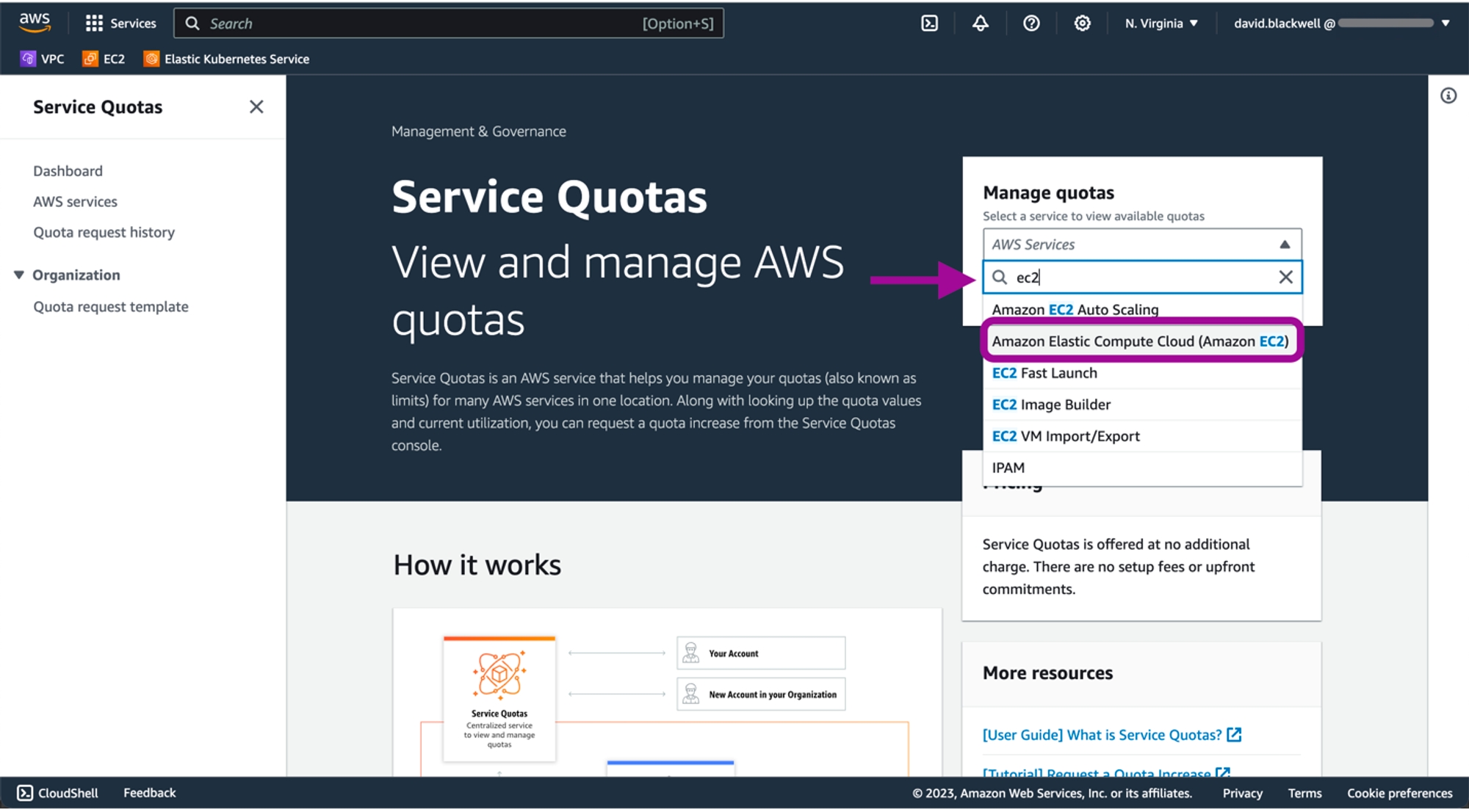

Select Amazon EC2: On the Service Quotas page, select Amazon EC2.

Identify instance type: WEKA supports only i3en instance types for backend cluster nodes. Ensure you adjust the quota for the appropriate instance type (Spot, On-Demand, or Dedicated).

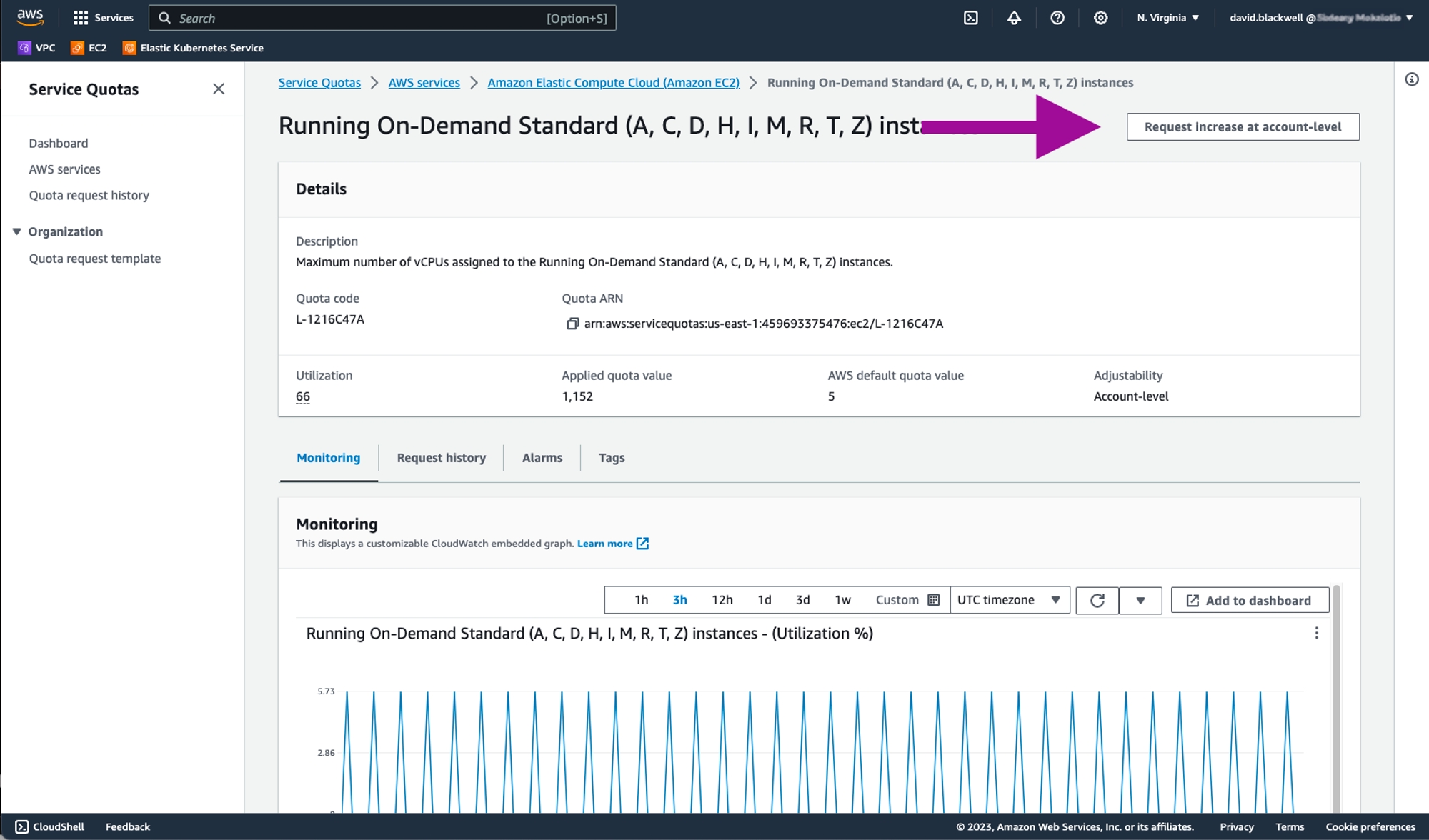

Request quota increase: Choose the relevant instance type from the Standard categories (A, C, D, H, I, M, R, T, Z), then click Request increase at account-level.

Specify number of vCPUs: In the Request quota increase form, specify the number of vCPUs you need. For example, if 150 vCPUs are required for the i3en instance family, enter this number and submit your request.

Quota increase requests are typically processed immediately. However, requests for a large number of vCPUs or specialized instance types may require manual review by AWS support. Confirm that you have requested and obtained the necessary quotas for all instance types used for WEKA backend servers and any associated application servers running WEKA client software. WEKA supports i3en series instances for backend servers.

Related topic

Supported EC2 instance types using Terraform

AWS resource prerequisites

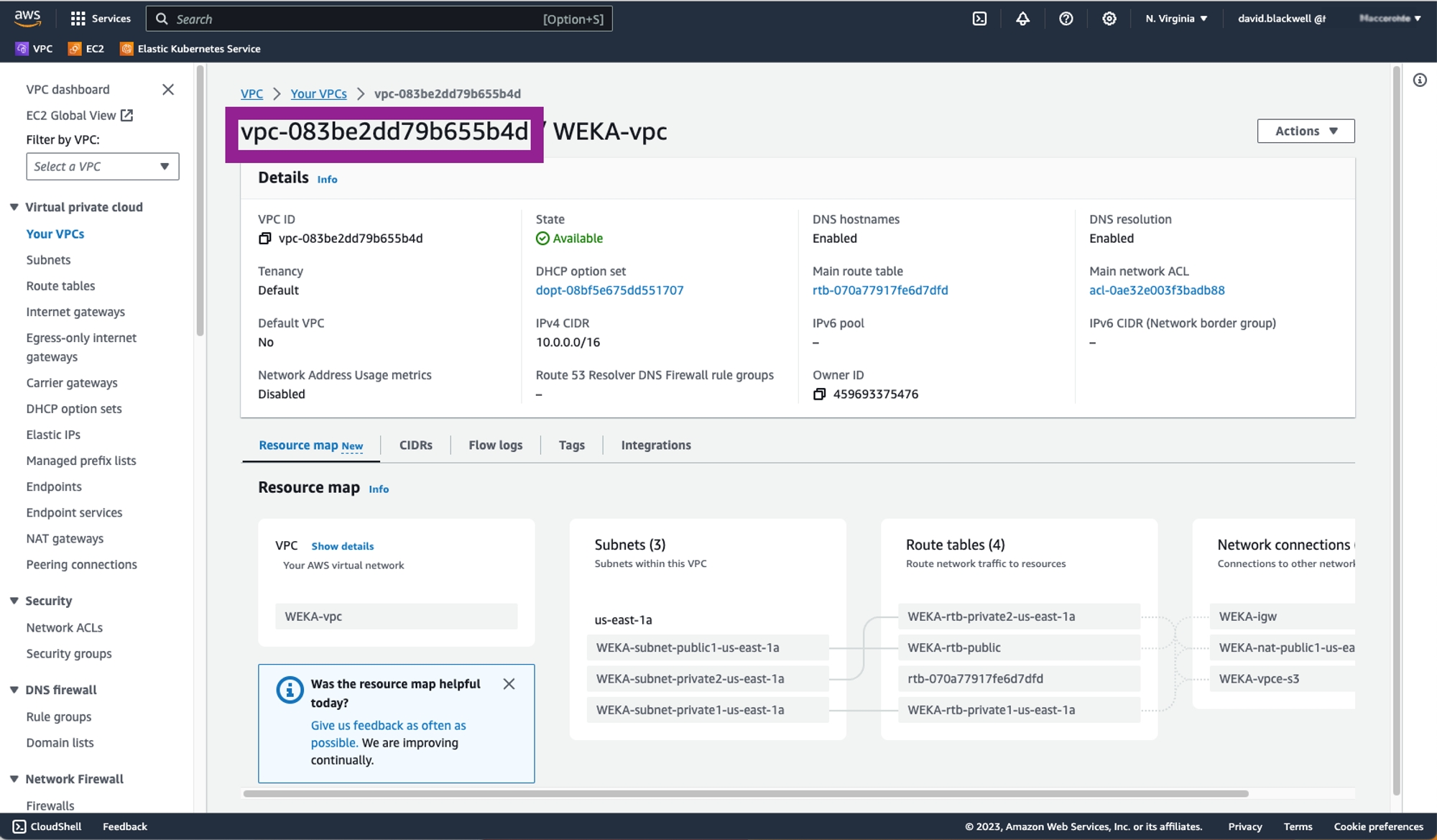

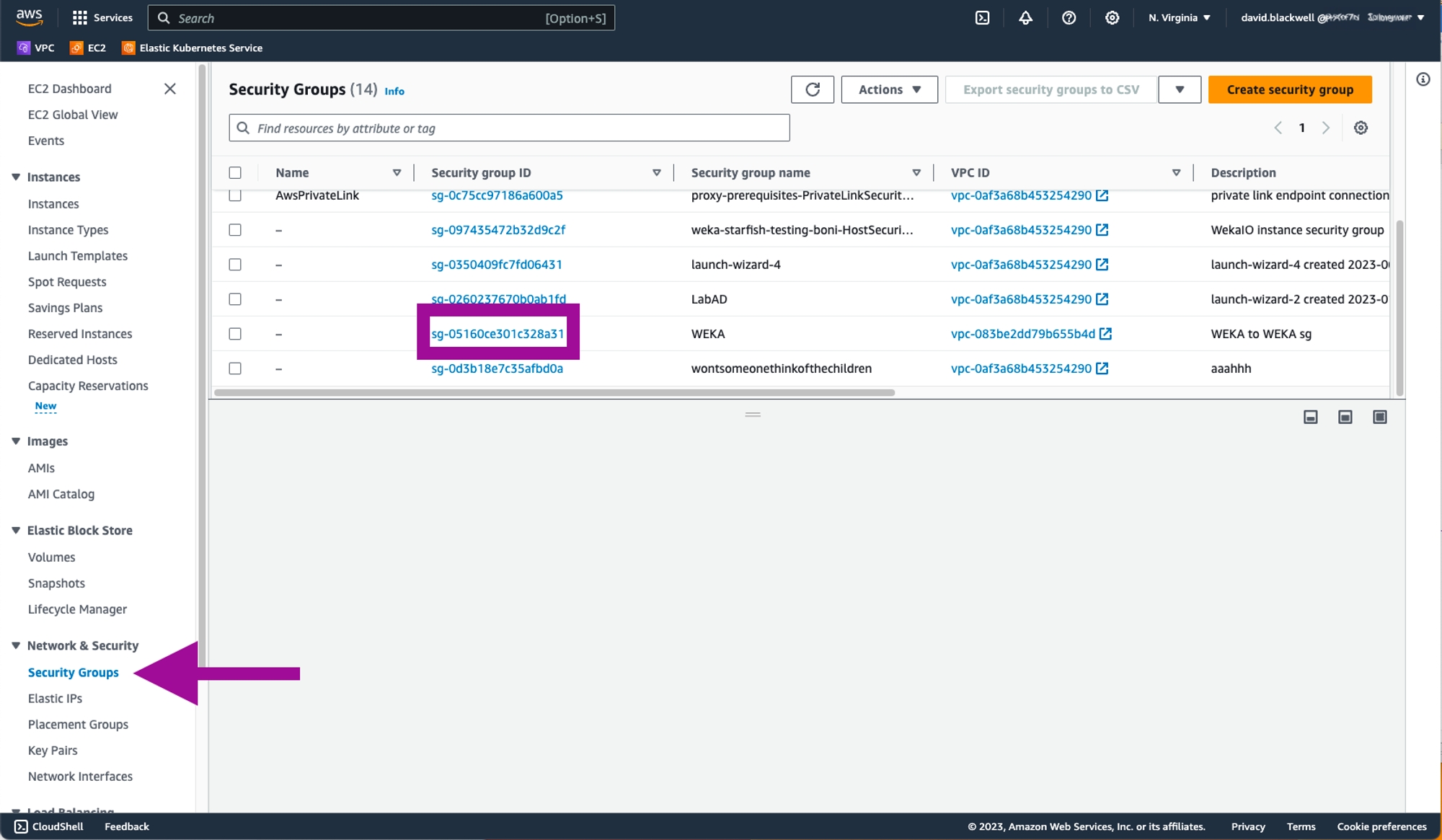

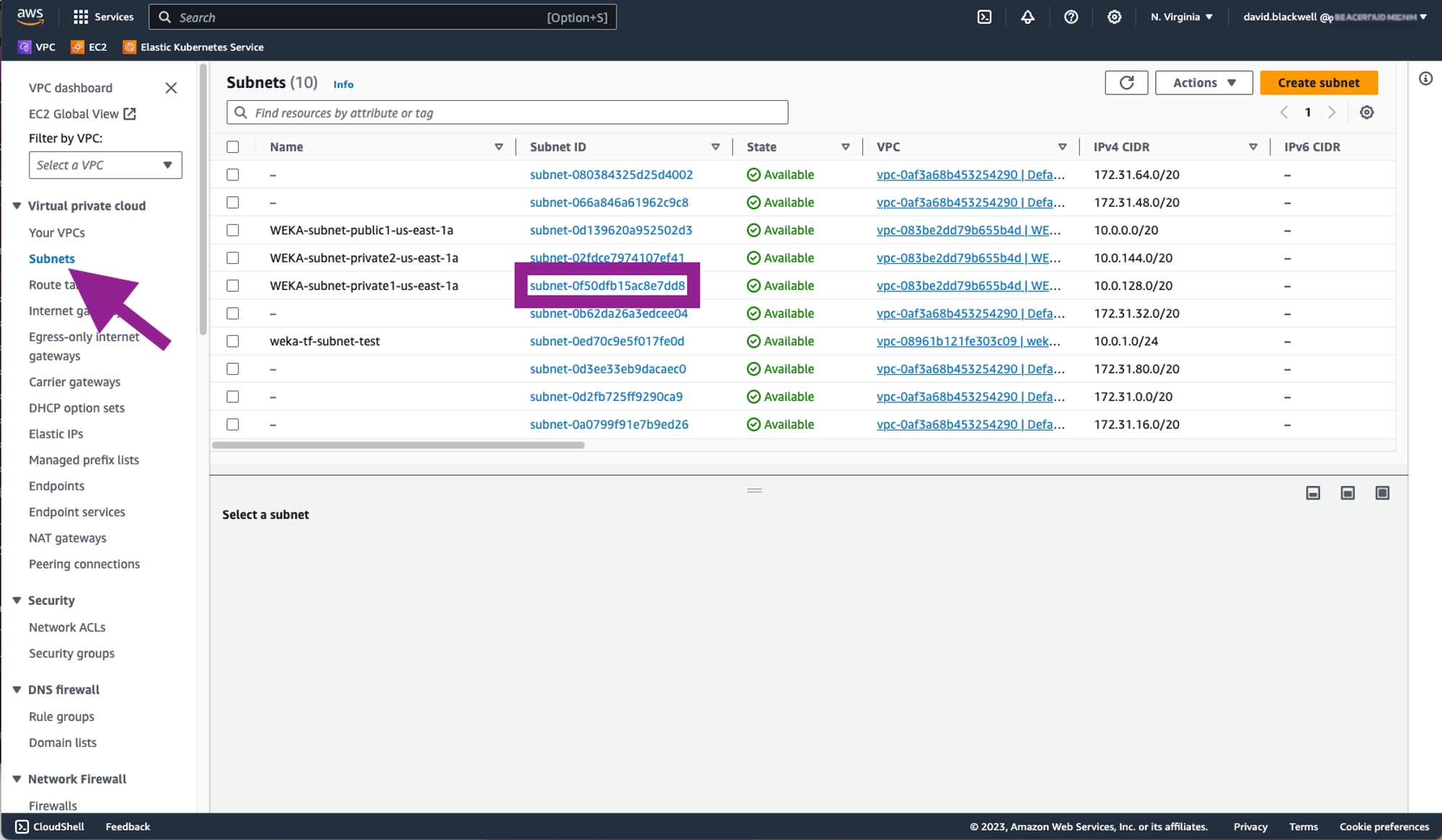

The WEKA deployment requires several AWS components, including VPCs, Subnets, Security Groups, and Endpoints. These components can either be created during the Terraform process or be pre-existing if manually configured.

Minimum requirements:

A Virtual Private Cloud (VPC)

Two Subnets in different Availability Zones (AZs)

A Security Group

Networking requirements

If you choose not to have Terraform auto-create networking components, ensure your VPC configuration includes:

Two subnets (either private or public) in separate AZs.

A subnet that allows WEKA to access the internet, either through an Internet Gateway (IGW) with an Elastic IP (EIP), NAT gateway, proxy, or egress VPC.

Although the WEKA deployment is not multi-AZ, a minimum of two subnets in different AZs is required for the Application Load Balancer (ALB).

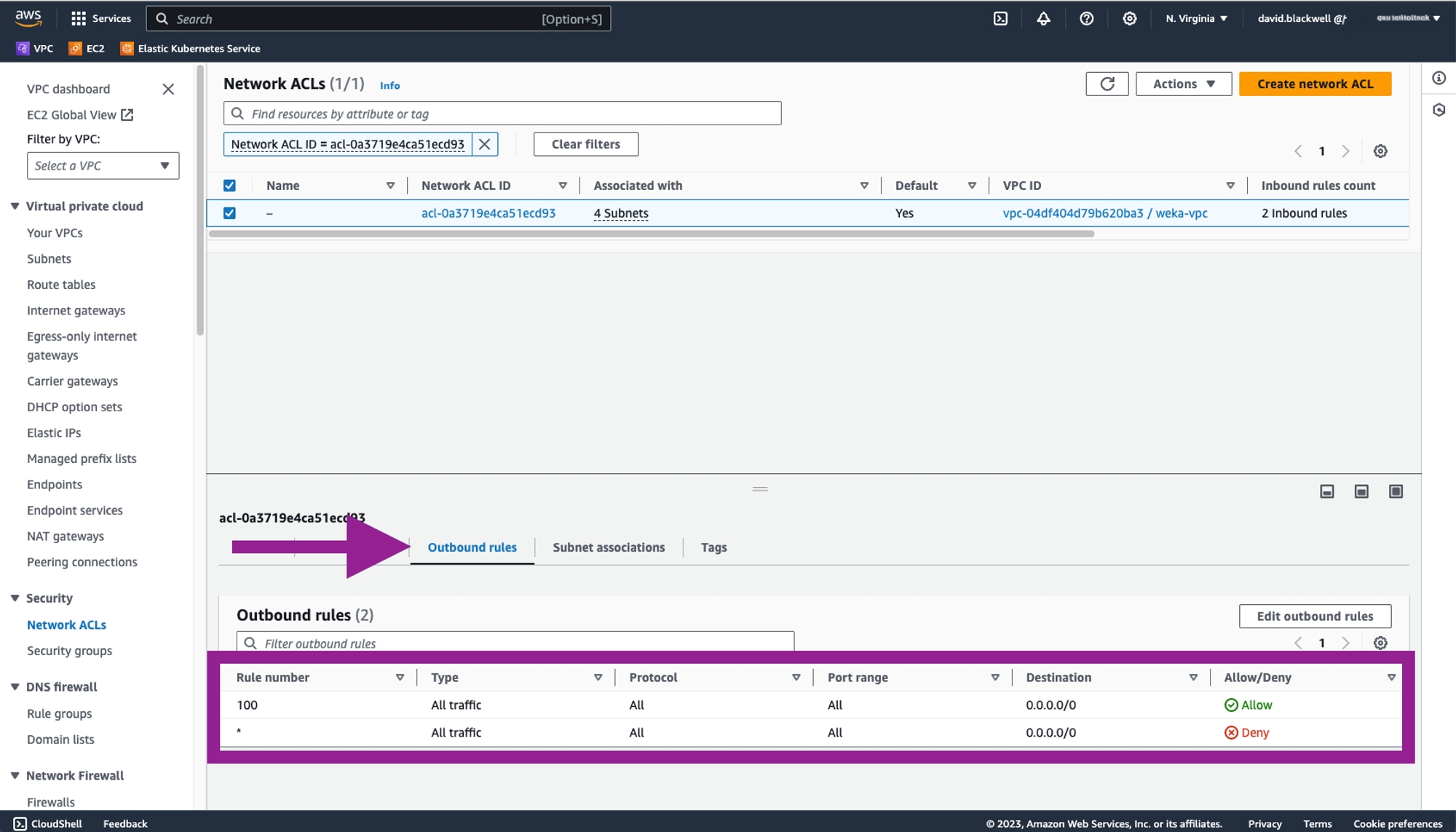

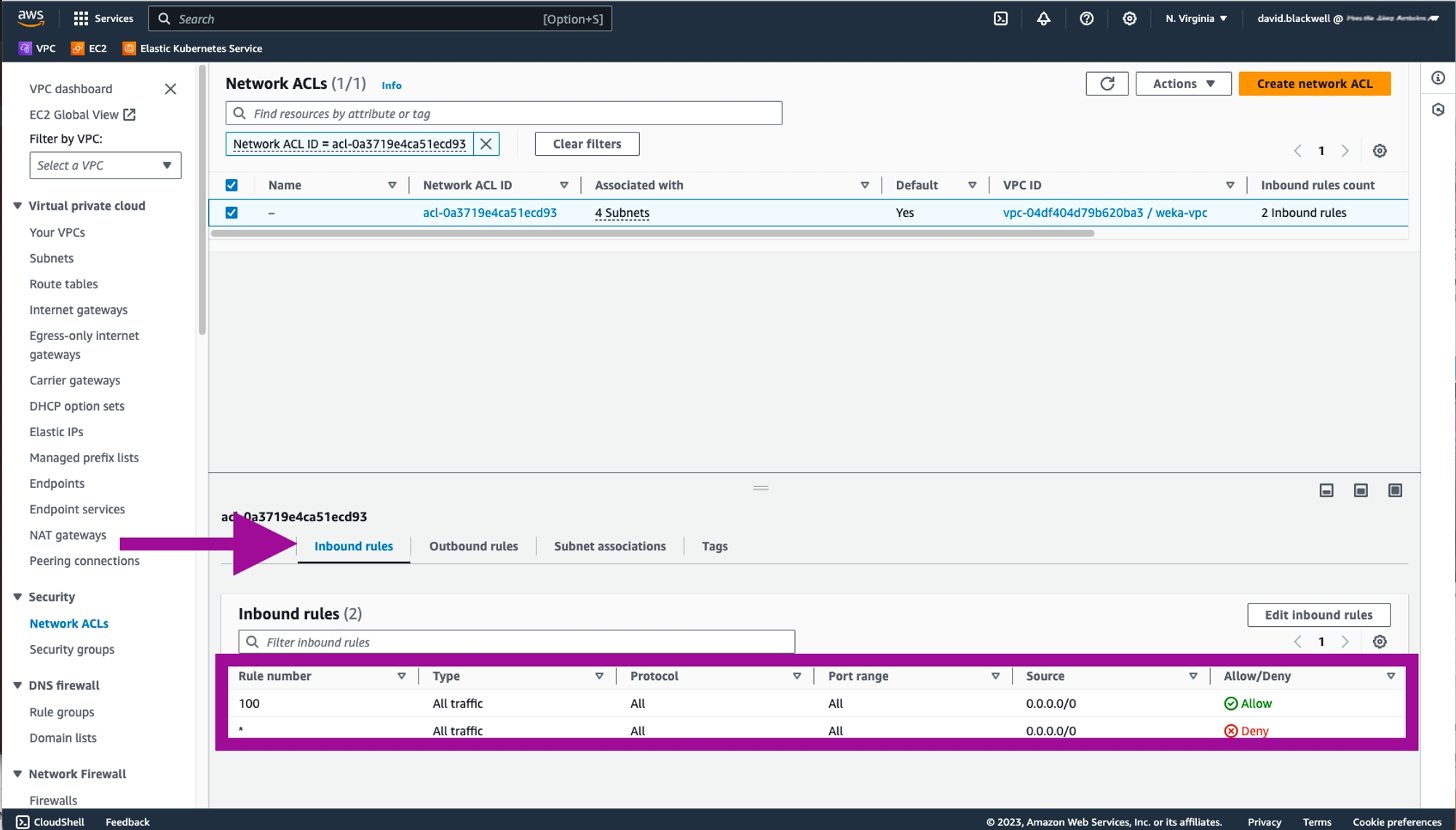

View AWS Network Access Control Lists (ACLs)

AWS Network Access Control Lists (ACLs) act as basic stateless firewalls that control inbound and outbound network traffic based on numbered rules. They apply at the subnet level; traffic to individual EC2 instances and network interfaces (NICs) is controlled by security groups.

By default, the default network ACL of a VPC allows all inbound and outbound traffic. Rules are evaluated in ascending order of rule number, so a custom rule with a lower number takes precedence over the rules that follow it. The security groups created by main.tf handle most traffic restrictions and allowances.

Procedure

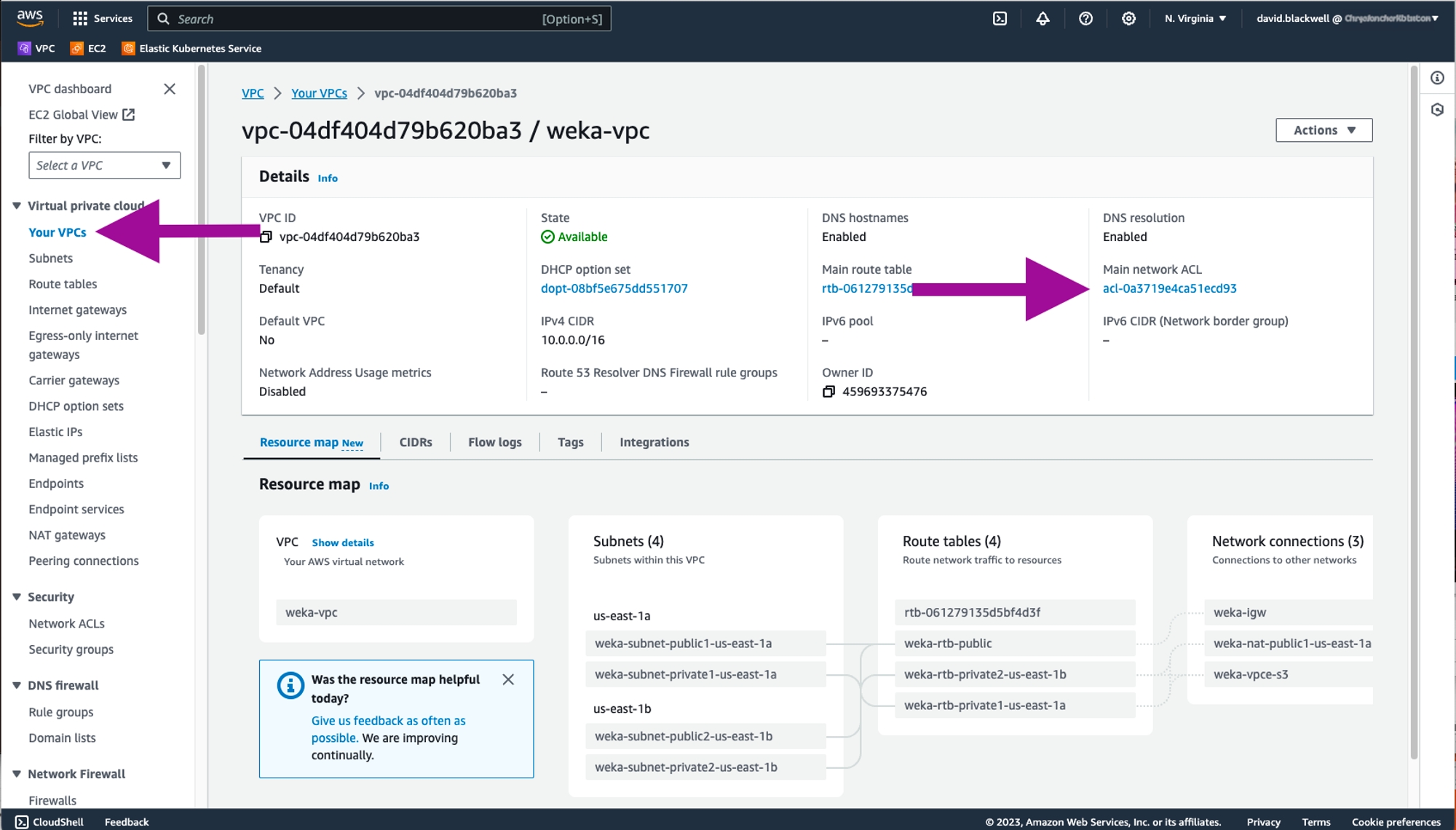

Go to the VPC details page and select Main network ACL.

From the Network ACLs page, select the Inbound rules and Outbound rules.

Related topic

Appendix A: Security Groups / network ACL ports (ensure you have defined all the relevant ports before manually creating ACLs).

Deploy WEKA on AWS using Terraform

If using existing resources, collect their AWS IDs as shown in the following examples:

Modules overview

This section covers modules for creating IAM roles, networks, and security groups necessary for WEKA deployment. If you do not provide specific IDs for security groups or subnets, the modules automatically create them.

Network configuration

Availability zones: The availability_zones variable is required when creating a network and is currently limited to a single subnet. If no specific subnet is provided, it is automatically created.

Private network deployment: To deploy a private network with NAT, set the subnet_autocreate_as_private variable to true and provide a private CIDR range. To prevent instances from receiving public IPs, set assign_public_ip to false.

SSH access

For SSH access, use the username ec2-user. You can either:

Provide an existing key pair name, or

Provide a public SSH key.

If neither is provided, the system creates a key pair and stores the private key locally.

Application Load Balancer (ALB)

To create an ALB for backend UI and WEKA client connections:

Set create_alb to true.

Provide additional required variables.

To integrate ALB DNS with your DNS zone, provide variables for the Route 53 zone ID and alias name.

Object Storage (OBS)

For S3 integration for tiered storage:

Set tiering_enable_obs_integration to true.

Provide the name of the S3 bucket.

Optionally, specify the SSD cache percentage.

Optional configurations

Clients: For automatically mounting clients to the WEKA cluster, specify the number of clients to create. Optional variables include instance type, number of network interfaces (NICs), and AMI ID.

NFS protocol gateways: Specify the number of NFS protocol gateways required. Additional configurations include instance type and disk size.

SMB protocol gateways: Create at least three SMB protocol gateways. Configuration options are similar to NFS gateways.

Secrets Manager

Use AWS Secrets Manager to store sensitive information, such as usernames and passwords. If you do not provide a Secrets Manager endpoint, disable it by setting secretmanager_use_vpc_endpoint to false.

VPC Endpoints

Enable VPC endpoints for services like EC2, S3, or a proxy by setting the respective variables to true.

Terraform output

The Terraform module output includes:

SSH username.

WEKA password secret ID.

Helper commands for learning about the clusterization process.

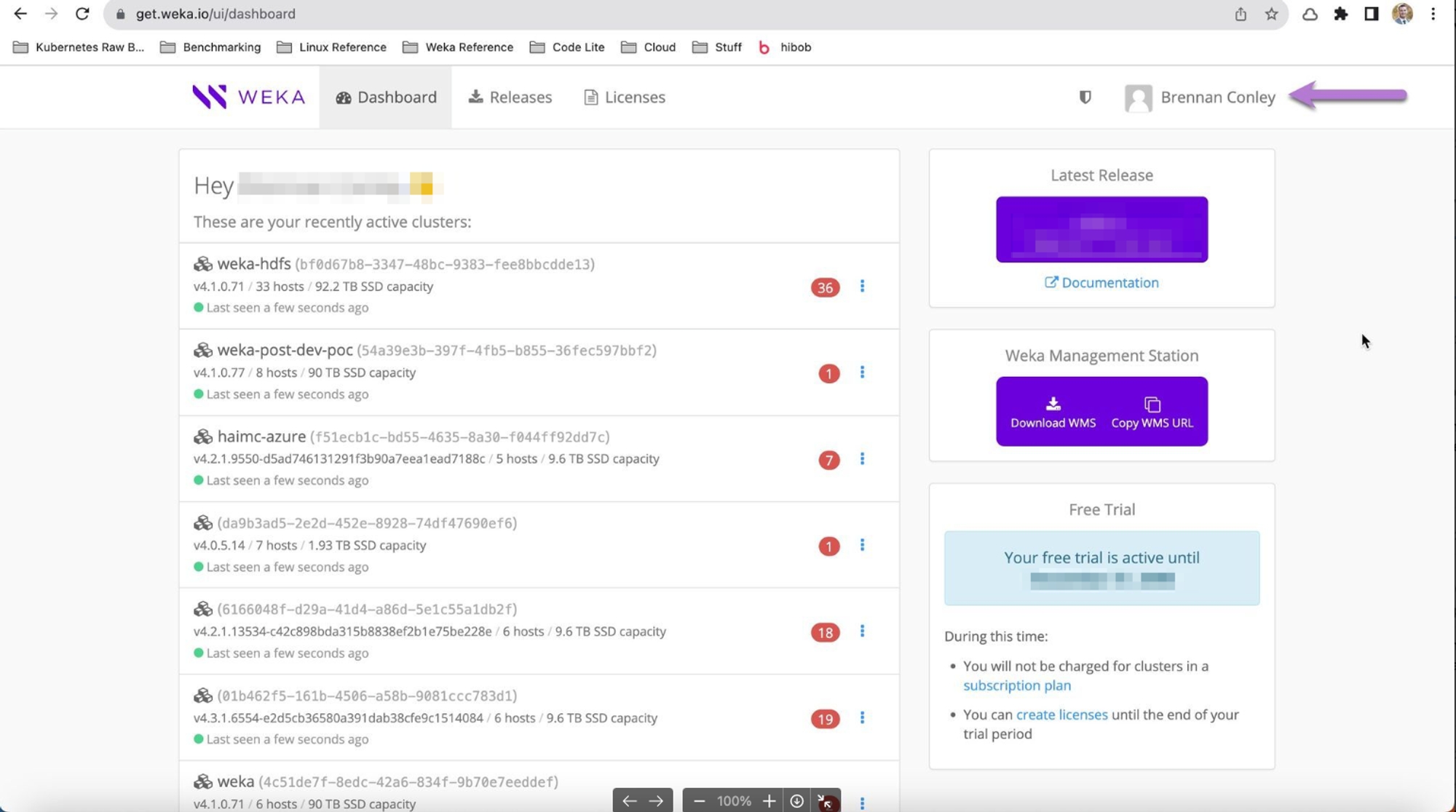

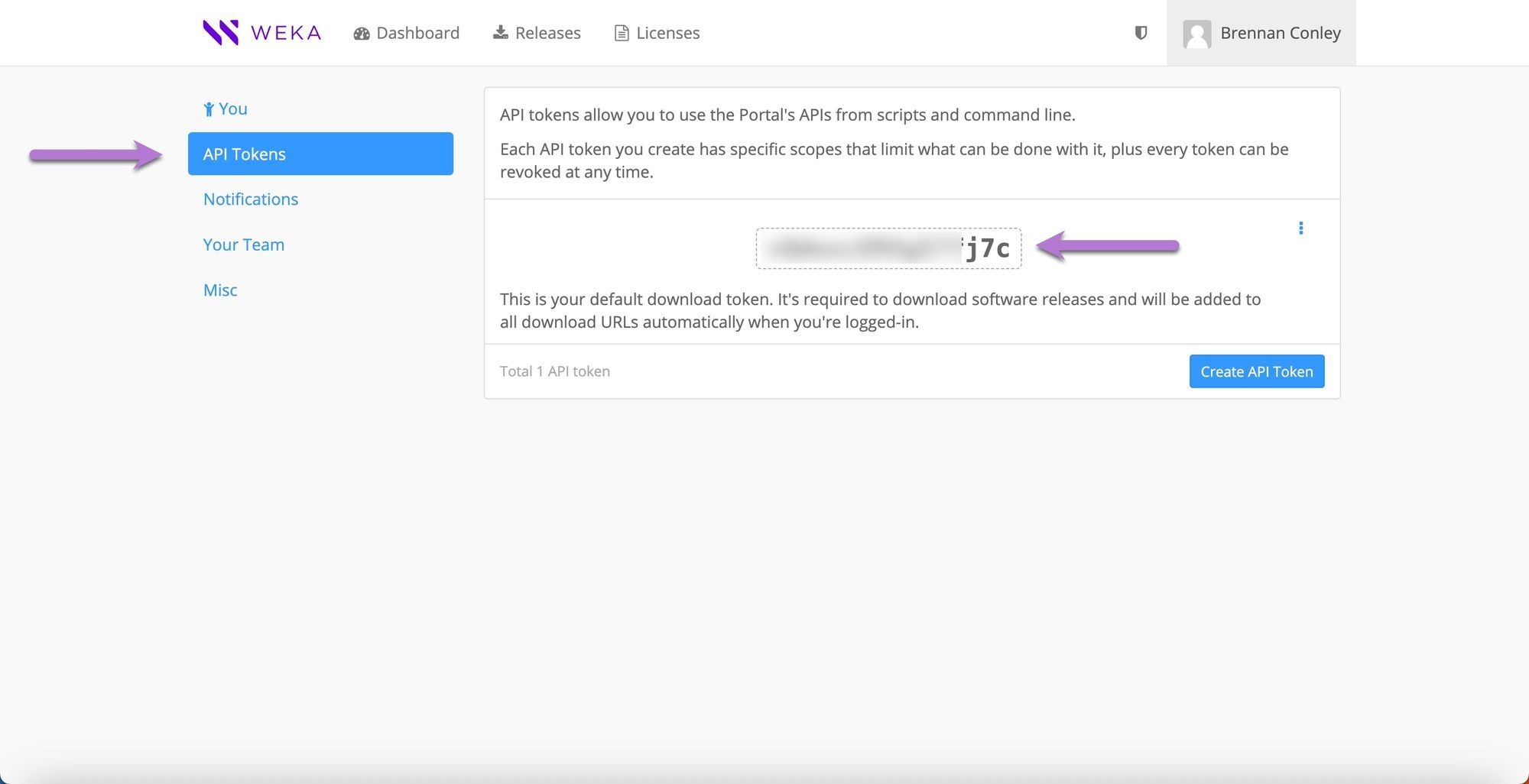

Locate the user’s token on get.weka.io

The WEKA user token grants access to WEKA binaries and is required for accessing https://get.weka.io during installation.

Procedure

Open a web browser and navigate to get.weka.io.

In the upper right-hand corner, click the user’s name.

From the left-hand menu, select API Tokens.

The user’s API token displays on the screen. Use this token later in the installation process.

Deploy WEKA in AWS with Terraform

The Terraform module facilitates the deployment of various AWS resources, including EC2 instances, DynamoDB tables, Lambda functions, and State Machines, to support WEKA deployment.

Procedure

Create a directory for the Terraform configuration files.

All Terraform deployments must be separated into their own directories to manage state information effectively. By creating a specific directory for this deployment, you can duplicate these instructions for future deployments by naming the directory uniquely, such as deploy1.

Navigate to the directory.

Create the

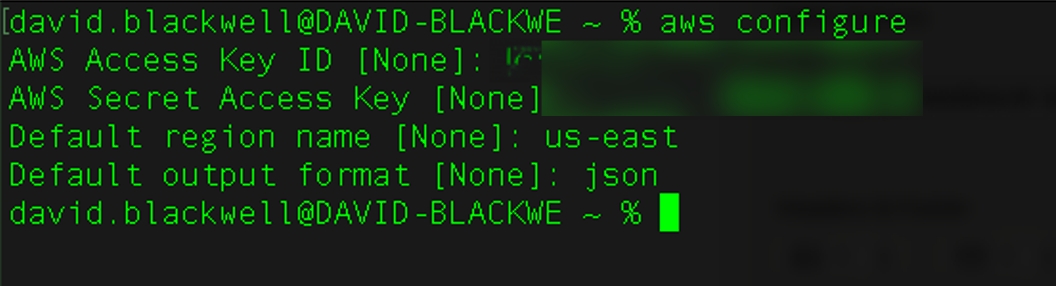

main.tffile. Themain.tffile defines the Terraform options. Create this file using the WEKA Cloud Deployment Manager (CDM). See WEKA Cloud Deployment Manager Web (CDM Web) User Guide for assistance.Authenticate the AWS CLI.

Fill in the required information and press Enter.

After creating and saving the

main.tffile, initialize the Terraform directory to ensure the proper AWS resource files are available.

As a best practice, run terraform plan to preview the changes.

Execute the creation of AWS resources necessary to run WEKA.

When prompted, type yes to confirm the deployment.
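
The procedure above can be sketched as a single shell function. This is an illustration under stated assumptions, not the exact command sequence of the original guide: the function name deploy_weka is hypothetical, the directory is assumed to already contain a main.tf file (for example, one generated with CDM), and aws configure is assumed as the authentication method (SSO or environment variables are alternatives).

```shell
# Hypothetical wrapper around the deployment procedure.
# $1 = deployment directory that already contains main.tf (default: deploy1).
deploy_weka() {
  (
    # Work inside a subshell so the caller's working directory is unchanged.
    cd "${1:-deploy1}" || exit 1
    [ -f main.tf ] || { echo "main.tf not found in $PWD" >&2; exit 1; }

    aws configure || exit 1     # prompts for access key, secret key, region, and output format
    terraform init || exit 1    # downloads the providers and modules referenced by main.tf
    terraform plan || exit 1    # previews the changes without applying them
    terraform apply             # creates the resources; type yes when prompted
  )
}
```

Keeping one directory per deployment, as recommended above, means the function can be reused by passing a different directory name, such as deploy_weka deploy2.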

Deployment output

Upon successful completion, Terraform displays output similar to the following. If the deployment fails, an error message appears.

Take note of the alb_dns_name, local_ssh_private_key, and ssh_user values. You need these details for SSH access to the deployed instances. The output includes a cluster_helper_commands section, offering three AWS CLI commands to retrieve essential information.

Core resources created:

Database (DynamoDB): Stores the state of the WEKA cluster.

EC2: Launch templates for auto-scaling groups and individual instances.

Networking: Includes a Placement Group, Auto Scaling Group, and an optional ALB for the UI and backends.

CloudWatch: Triggers the state machine every minute.

IAM: Roles and policies for various WEKA components.

Secrets Manager: Securely stores WEKA credentials and tokens.

Lambda functions created:

deploy: Provides installation scripts for new machines.

clusterize: Executes the script for cluster formation.

clusterize-finalization: Updates the cluster state after cluster formation is completed.

report: Reports the progress of cluster formation and machine installation.

status: Displays the current status of cluster formation.

State machine functions:

fetch: Retrieves cluster or autoscaling group details and passes them to the next stage.

scale-down: Uses the retrieved information to manage the WEKA cluster, including deactivating drives or hosts. An error is triggered if an unsupported target, like scaling down to two backend instances, is provided.

terminate: Shuts down deactivated hosts.

transient: Handles and reports transient errors, such as if some hosts couldn't be deactivated, while others were, allowing the operation to continue.

Deploy protocol servers

The Terraform deployment process allows for the easy addition of instances to serve as protocol servers for NFS or SMB. These protocol servers are separate from the instances specified for the WEKA backend cluster.

Procedure

Open the main.tf file for editing.

Add the required configurations to define the number of protocol servers for each type (NFS or SMB). Use the default settings for all other parameters. Insert the configuration lines before the last closing brace (}) in the main.tf file.

Example configurations:

Save and close the file.

Obtain access information about the WEKA cluster

Determine the WEKA cluster IP address(es)

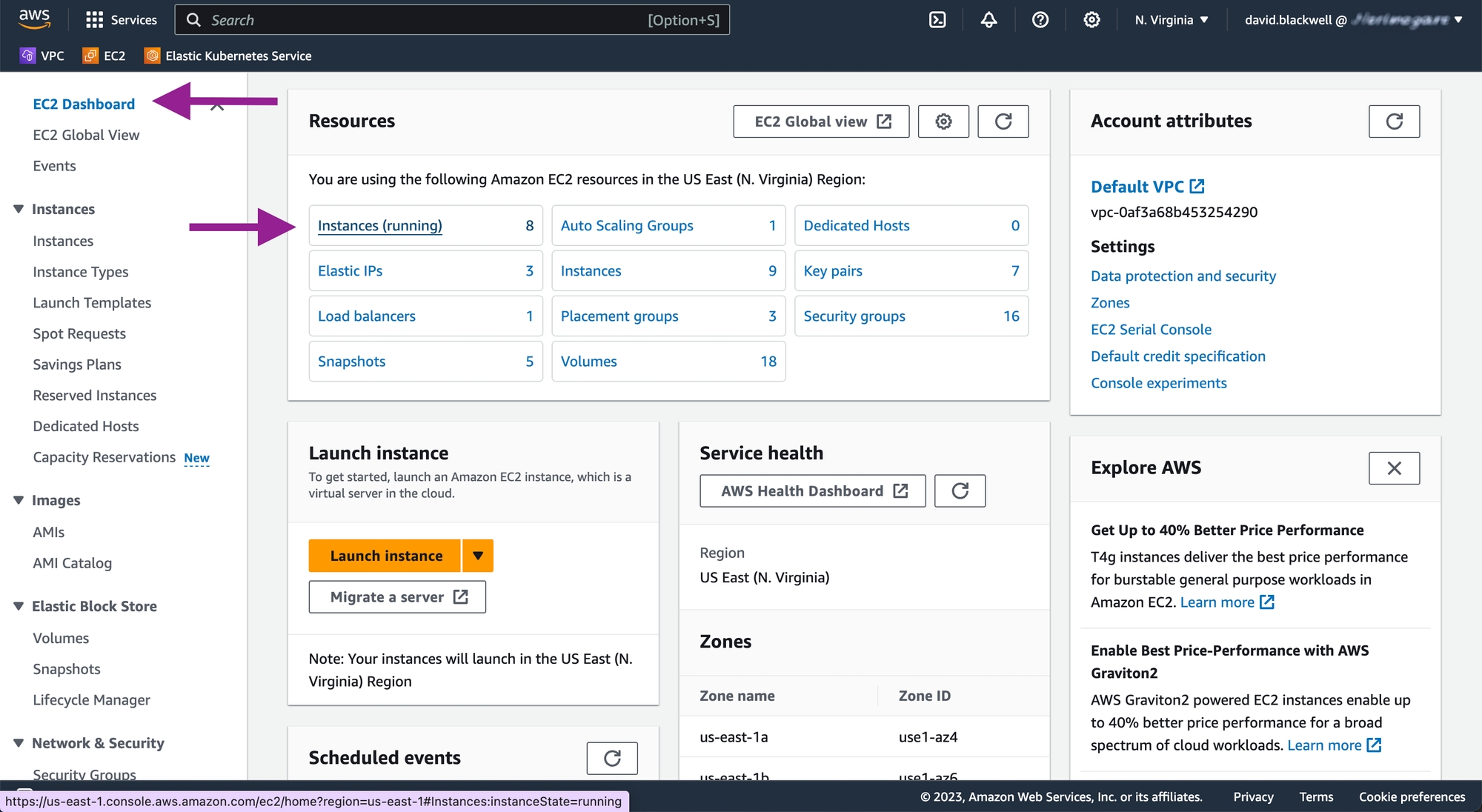

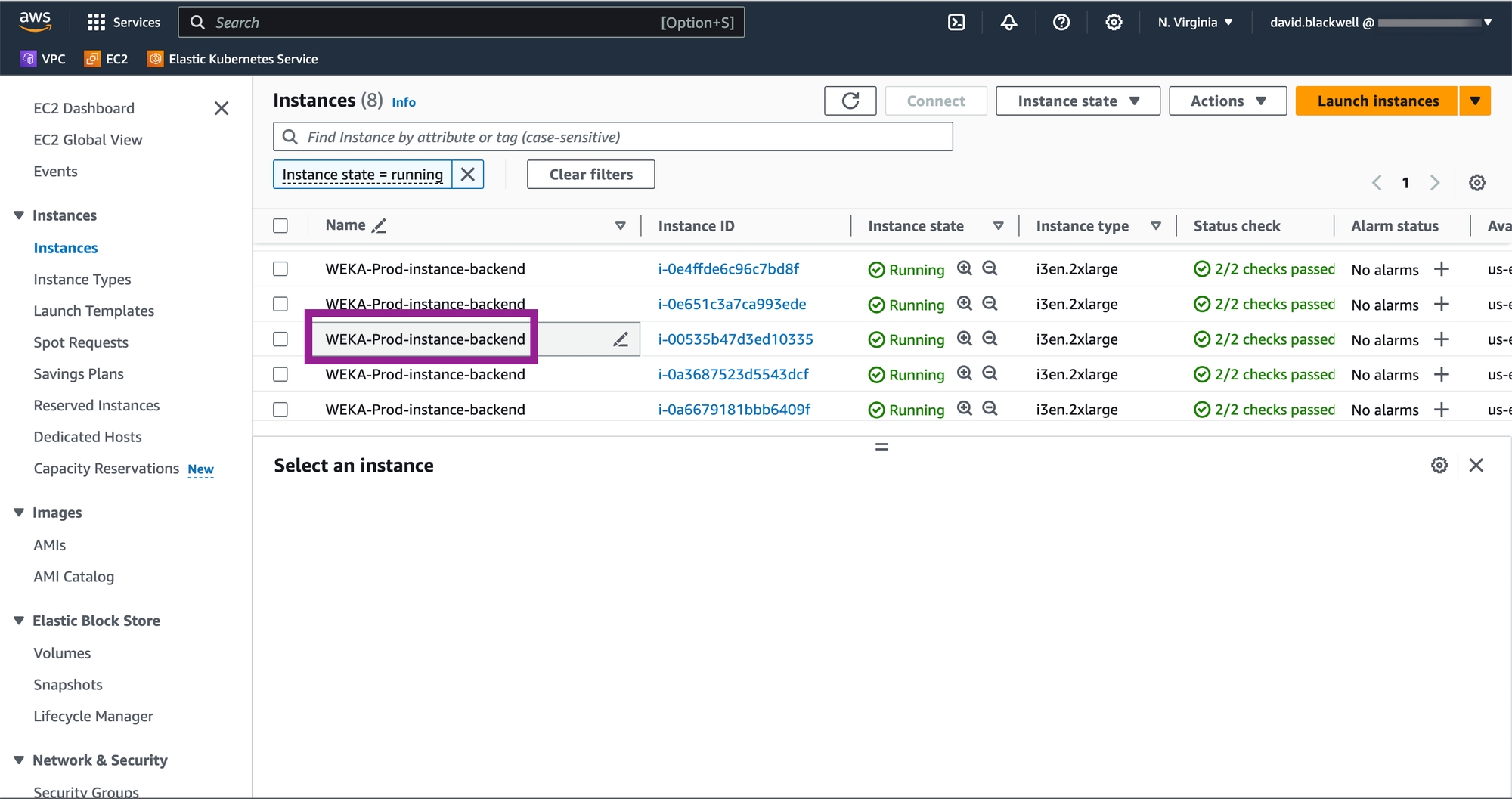

Navigate to the EC2 Dashboard page in AWS and select Instances (running).

Locate the instances for the WEKA backend servers, named <prefix>-<cluster_name>-instance-backend.

The prefix and cluster_name correspond to the values specified in the main.tf file.

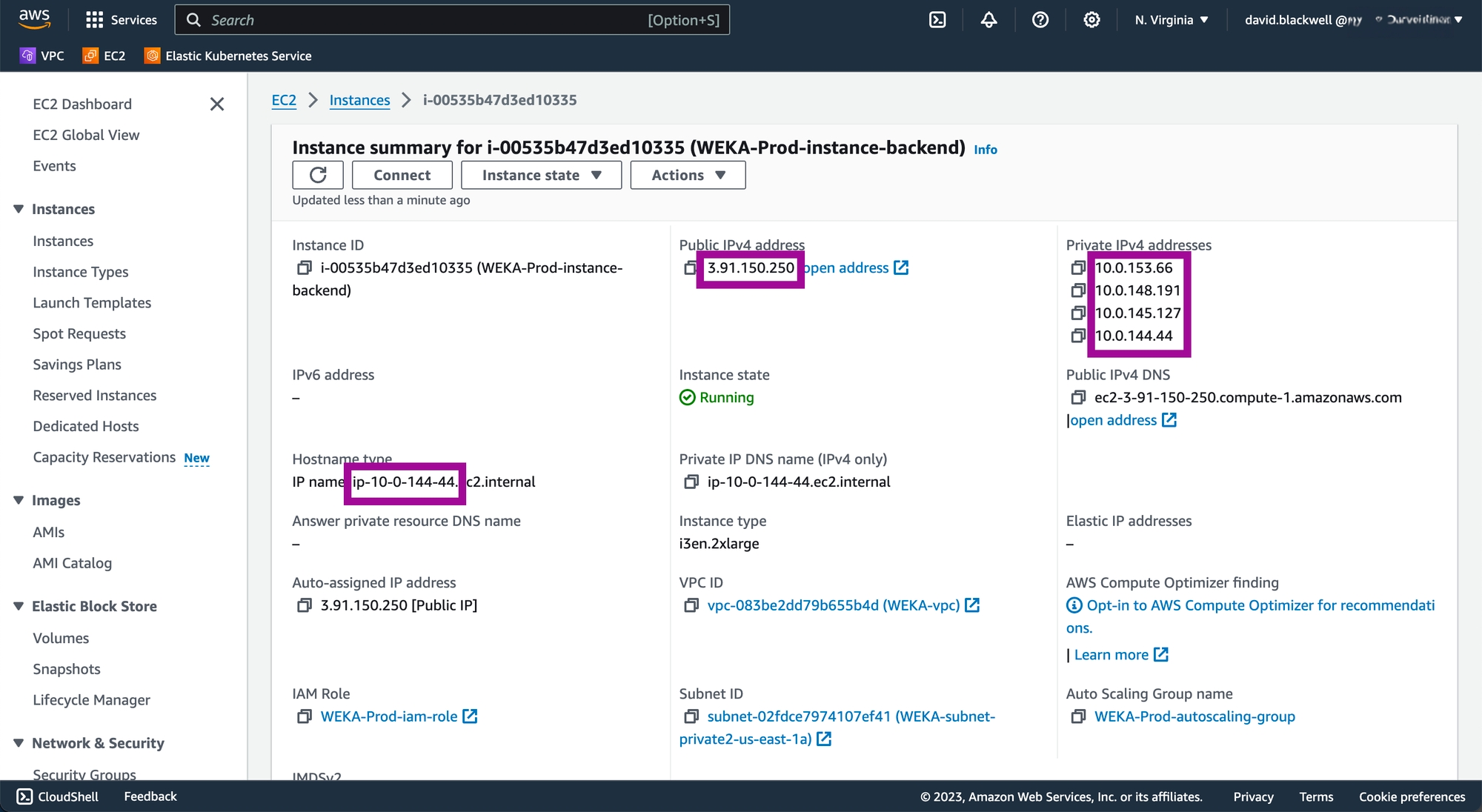

To access and manage the WEKA cluster, select any of the WEKA backend instances and note the IP address.

If your subnet provides a public IP address (configured in EC2), it is listed. WEKA primarily uses private IPv4 addresses for communication. To find the primary private address, check the Hostname type and note the IP address listed.

Obtain WEKA cluster access password

The password for your WEKA cluster is securely stored in AWS Secrets Manager. This password is crucial for managing your WEKA cluster.

You can retrieve the password using one of the following options:

Run the aws secretsmanager get-secret-value command and include the arguments provided in the Terraform output. See the deployment output above.

Use the AWS Management Console. See the following procedure.

Procedure

Navigate to the AWS Management Console.

In the search bar, type Secrets Manager and select it from the search results.

In the Secrets Manager, select Secrets from the left-hand menu.

Find the secret corresponding to your deployment by looking for a name that includes your deployment’s prefix and cluster_name, along with the word password.

Retrieve the password: Click the identified secret to open its details, and select the Retrieve secret value button. The console displays the randomly generated password assigned to the WEKA user admin. Store it securely and use it according to your organization's security policies.
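
The command-line option mentioned above can be sketched as follows. The function name get_weka_password is hypothetical, and the secret ID must come from your own Terraform output; the --query and --output options are standard AWS CLI options used here to print only the secret string.

```shell
# Hypothetical helper: print the value of a Secrets Manager secret.
# $1 = secret ID or ARN, as reported in the Terraform output.
get_weka_password() {
  aws secretsmanager get-secret-value \
    --secret-id "$1" \
    --query SecretString \
    --output text
}
```

If the CLI default region differs from the deployment region, add the --region option with the region of your deployment. Avoid saving the printed password in shell history or log files.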

Access the WEKA cluster backend servers

To manage your WEKA cluster, you need to access the backend servers. This is typically done using SSH from a system that can communicate with the AWS subnet where the instances are located. If the backend instances lack public IP addresses, ensure that you connect from a system within the same network or use a jump host or bastion host.

Procedure

Prepare for SSH access:

Identify the IP address of the WEKA backend server (obtained during the Terraform deployment).

Locate the SSH private key file used during the deployment. The key path is provided in the Terraform output.

Connect to the backend server:

If your system is within the AWS network or has direct access to the relevant subnet, proceed with the SSH connection.

If your system is outside the AWS network, set up a Jump Host or Bastion Host that can access the subnet.

Execute the SSH command:

Use the following SSH command to access the backend server:

Replace /path/to/private-key.pem with the actual path to your SSH private key file.

Replace <server-ip-address> with the IP address of the WEKA backend server.
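
The SSH step above can be sketched as a small shell function, assuming the ec2-user username described in the SSH access section. The function name ssh_backend is hypothetical.

```shell
# Hypothetical helper: open an SSH session to a WEKA backend as ec2-user.
# $1 = path to the SSH private key file, $2 = backend server IP address.
ssh_backend() {
  ssh -i "$1" "ec2-user@$2"
}
```

For example, ssh_backend /path/to/private-key.pem 10.5.0.11 connects to a backend at the private address 10.5.0.11, provided the calling system can reach that subnet.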

WEKA GUI Login and Review

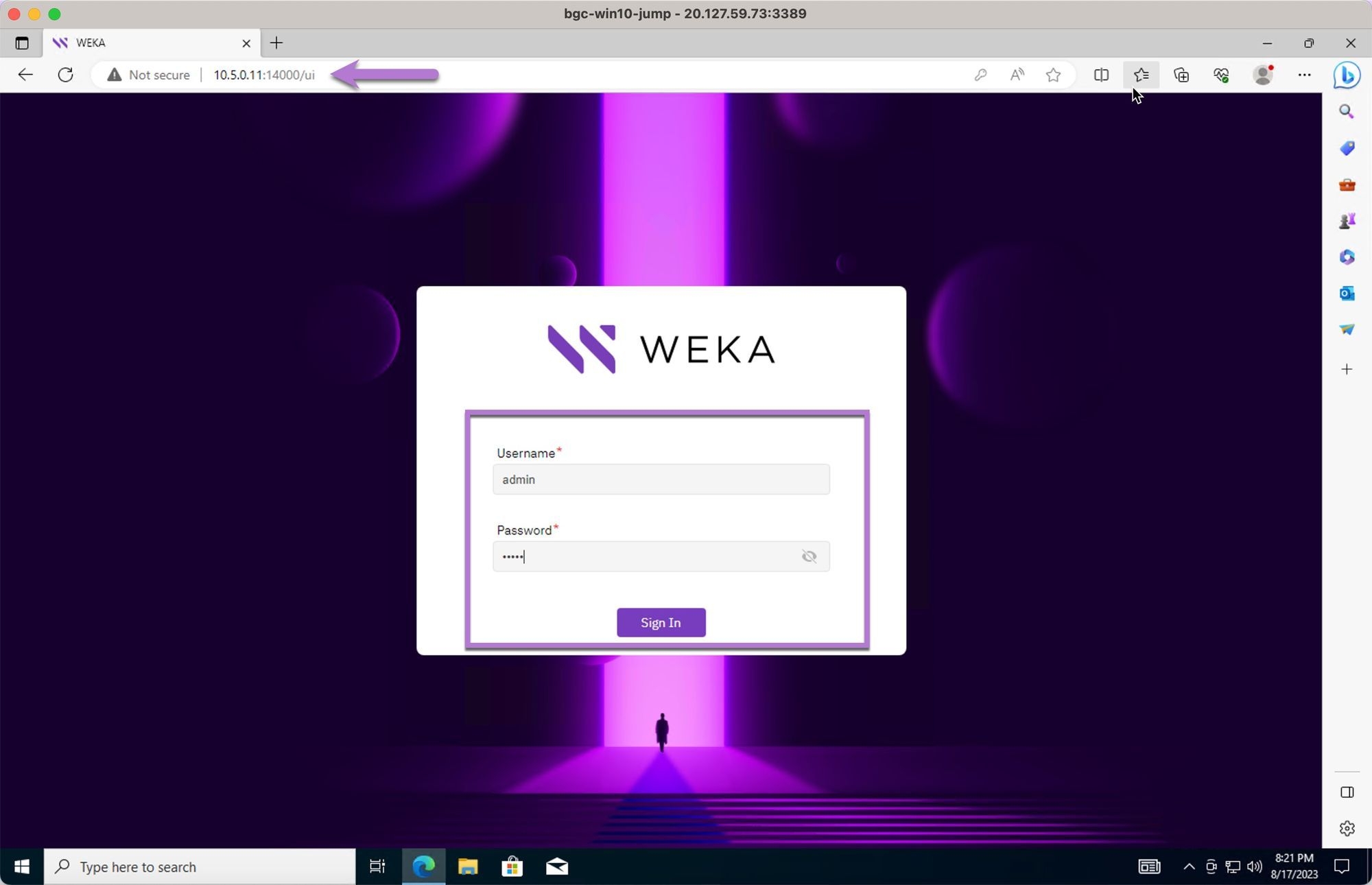

To manage your WEKA cluster through the GUI, you need access to a jump box (a system with a graphical desktop) that is deployed in the same VPC and subnet as your WEKA cluster. This allows you to securely access the WEKA GUI through a web browser.

The following procedure provides an example of using a Windows 10 instance.

Procedure

Set up the jump box:

Deploy a Windows 10 instance in the same VPC, subnet, and security group as your WEKA cluster.

Assign a public IP address to the Windows 10 instance.

Modify the security group rules to allow Remote Desktop Protocol (RDP) access to the Windows 10 system.

Access the WEKA GUI:

Open a web browser on the Windows 10 jump box.

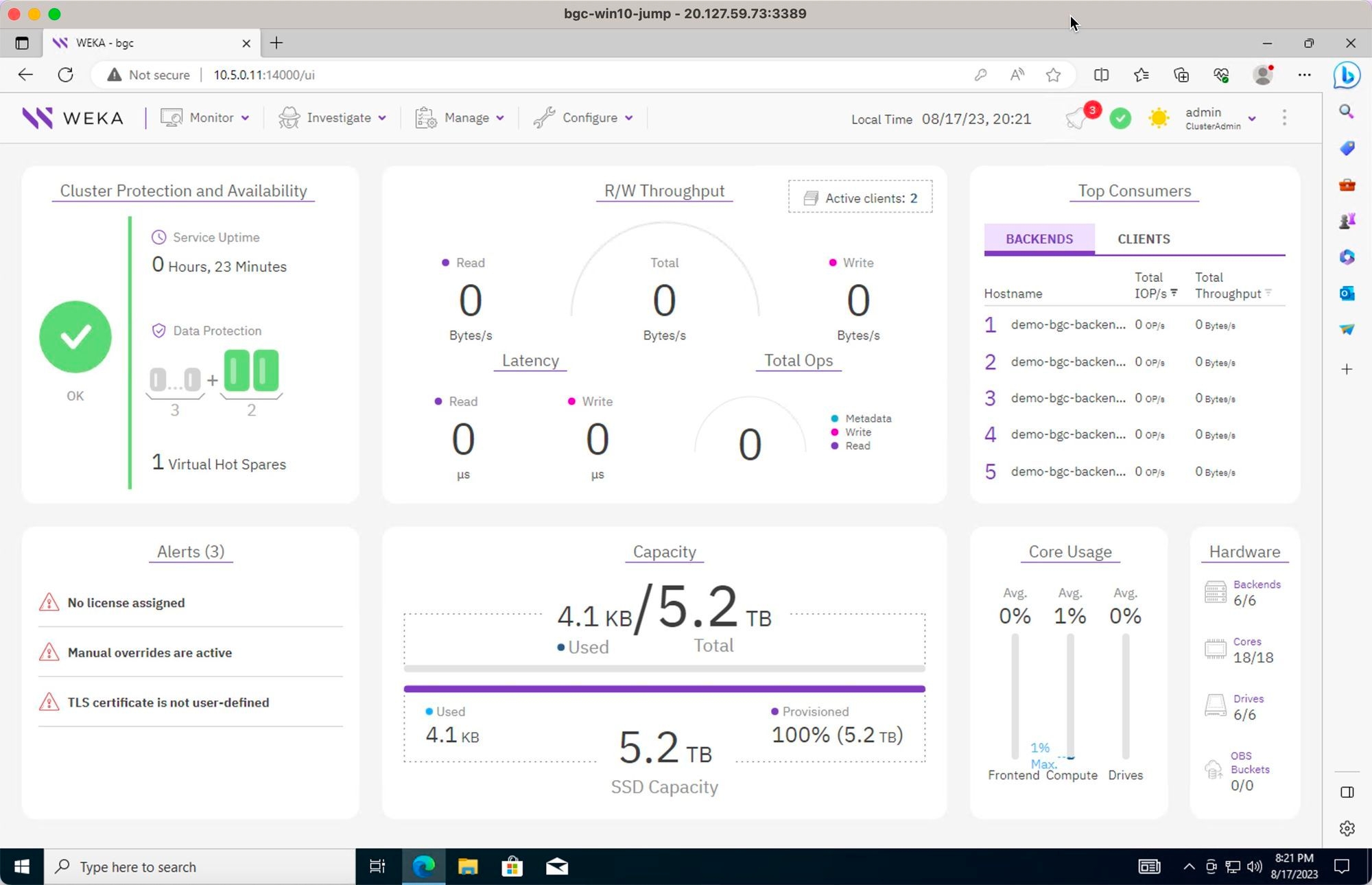

Navigate to the WEKA GUI by entering the following URL:

Replace <IP> with the IP address of your WEKA cluster. For example: https://10.5.0.11.

The WEKA GUI login screen appears.

Log in to the WEKA GUI:

Log in using the username admin and the password obtained from AWS Secrets Manager (as described in the earlier steps).

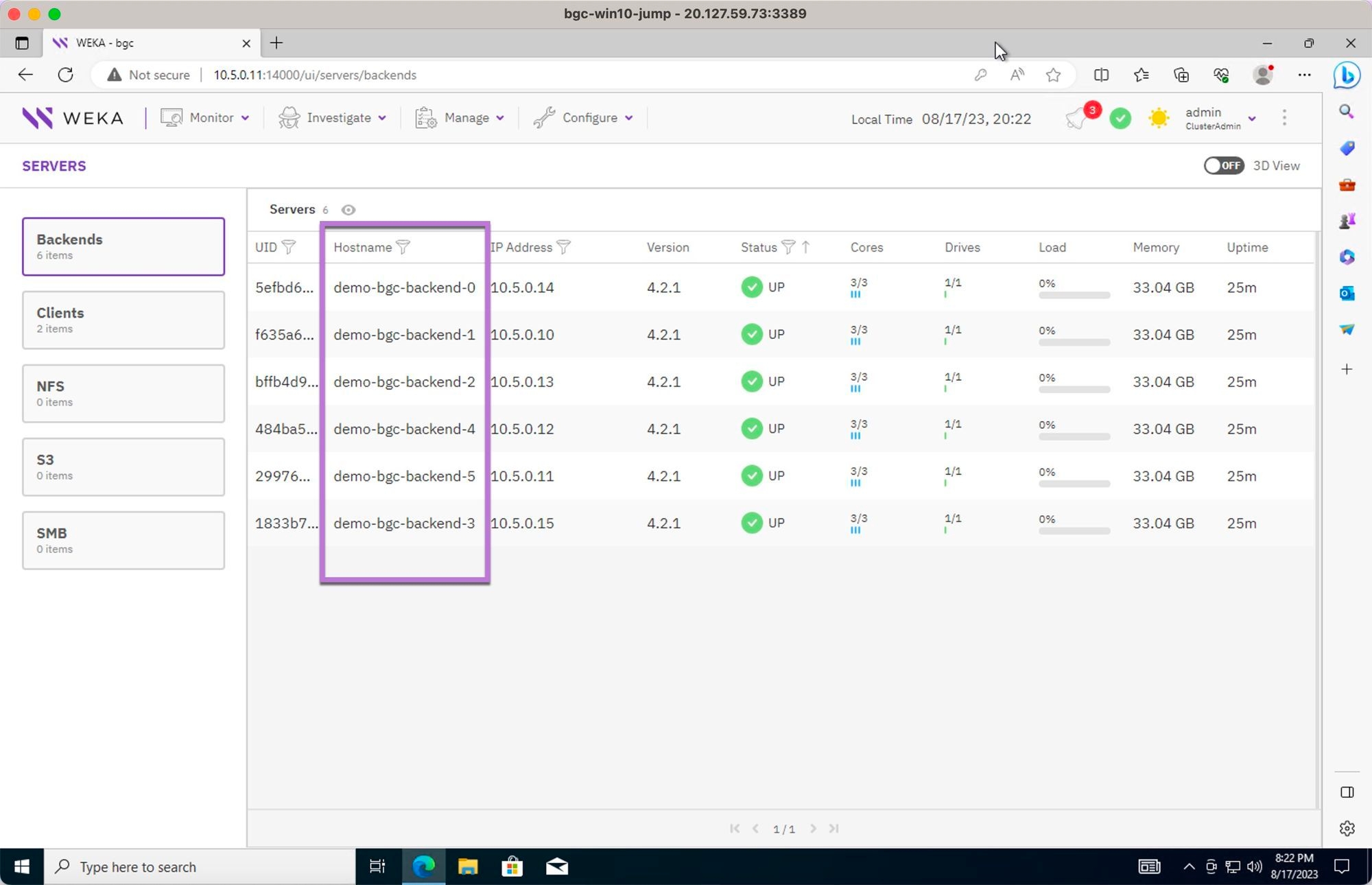

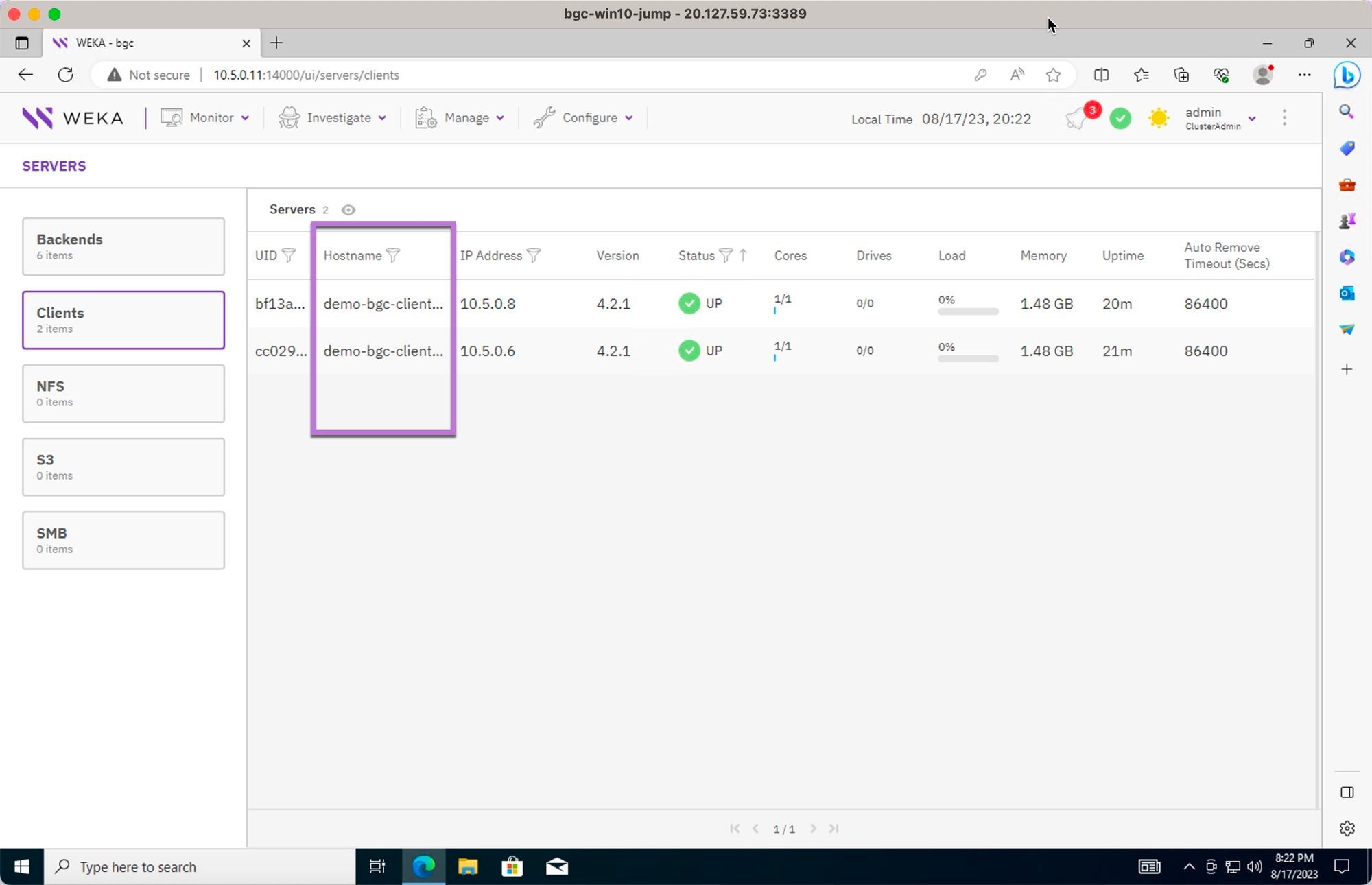

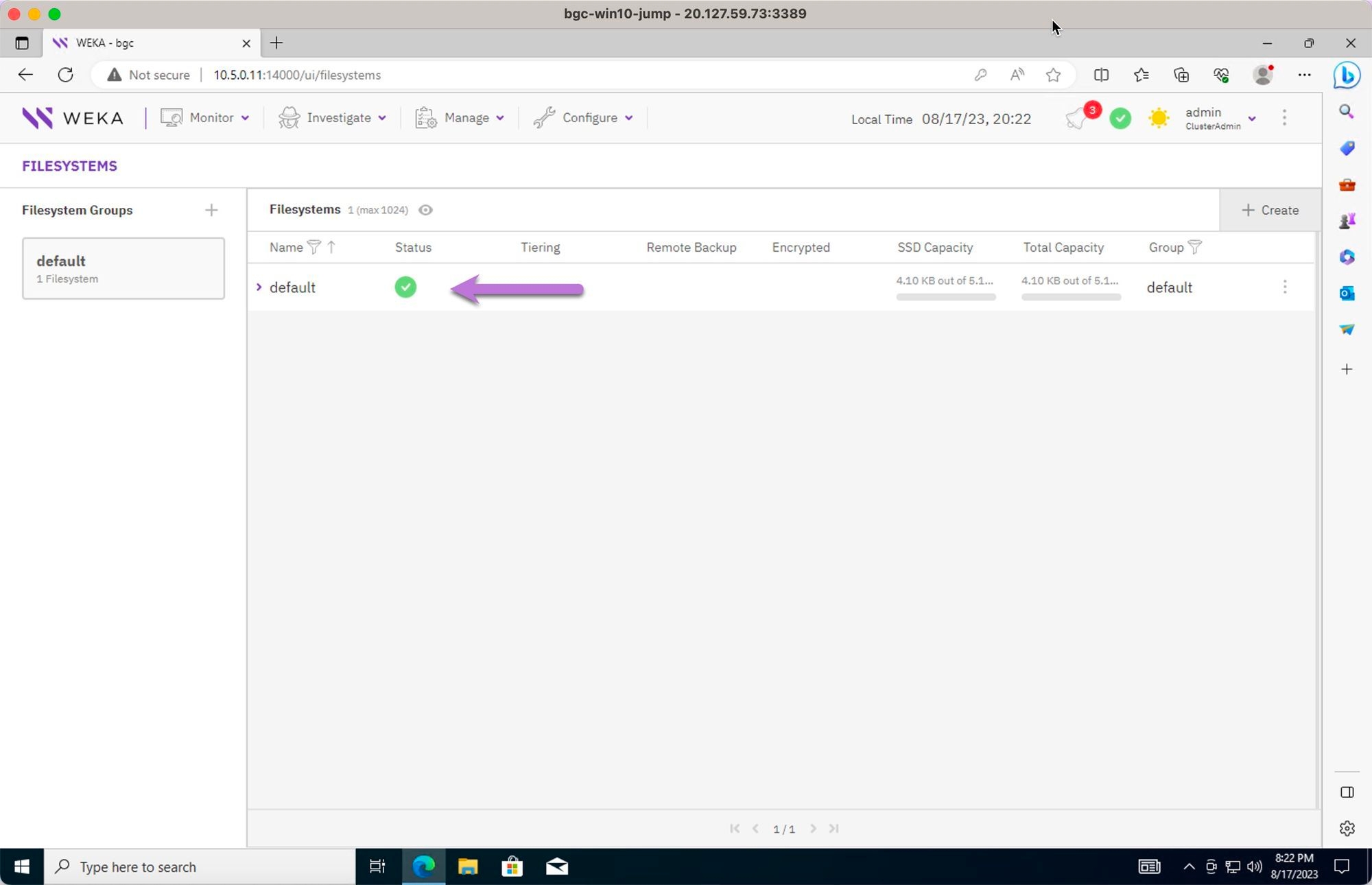

Review the WEKA Cluster:

Cluster home screen: View the cluster home screen for an overview of the system status.

Cluster Backends: Review the status and details of the backend servers within the cluster (the server names may differ from those shown in examples).

Clients: If there are any clients attached to the cluster, review their details and status.

Filesystems: Review the filesystems associated with the cluster for their status and configuration.

Scaling WEKA clusters with automated workflows

Scaling your WEKA cluster, whether scale-out (expanding) or scale-in (contracting), is streamlined using the AWS Auto Scaling group policy set up by Terraform. This process uses Terraform-created Lambda functions to manage automation tasks, ensuring that backend instances are efficiently added to or removed from the cluster as needed.

Advantages of auto-scaling

Integration with ALB:

Traffic distribution: Auto Scaling Groups work seamlessly with an Application Load Balancer (ALB) to distribute traffic efficiently among instances.

Health checks: The ALB directs traffic only to healthy instances, based on results from the associated Auto Scaling Group's health checks.

Auto-healing:

Instance replacement: If an instance fails a health check, auto-scaling automatically replaces it by launching a new instance.

Health verification: The new instance is only added to the ALB’s target group after passing health checks, ensuring continuous availability and responsiveness.

Graceful scaling:

Controlled adjustments: Auto-scaling configurations can be customized to execute scaling actions gradually.

Demand adaptation: This approach prevents sudden traffic spikes and maintains stability while adapting to changing demand.

Procedure

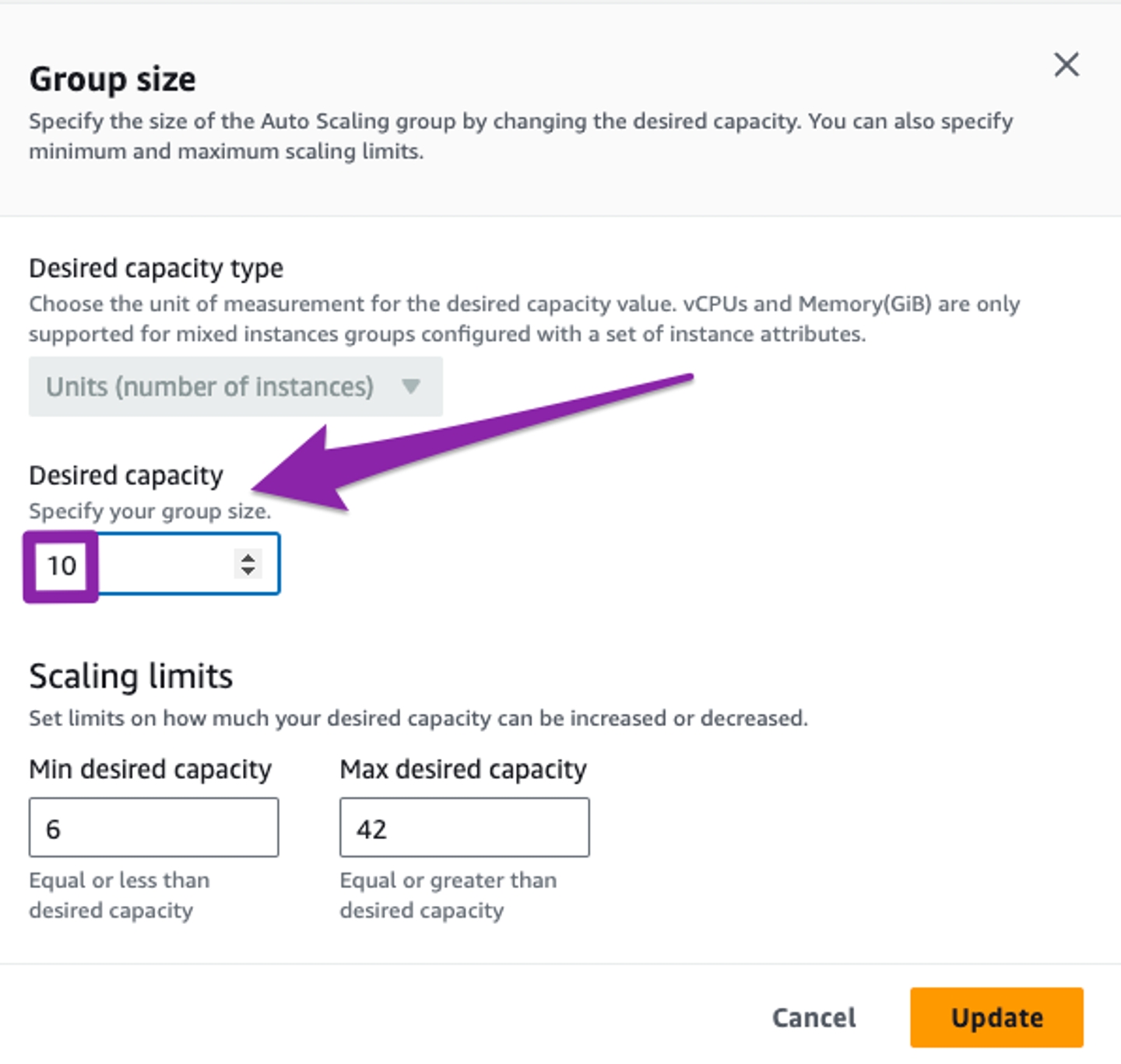

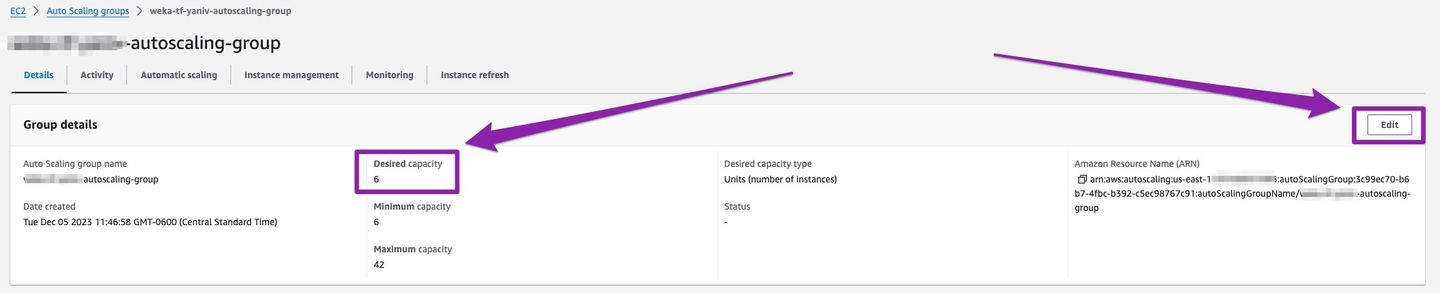

Navigate to the Auto Scaling groups page in the AWS Management Console.

Select Edit to adjust the desired capacity.

Set the capacity to your preferred cluster size (for example, increase from 6 to 10 servers).

Select Update to save the settings and initiate the scaling operation.
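
The same capacity change can be made from the AWS CLI instead of the console. The sketch below assumes an authenticated AWS CLI; the function name scale_weka_asg is hypothetical, and the Auto Scaling group name must be taken from your own deployment. The requested capacity has to fall within the minimum and maximum size of the group.

```shell
# Hypothetical helper: set the desired capacity of an Auto Scaling group.
# $1 = Auto Scaling group name, $2 = desired number of backend instances.
scale_weka_asg() {
  aws autoscaling set-desired-capacity \
    --auto-scaling-group-name "$1" \
    --desired-capacity "$2"
}
```

For the example in the procedure, the second argument would change from 6 to 10.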

Test WEKA cluster self-healing functionality

Testing the self-healing functionality of a WEKA cluster involves decommissioning an existing instance and observing whether the Auto Scaling Group (ASG) automatically replaces it with a new instance. This process verifies the cluster’s ability to maintain capacity and performance despite instance failures or terminations.

Procedure

Identify the old instance: Determine the EC2 instance you want to decommission. Selection criteria may include age, outdated configurations, or specific maintenance requirements.

Verify auto-scaling configuration: Ensure your Auto Scaling Group is configured with a minimum of 7 instances (or more) and that the desired capacity is set to maintain the appropriate number of instances in the cluster.

Terminate the old instance: Use the AWS Management Console, AWS CLI, or SDKs to manually terminate the selected EC2 instance. This action prompts the ASG to initiate the replacement process.

Monitor auto-scaling activities: Track the ASG’s activities through the AWS Console or AWS CloudWatch. Confirm that the ASG recognizes the terminated instance and begins launching a new instance.

Verify the new instance: After it is launched, ensure it passes all health checks and integrates successfully into the cluster, maintaining overall cluster capacity.

Check load balancer: If a load balancer is part of your setup, verify that it detects and registers the new instance to ensure proper load distribution across the cluster.

Review auto-scaling logs: Examine CloudWatch logs or auto-scaling events for any issues or errors related to terminating the old instance and introducing the new one.

Document and monitor: Record the decommissioning process and continuously monitor the cluster to confirm that it operates smoothly with the new instance.
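
The terminate and monitor steps of this procedure can be sketched with the AWS CLI. Both function names below are hypothetical, and the instance ID and Auto Scaling group name are values from your own deployment. Terminating an instance is destructive, so run this only against a cluster intended for testing.

```shell
# Hypothetical helper: terminate one backend instance to trigger replacement.
# $1 = EC2 instance ID of the backend to decommission.
terminate_backend() {
  aws ec2 terminate-instances --instance-ids "$1"
}

# Hypothetical helper: list recent scaling activities for the group, to
# confirm that a replacement instance is being launched.
# $1 = Auto Scaling group name.
show_scaling_activities() {
  aws autoscaling describe-scaling-activities \
    --auto-scaling-group-name "$1" \
    --max-items 10
}
```

Running show_scaling_activities a few times after the termination shows whether the group has recorded the terminated instance and started launching a new one.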

APPENDICES

Appendix A: Security Groups / network ACL ports

Appendix B: Terraform’s required permissions examples

The minimum IAM Policies needed are based on the assumption that the network, including VPC, subnets, VPC Endpoints, and Security Groups, is created by the end user. If IAM roles or policies are pre-established, some permissions may be omitted.

The policy exceeds the 6144 character limit for IAM Policies, necessitating its division into two separate policies.

In each policy, replace the placeholders, such as account-number, prefix, and cluster-name, with the corresponding actual values.

Parameters:

DynamoDB: Full access is granted as your setup requires creating and managing DynamoDB tables.

Lambda: Full access is needed for managing various Lambda functions mentioned.

State Machine (AWS Step Functions): Full access is given for managing state machines.

Auto Scaling Group & EC2 Instances: Permissions for managing Auto Scaling groups and EC2 instances.

Application Load Balancer (ALB): Required for operations related to load balancing.

CloudWatch: Necessary for monitoring and managing CloudWatch rules and metrics.

Secrets Manager: Access for managing secrets in AWS Secrets Manager.

IAM: PassRole and GetRole are essential for allowing resources to assume specific roles.

KMS: Permissions for Key Management Service, assuming you use KMS for encryption.

Customization:

Resource Names and ARNs: Replace "Resource": "*" with specific ARNs for your resources to tighten security. Use specific ARNs for KMS keys as well.

Region and Account ID: Replace region and account-id with your AWS region and account ID.

Key ID: Replace key-id with the ID of the KMS key used in your setup.

Important notes:

This is a broad policy for demonstration. It's recommended to refine it based on your actual resource usage and access patterns.

You may need to add or remove permissions based on specific requirements of your Terraform module and AWS environment.

Testing the policy in a controlled environment before applying it to production is advisable to ensure it meets your needs without overly restricting or exposing your resources.

Appendix C: IAM Policies required by WEKA

The following policies are essential for all components to function on AWS. Terraform automatically creates these policies as part of the automation process. However, you also have the option to create and define them manually within your Terraform modules.
